Journey to Metal

Transition to Equinix Metal with a clear understanding of what direct access to, and control over, bare metal means for networking, operating systems, storage, security, and services, compared with traditional cloud providers.

You've made the big decision! It is time to move ~~house~~ cloud-provider. Equinix Metal is your new home.

Or maybe you are concerned about being all-in on one provider, and want to mitigate your risk by maturing to a multicloud strategy.

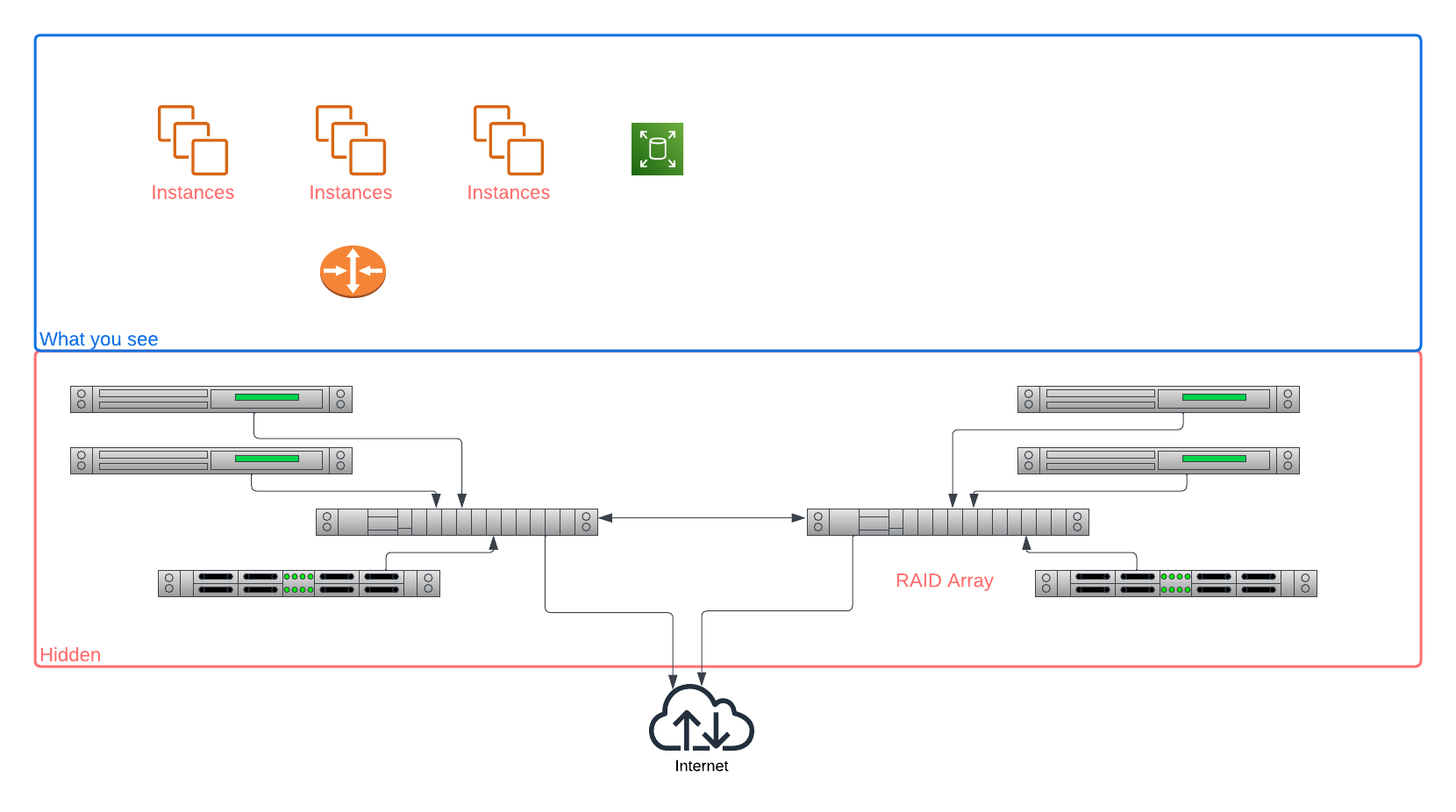

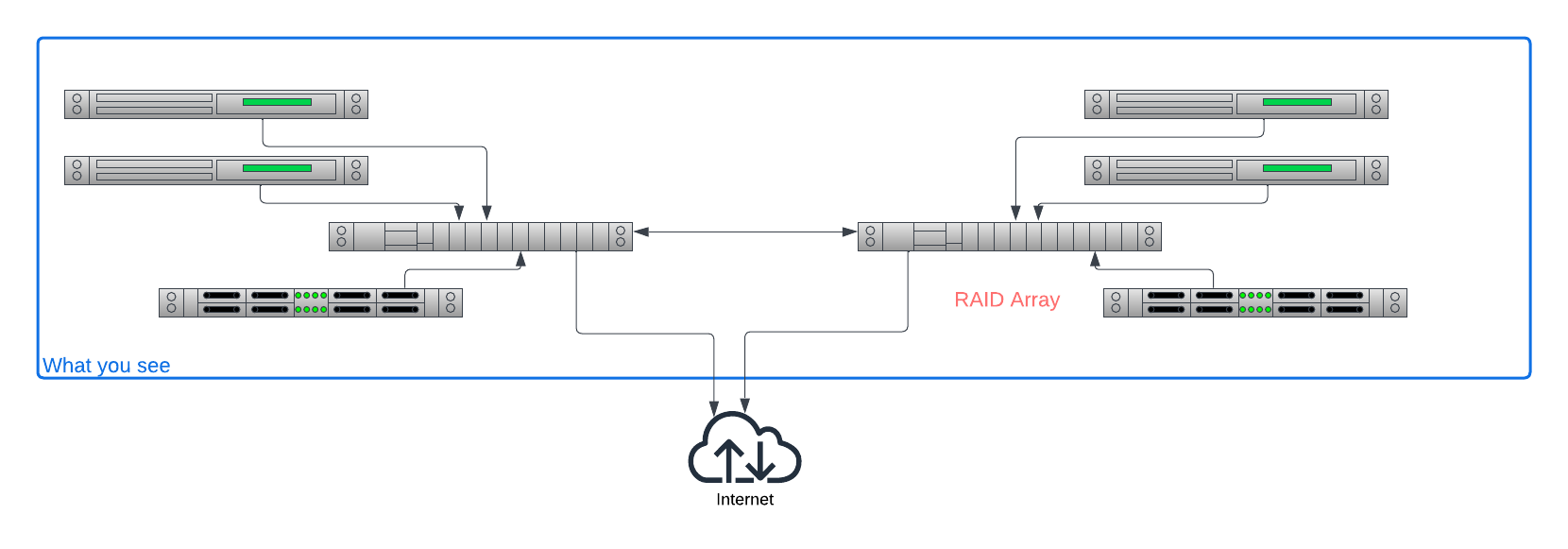

Of course, every cloud provider is different, and Equinix Metal is no exception. In some ways, Equinix Metal differs even more, in that you are working directly with the bare metal. This means the actual server, not the virtual machine (VM) on top. It means the actual network, not a software-defined network (SDN) overlay. It means the actual drive, not a network block storage volume that is made to look local to your VM.

It also means that some of the abstracted services to which you have offloaded your concerns - databases, firewalls, load balancers - simply do not exist.

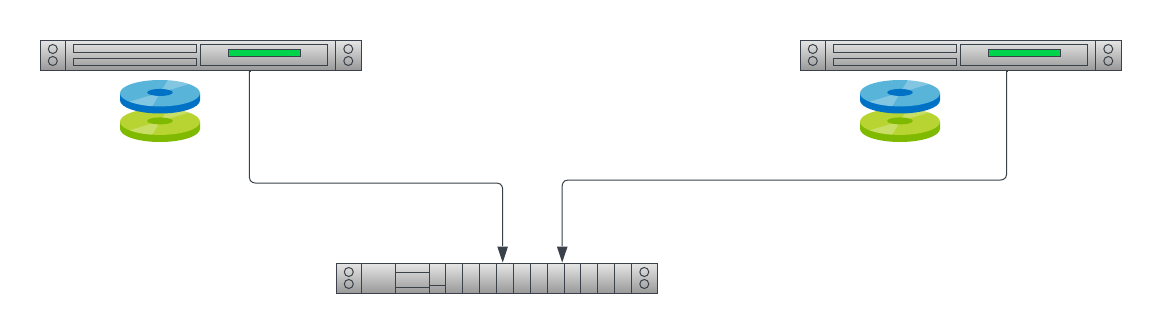

You are going from this:

To this:

Your hyperscaler cloud service provider (CSP) made things simpler for you by hiding many things. With Equinix Metal, you are in control, but that means you need to take control. Equinix Metal gives you the power, but you need to work to take advantage of it.

What do we need to plan for? What will be different?

There are a lot of answers to that question; we will focus on the five most important ones.

1. Networking

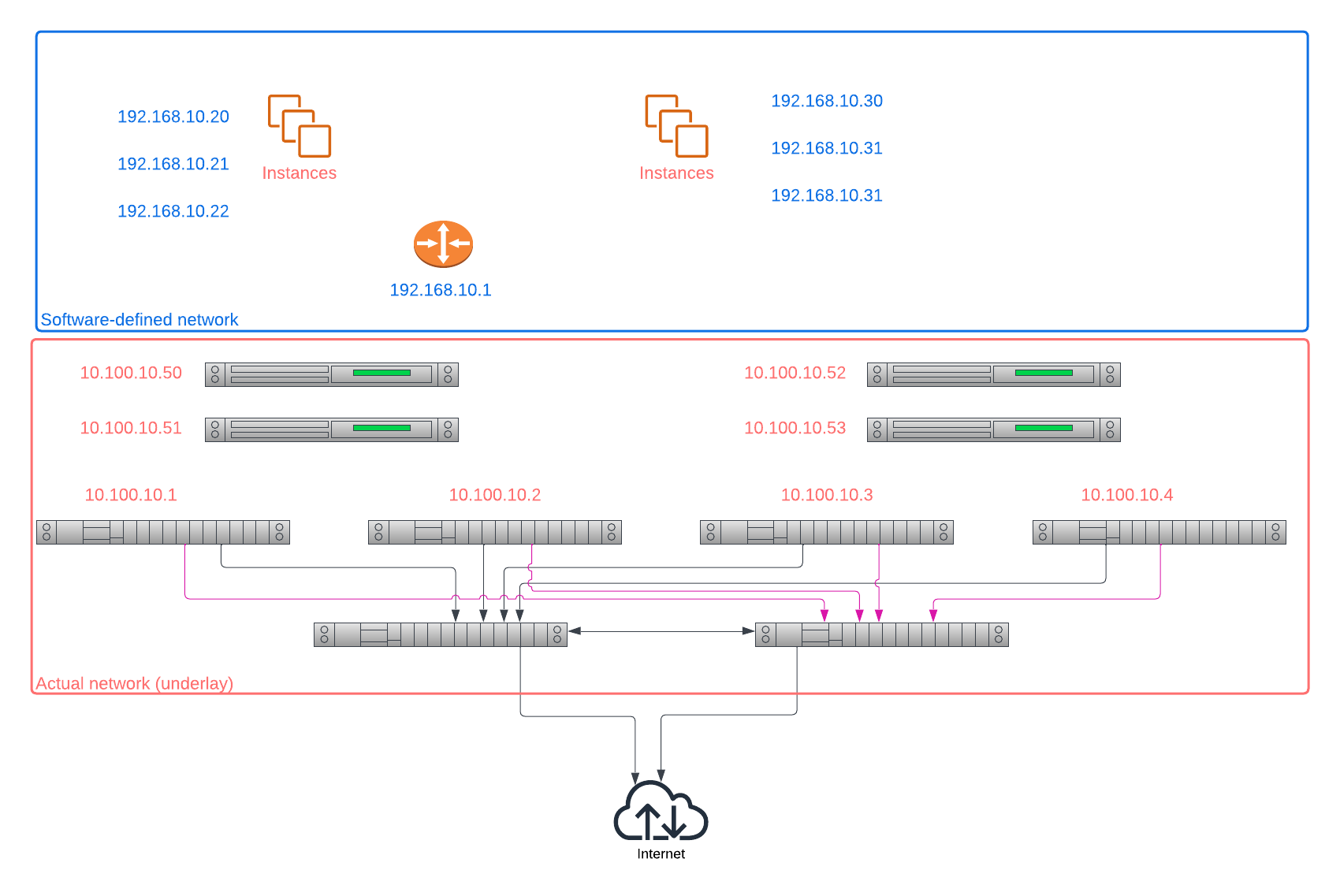

In most other CSPs, when you set up your project, you create a virtual private cloud, or VPC. You assign it the CIDR network range that you want, and then you divvy it up into subnets as you wish.

Of course, your CSP is not letting you slice and dice their actual network! They won't even let you near it.

Instead, you are creating a software-defined network (SDN), which is layered on top of the CSP's actual network. Your instances only see virtual network interfaces (VIFs), which are connected to the SDN. All your instance sees is, "I have a network, the IP ranges and subnets are exactly as I expected."

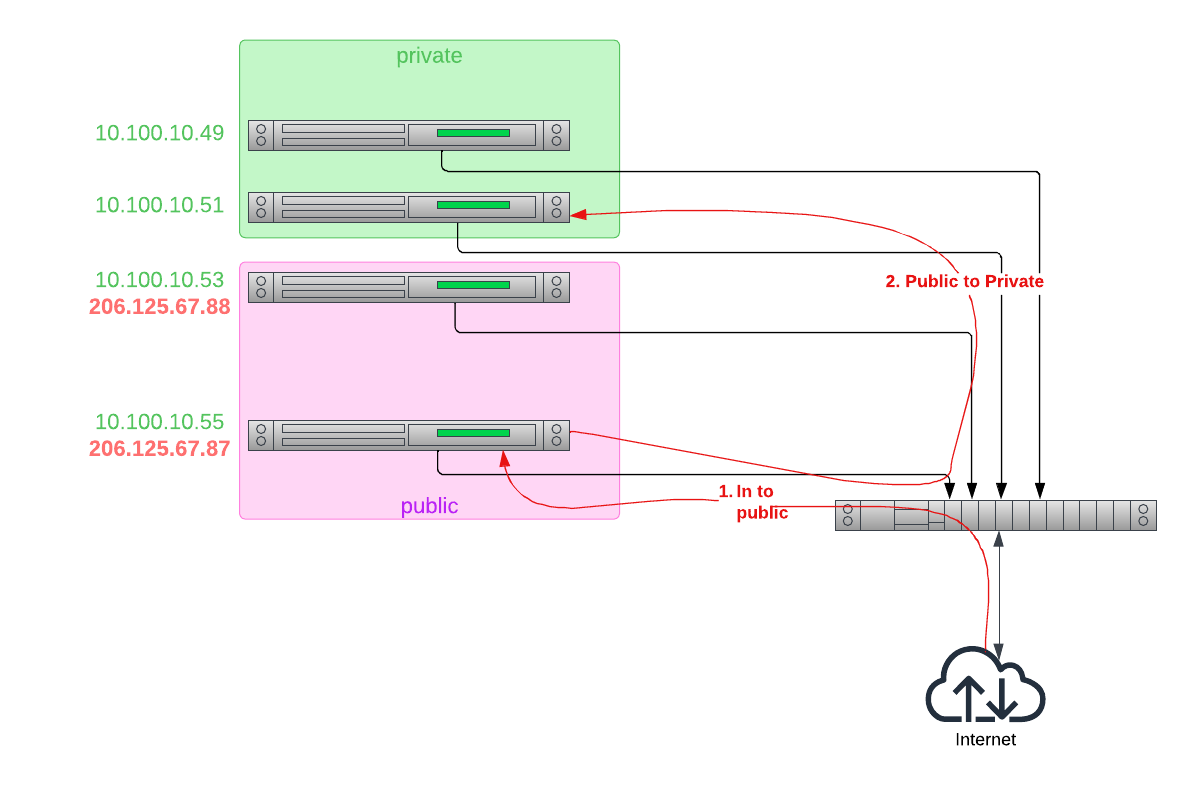

With Equinix Metal, you are working directly with the actual network. You are not creating a virtual network. This has several implications.

First, you cannot control the IP address you get. Equinix Metal is giving your host a real IP address. In the case of private addresses, it is taken from the private IP range 10.0.0.0/8, specifically the subset for the metro in which you deploy the server.

No two servers in the same metro have the same private IP.

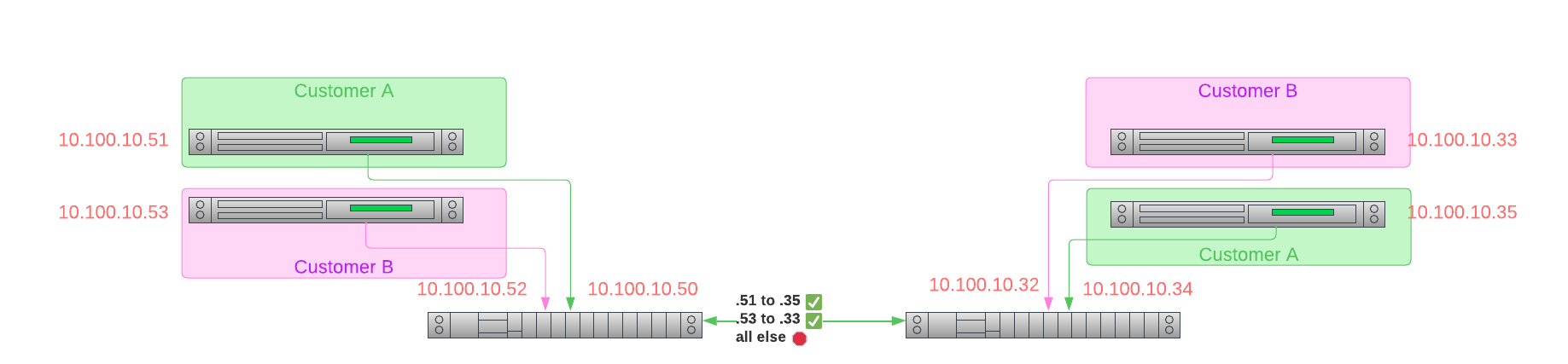

How, then, you ask, does Equinix Metal keep your server from communicating with the server in another project you own, let alone one from someone else? Simple: routing. Equinix Metal routers are aware of which IPs "go together", and essentially firewall off any traffic that should not be allowed.

You also cannot control the public IP address you get, although this is not that different from other CSPs. Like all CSPs, if you request a public address, the provider - Equinix Metal or any other - gives you one from its range.

And, like most cloud providers, Equinix Metal offers "Elastic IPs": addresses that are assigned not to a specific server but to your account, so that you can move them around, or even assign them to multiple servers at once. For more information, read our Elastic IP Getting Started Guide.

Second, you need to assign the IPs to your servers. In many other CSPs, the CSP-provided OS images run DHCP, and the provider runs DHCP servers, which allow the hosts to just boot up and get IPs.

Equinix Metal does not use DHCP, and does not run DHCP servers. This means that the Equinix Metal-assigned private address, the assigned public address, and any other addresses are all assigned via static IP addressing, which means that, some of the time, you need to add them yourself.

What does "some of the time" mean?

Equinix Metal engineers are pretty smart. Despite using straight, off the shelf, ready-to-run operating system images, they have built the boot process so that, if a public IP is assigned at boot time, it will be injected automatically.

Try it out. Go to the console, deploy a new server with a public IP, log in, and you will see that it has the IP addresses assigned.

    root@t3-small-x86-01:~# ifconfig bond0
    bond0: flags=5187<UP,BROADCAST,RUNNING,MASTER,MULTICAST> mtu 1500
            inet 145.40.102.89 netmask 255.255.255.254 broadcast 255.255.255.255
            inet6 fe80::b696:91ff:fe88:f560 prefixlen 64 scopeid 0x20<link>
            inet6 2604:1380:a0:9600::1 prefixlen 127 scopeid 0x0<global>
            ether b4:96:91:88:f5:60 txqueuelen 1000 (Ethernet)
            RX packets 1271 bytes 494173 (494.1 KB)
            RX errors 0 dropped 0 overruns 0 frame 0
            TX packets 967 bytes 139960 (139.9 KB)
            TX errors 0 dropped 7 overruns 0 carrier 0 collisions 0

    bond0:0: flags=5187<UP,BROADCAST,RUNNING,MASTER,MULTICAST> mtu 1500
            inet 10.1.108.129 netmask 255.255.255.254 broadcast 255.255.255.255
            ether b4:96:91:88:f5:60 txqueuelen 1000 (Ethernet)

    enp3s0f0: flags=6211<UP,BROADCAST,RUNNING,SLAVE,MULTICAST> mtu 1500
            ether b4:96:91:88:f5:60 txqueuelen 1000 (Ethernet)
            RX packets 1271 bytes 494173 (494.1 KB)
            RX errors 0 dropped 0 overruns 0 frame 0
            TX packets 967 bytes 139960 (139.9 KB)
            TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

    enp3s0f1: flags=6147<UP,BROADCAST,SLAVE,MULTICAST> mtu 1500
            ether b4:96:91:88:f5:60 txqueuelen 1000 (Ethernet)
            RX packets 0 bytes 0 (0.0 B)
            RX errors 0 dropped 0 overruns 0 frame 0
            TX packets 0 bytes 0 (0.0 B)
            TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

    lo: flags=73<UP,LOOPBACK,RUNNING> mtu 65536
            inet 127.0.0.1 netmask 255.0.0.0
            inet6 ::1 prefixlen 128 scopeid 0x10<host>
            loop txqueuelen 1000 (Local Loopback)
            RX packets 214 bytes 17370 (17.3 KB)
            RX errors 0 dropped 0 overruns 0 frame 0
            TX packets 214 bytes 17370 (17.3 KB)
            TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

If you look at the network configuration, in /etc/network/interfaces, you will see that these were statically assigned:

    root@t3-small-x86-01:~# cat /etc/network/interfaces
    auto lo
    iface lo inet loopback

    auto enp3s0f0
    iface enp3s0f0 inet manual
        bond-master bond0

    auto enp3s0f1
    iface enp3s0f1 inet manual
        pre-up sleep 4
        bond-master bond0

    auto bond0
    iface bond0 inet static
        address 145.40.102.89
        netmask 255.255.255.254
        gateway 145.40.102.88
        bond-downdelay 200
        bond-miimon 100
        bond-mode 4
        bond-updelay 200
        bond-xmit_hash_policy layer3+4
        bond-lacp-rate 1
        bond-slaves enp3s0f0 enp3s0f1
        dns-nameservers 147.75.207.207 147.75.207.208

    iface bond0 inet6 static
        address 2604:1380:a0:9600::1
        netmask 127
        gateway 2604:1380:a0:9600::

    auto bond0:0
    iface bond0:0 inet static
        address 10.1.108.129
        netmask 255.255.255.254
        post-up route add -net 10.0.0.0/8 gw 10.1.108.128
        post-down route del -net 10.0.0.0/8 gw 10.1.108.128

The important parts are the lines iface bond0 and iface bond0:0. Notice that both of these are marked static.
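The netmask 255.255.255.254 in both stanzas is a /31, a two-address block that holds just the server and its gateway. As a minimal sketch (the helper name `peer_address` is made up for illustration and is not part of any Equinix Metal tooling), Python's standard `ipaddress` module can recover the gateway from the address and netmask in the listing:

```python
import ipaddress


def peer_address(address: str, netmask: str) -> str:
    """Return the other address in a two-address (/31) block.

    Hypothetical helper for illustration only.
    """
    iface = ipaddress.ip_interface(f"{address}/{netmask}")
    if iface.network.prefixlen != 31:
        raise ValueError("expected a /31 (netmask 255.255.255.254)")
    # A /31 holds exactly two addresses; the peer is the one that is not ours.
    return str(next(a for a in iface.network if a != iface.ip))


# Values taken from the /etc/network/interfaces listing.
print(peer_address("145.40.102.89", "255.255.255.254"))  # 145.40.102.88
print(peer_address("10.1.108.129", "255.255.255.254"))   # 10.1.108.128
```

Both results match the `gateway` and `gw` values in the file, which is a quick sanity check when you write static stanzas by hand.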

However, if you change the IP, add an Elastic IP, or add a public IP after initial boot, it is up to you to configure your addresses. This is not hard, but it may be different from what you are used to.
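To give a feel for what "configure your addresses" involves, here is a rough sketch that renders an extra static stanza in the same style as the `bond0:0` entry in the file. Everything specific in it is an assumption: the function name, the alias `bond0:1`, and the address `203.0.113.10` (taken from a range reserved for documentation). Check the Elastic IP guide for the interface Equinix Metal recommends before applying anything like this.

```python
import ipaddress


def render_alias_stanza(alias: str, address: str, prefix_len: int = 32) -> str:
    """Render an ifupdown-style static stanza for one additional address.

    Hypothetical helper; the alias name and address are placeholders.
    """
    iface = ipaddress.ip_interface(f"{address}/{prefix_len}")
    lines = [
        f"auto {alias}",
        f"iface {alias} inet static",
        f"    address {iface.ip}",
        f"    netmask {iface.netmask}",
    ]
    return "\n".join(lines) + "\n"


# Example: a single (/32) address on a made-up alias interface.
print(render_alias_stanza("bond0:1", "203.0.113.10"))
```

The output is plain text that you would review and append to /etc/network/interfaces yourself; the sketch does not touch the running network configuration.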

2. Operating Systems

If you are used to hyperscaler CSPs, or even a private cloud such as one built on VMware, then you are used to operating system images. You either pick one from the provider's ready-to-run set or a marketplace, or build your own and save it in the provider's format to their storage.

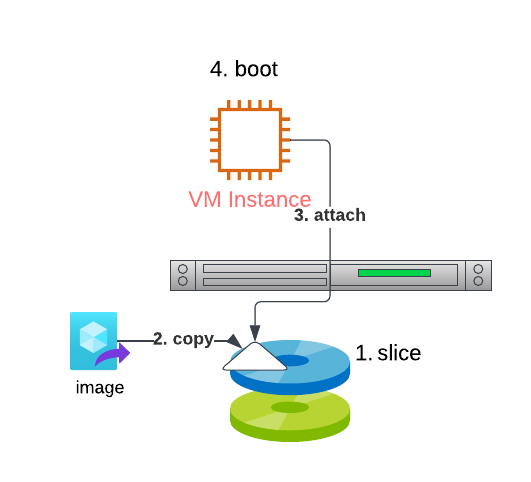

This works for the most part because they are using VMs and virtualized storage. When you create an instance, they do something similar to:

- Create a slice of disk space for the boot, whether local on the physical device or on a storage area network (SAN) (and they usually prefer the network)

- Copy your chosen operating system image to that slice

- Attach that slice to the VM, making it look like its own block storage device

- Boot the VM, pointing to that block storage device

When you are working with physical machines, a lot of this is not available. There are no "hidden disks" on the "underlying physical machine" or the network that you can slice up and "present to the VM" as if it were a "real attached disk". You are getting the entire local disk, and that is it.

How do you solve this?

Well, it has been solved for years now. Long before there were clouds, or even VMs, organizations needed to be able to boot lots of machines.

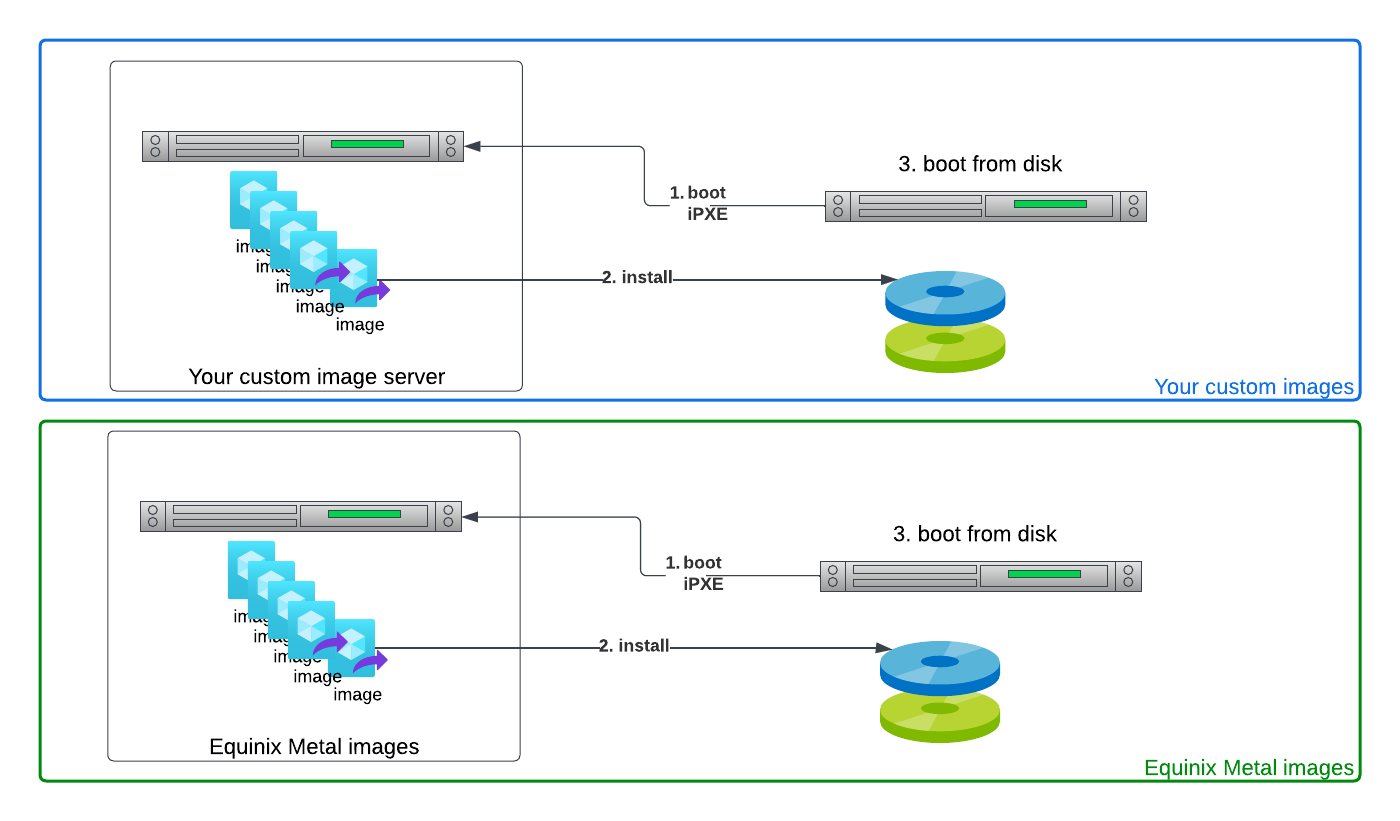

We rely on network booting and configuration management. We use iPXE to boot every server from the network. The modestly brilliant Equinix Metal engineers built a system that tracks each server, what OS it should be booting, and what state it is in.

For more details on iPXE, see our What is iPXE guide.

This leaves you with two options when getting ready to deploy your new server.

First, you can pick from our pre-built images. When you pick these, you are configuring Equinix Metal to say, "when my server boots and reaches out over the network, serve up that ready-to-run image."

Second, you can use any custom image you want. In that case, you need to make it available over the network. It can be from another server on the Equinix Metal network, e.g. in your project, or even out on the public Internet. You just tell Equinix Metal, when configuring the server, where to find the iPXE configuration for it.
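For the custom-image route, the thing you point Equinix Metal at is an iPXE script. The sketch below only shows the general shape of such a script, using the standard iPXE `kernel`, `initrd`, and `boot` commands. The function name, the `boot.example.com` URLs, and the `quiet` kernel argument are placeholders; a real script depends on the operating system you are booting.

```python
def render_ipxe_script(kernel_url: str, initrd_url: str, kernel_args: str = "") -> str:
    """Render a bare-bones iPXE script that loads a kernel and initrd, then boots.

    Hypothetical helper; URLs and kernel arguments are supplied by the caller.
    """
    # rstrip() avoids a trailing space when no kernel arguments are given.
    kernel_line = f"kernel {kernel_url} {kernel_args}".rstrip()
    lines = [
        "#!ipxe",                 # marks the file as an iPXE script
        kernel_line,              # fetch the kernel over the network
        f"initrd {initrd_url}",   # fetch the initial ramdisk
        "boot",                   # hand control to the loaded kernel
    ]
    return "\n".join(lines) + "\n"


print(render_ipxe_script(
    "https://boot.example.com/vmlinuz",
    "https://boot.example.com/initrd.img",
    "quiet",
))
```

You would host the resulting file somewhere the server can reach over HTTP or HTTPS and give that location to Equinix Metal when configuring the server.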

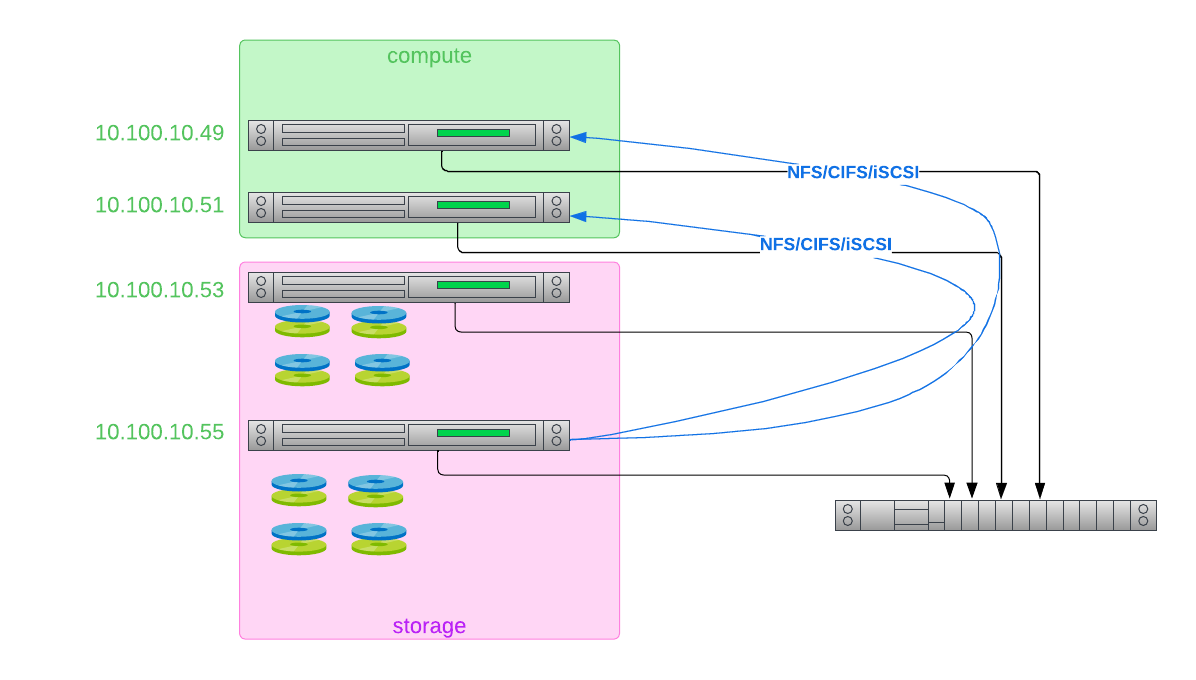

3. Storage

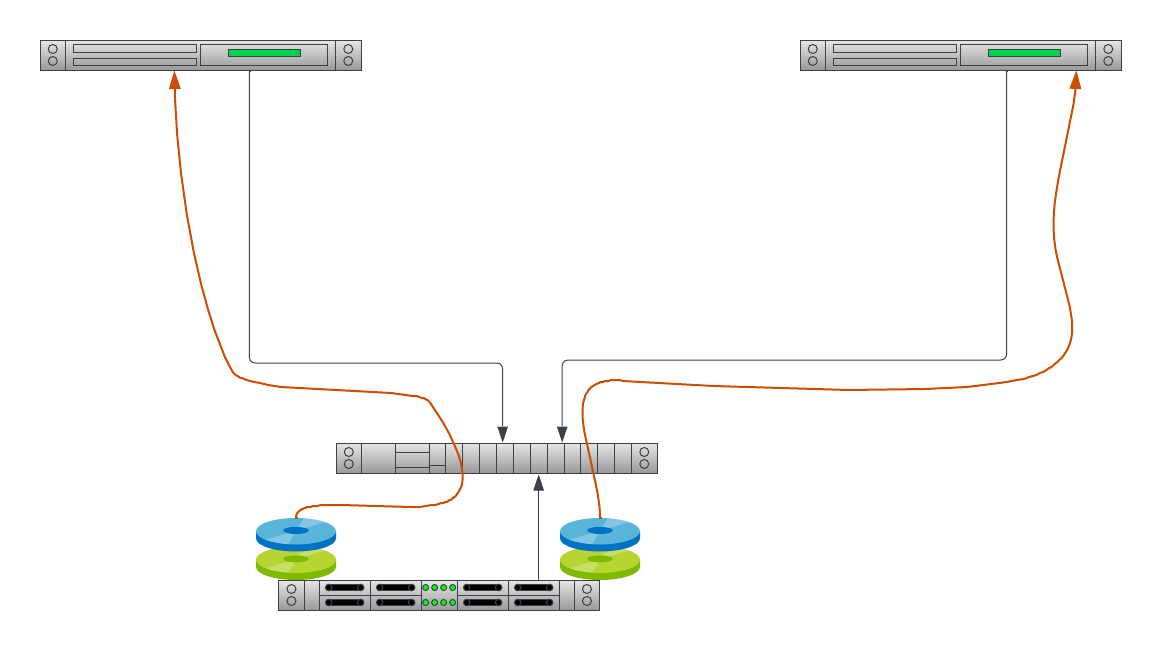

Many CSPs have a combination of network block storage, or "elastic block storage", and local storage. Actually, most of them encourage you to use their network storage, rather than local storage. Many instances do not have any local storage at all.

This is for two reasons.

- It is much more cost-effective for them to slice up a massive storage array in a SAN and present each slice to as many customers as possible, than to try slicing local storage, which is limited to the VMs on that physical device, and therefore will be more wasteful.

- It is much easier to manage a SAN centrally than it is to manage local storage on hundreds of thousands or even millions of physical servers.

At Equinix Metal, we don't manage a massive array of network storage. Actually, we don't manage any storage at all. When you deploy a server, you get a lot of storage locally. That means you get all of the performance benefits of storage separated from your application by less than a few inches of high-speed bus.

This is great for performance, but it does mean that you need to manage your own storage. If you want multiple partitions, RAID arrays, or other filesystems, e.g. the high-performance, scalable ZFS, that is up to you.
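When you plan a RAID layout yourself, the first question is usually how much usable space is left after redundancy. The following is a small worked sketch using the standard capacity rules for common RAID levels. The drive count and size are made-up example values, and the figures ignore filesystem and metadata overhead.

```python
def usable_capacity_gb(level: str, drives: int, drive_gb: int) -> int:
    """Usable capacity for identical drives under common RAID levels."""
    minimum = {"raid0": 2, "raid1": 2, "raid5": 3, "raid6": 4, "raid10": 4}
    if level not in minimum:
        raise ValueError(f"unsupported RAID level: {level}")
    if drives < minimum[level]:
        raise ValueError(f"{level} needs at least {minimum[level]} drives")
    if level == "raid0":
        return drives * drive_gb         # striping only, no redundancy
    if level == "raid1":
        return drive_gb                  # every drive holds a full copy
    if level == "raid5":
        return (drives - 1) * drive_gb   # one drive's worth of parity
    if level == "raid6":
        return (drives - 2) * drive_gb   # two drives' worth of parity
    if drives % 2:
        raise ValueError("raid10 needs an even number of drives")
    return (drives // 2) * drive_gb      # striped mirrored pairs


# Example: four hypothetical 480 GB drives.
for level in ("raid0", "raid1", "raid5", "raid6", "raid10"):
    print(level, usable_capacity_gb(level, 4, 480))
```

With four 480 GB drives this prints 1920, 480, 1440, 960, and 960 GB respectively, which makes the trade-off between capacity and redundancy easy to compare.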

4. Security

Security is a very broad topic, one that touches every aspect of technology. That makes it far too deep for us to do it justice. We do, however, want to highlight a few things, specifically related to network security.

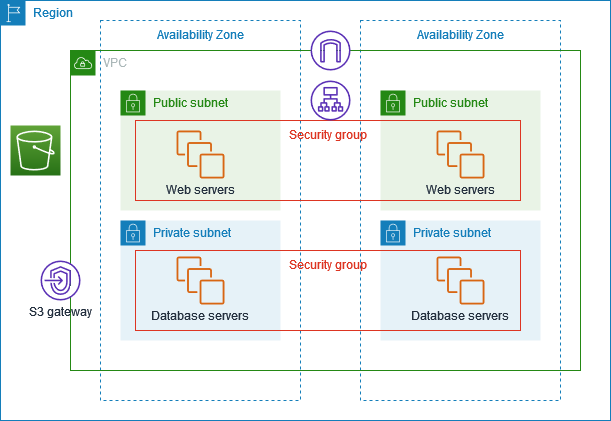

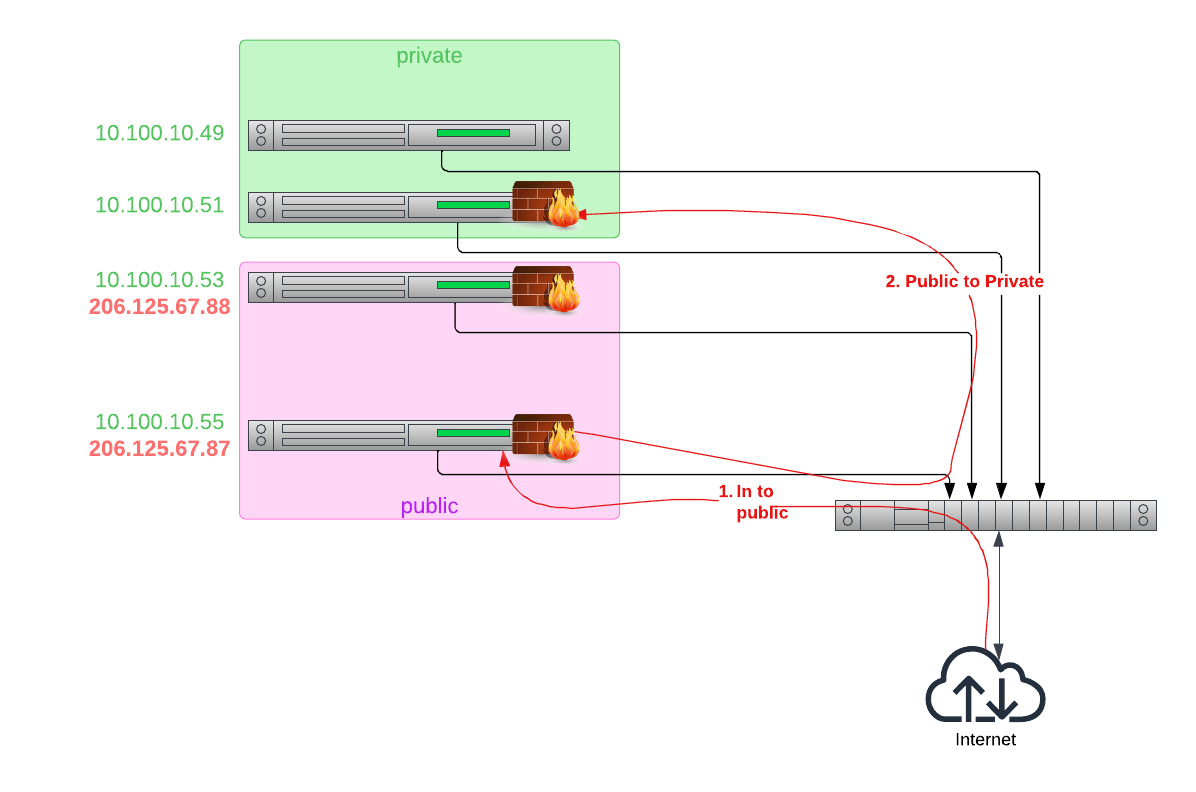

For cloud providers with VPCs, it is common practice to create a multi-tier network, not that different from what we have been doing on premises for decades. It can get much more complex than this, depending on your requirements, but the basic form, in a nutshell, is:

- Create a VPC

- Create public and private subnets

- Create firewall access rules that tightly control which traffic can enter each subnet. For example, only allow https/443 into the public subnet, and only allow database connections, and only from the public subnet, into the private subnet.

- Create security groups on the various servers to further protect them.

You provide limited Internet access, and maybe public IPs, only to servers in the "public subnet"; the "private subnet" cannot be accessed from the Internet at all.
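The two-tier policy described above can be modeled as a default-deny rule table. This is only an illustrative sketch, not how any provider implements its firewalls: the subnet `10.0.1.0/24`, the database port 5432, and all names are assumptions chosen for the example.

```python
import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    source: str  # CIDR block allowed to connect
    port: int    # destination TCP port


PUBLIC_SUBNET = "10.0.1.0/24"  # hypothetical address range of the public tier

# Anything not listed here is denied.
POLICY = {
    "public": [Rule("0.0.0.0/0", 443)],       # https from anywhere
    "private": [Rule(PUBLIC_SUBNET, 5432)],   # database traffic from the public tier only
}


def is_allowed(tier: str, src_ip: str, dst_port: int) -> bool:
    """Return True if a connection into the given tier matches an allow rule."""
    src = ipaddress.ip_address(src_ip)
    return any(
        dst_port == rule.port and src in ipaddress.ip_network(rule.source)
        for rule in POLICY[tier]
    )


print(is_allowed("public", "198.51.100.7", 443))    # True
print(is_allowed("public", "198.51.100.7", 22))     # False
print(is_allowed("private", "10.0.1.15", 5432))     # True
print(is_allowed("private", "198.51.100.7", 5432))  # False
```

Writing the policy down in this form first makes it easier to translate into whatever enforcement point you end up using.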

AWS's examples for VPC include exactly these. The Web and database servers example has web servers in one security group in the public subnet, and database servers in another security group in the private subnet.

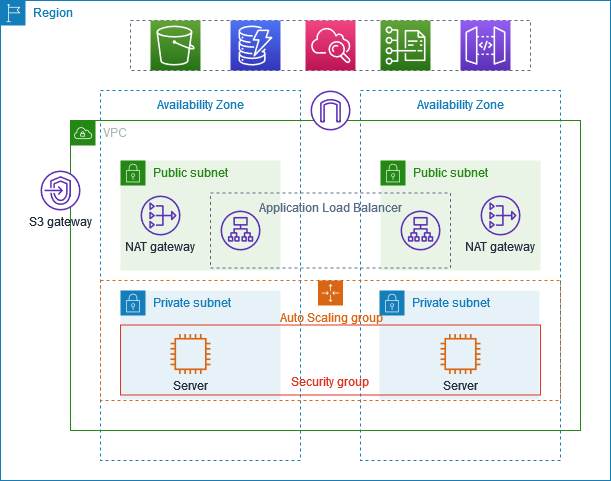

The Private servers example goes even further, putting nothing in the public subnet but AWS-managed load balancers.

What does all of the above have to do with Equinix Metal?

Equinix Metal provides you with the power, and the complexity, of direct access to the underlying network. This means that there are no VPCs, no subnets, no provider firewalls. If these things are important to you, you need to plan for them.

How can you create these network security constructs in Equinix Metal?

There are several paths.

First, for servers that should not be publicly reachable, do not assign a public IP address. Lest you be concerned, this is more than just not putting the address on the server. Because you do not assign an address, the upstream Equinix Metal router should not route any public packets to the server. The Equinix Metal network infrastructure functions as a firewall, blocking those servers off from the Internet.

You can then put both public and private addresses on those servers that should reach the Internet, making those servers the gateways to your private servers.

Whether those servers act like firewalls, or load balancers, or are Internet-exposed Web servers, is up to you.

Second, you can put firewall software on your servers. They can be on your backend servers, your front-end servers, your gateways, or all of the above.

Third, Equinix Metal allows you to define your own networks using Virtual Local Area Networks, or VLANs. With VLANs, you control the IP addresses inside the VLAN, and set up how traffic flows into and out of the VLAN, whether to the Internet, other VLANs or even other cloud providers via Equinix Fabric.
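Because you choose the addressing inside each VLAN, it helps to write down an address plan before configuring anything. Here is a minimal sketch that carves one private block into a subnet per VLAN. The parent range `192.168.0.0/22`, the VLAN IDs 1000 and 1001, and the convention of using the first host address as the gateway are all assumptions for the example.

```python
import ipaddress


def plan_vlan_subnets(parent_cidr: str, vlan_ids: list, new_prefix: int = 24) -> dict:
    """Assign one subnet of the parent block to each VLAN ID, in order.

    Hypothetical helper; it only computes a plan and configures nothing.
    """
    parent = ipaddress.ip_network(parent_cidr)
    subnets = list(parent.subnets(new_prefix=new_prefix))
    if len(vlan_ids) > len(subnets):
        raise ValueError("parent block is too small for that many VLANs")
    plan = {}
    for vlan_id, net in zip(vlan_ids, subnets):
        # Convention assumed here: the first usable host address is the gateway.
        gateway = next(iter(net.hosts()))
        plan[vlan_id] = {"subnet": str(net), "gateway": str(gateway)}
    return plan


for vlan_id, entry in plan_vlan_subnets("192.168.0.0/22", [1000, 1001]).items():
    print(vlan_id, entry["subnet"], entry["gateway"])
```

For the example inputs this yields 192.168.0.0/24 with gateway 192.168.0.1 for VLAN 1000, and 192.168.1.0/24 with gateway 192.168.1.1 for VLAN 1001.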

For more details on available networking configurations be sure to check out the Layer 2 Networking Guide.

5. Services

Services covers a very large area. Some cloud providers try to offer as many services as possible (sometimes upsetting their prior partners on the way as they try to take their business). Offering so many services also makes it challenging for a provider to focus on its core business. Of course, some manage the mix better than others.

Cloud providers have literally thousands of managed service offerings, from load balancers and firewalls at the low level, to managed databases and Kubernetes clusters above.

We will list only a few of them.

Storage

Most cloud providers have two different kinds of storage: block and object. Block storage is just "disk drives over the network", which looks local to your VM instances. You interface with block storage usually by mounting it into your server. Object storage stores objects, and is accessed using Web protocols like https. Of course, all of those objects have to be stored somewhere. In many cases, the cloud provider uses block storage "under the covers" to store the objects.

Equinix Metal does not provide managed storage services directly. The local disks on your servers, especially SSDs and newer NVMe drives, provide a lot of storage space at no additional cost and at the fastest speed possible.

If you need shared storage across multiple servers in your project, set up one or a few servers to act as storage servers, and use a network storage protocol, like NFS or CIFS/SMB for file access, or S3 for object access, to access the storage.

Fortunately, Metal has several guides to using these storage technologies.

Of course, any local storage is sensitive to failure; you only are as good as your data! So be sure to read Equinix Metal's guide to data redundancy and guide to backups.

If you want a truly managed high-end storage solution, Equinix Metal has a partnership with Pure Storage, offering dedicated storage appliances in your project. For information, see this page. If the metal is yours, shouldn't the storage be yours, too?

Databases

Databases are a critical part of most applications. Cloud providers offer some managed databases. At Equinix Metal, you have complete control. Deploy your servers, and then put your database software on them.

When deploying databases, keep in mind the security considerations we discussed above: where and how the databases should be accessed, and the protections around them.

Kubernetes

Kubernetes is much easier to deploy and manage than when it first came out. Between kubeadm and easier-to-install distributions like k3s, running your own Kubernetes cluster is well within reach.

And in case you want to know why Equinix Metal chose not to build its own managed Kubernetes service, here is our founder Zac Smith's article on why we "forgot" to build one.

Kubernetes is really important to deploying and managing workloads in the cloud-native era, so Equinix Metal has all of the tools you need to deploy and manage a Kubernetes cluster on Equinix Metal, as well as integrate cleanly with the provider. From Cluster API to Cloud Controller Manager, we have it all.

Conclusion

Migrating your workloads from a hyperscaler cloud provider to Equinix Metal brings with it enormous benefits in terms of cost, power and flexibility. To take advantage of those, you need to understand the differences. These include how the providers differ, both in how they work and in what they offer.

Anything you can do on another cloud provider, you can do on Equinix Metal, at times much better. That power, however, comes with the need to understand how to use it and take ownership of setting it up.

Last updated: 07 January, 2025
