Kubernetes Cost Optimization and How Bare Metal Can Help

The levers you can pull to make sure you’re not overspending on the infrastructure running your clusters.

Much of the work in designing and managing application infrastructure is about striking the balance between performance, reliability and cost: How to ensure the right levels of performance and reliability without overspending?

Kubernetes is great at automating some of that work, with its ability to scale clusters up and down as needed. But simply using Kubernetes doesn’t address the cost challenges. As we outline further down in the article, multiple factors affect the overall cost of running infrastructure orchestrated by Kubernetes. A big one is the choice of the hosting platform underneath your Kubernetes clusters. And that’s what we’ll focus on in this article, explaining how to optimize those infrastructure costs, and how one particular type of infrastructure—on-demand bare metal—can be one of the most effective options.

More about Kubernetes on bare metal:

- So You Want to Run Kubernetes On Bare Metal

- Making the Right Choice: Kubernetes on Virtual Machines vs. Bare Metal

- Kubernetes Management Tools for Bare Metal Deployments

- Tutorial: Installing and Deploying Kubernetes on Ubuntu

Kubernetes and Its Cost Implications

Kubernetes is a powerhouse for container orchestration. But using it effectively isn’t trivial, especially when cost is a concern. Here’s a high-level overview of the cost considerations when running Kubernetes.

The Benefits

- Scalability: This is one of Kubernetes’s most remarkable features. As your traffic grows, it can automatically add pods to handle the increased load. When demand decreases, it scales back. Paired with node autoscaling, this means you only pay for the resources you need.

- Resource efficiency: Kubernetes schedules containers onto nodes based on the CPU and memory they request. Packing workloads together this way minimizes waste by putting capacity that would otherwise sit idle to use.

- Automated operations: Kubernetes automates many of the routine tasks required to run a containerized application, from deployment to updates and scaling. This means less manual intervention, fewer errors and lower labor costs.

The Challenges

- Complexity: The power and flexibility of Kubernetes also make it complex. Managing a Kubernetes cluster effectively requires expertise in various domains, such as networking, storage and security. Those without a solid understanding of these components may encounter hidden costs in troubleshooting, maintenance or even basic setup of the system.

- Overprovisioning: While Kubernetes excels in managing resources efficiently, misconfiguration and lack of proper monitoring can easily lead to overprovisioned resources. This usually happens when an admin doesn’t fully understand an application’s resource requirements or doesn’t monitor and adjust the system on a regular basis. Continuous assessment and rightsizing of pods and nodes can mitigate this challenge.

- Licensing and support: This part varies depending on an organization’s specific setup and needs, but there are often additional costs for licensing, third-party tools, or specialized support services. While many Kubernetes tools and resources are open source, certain enterprise-grade solutions or plugins require paid licenses or subscription fees. Examples include Datadog and New Relic, used for monitoring and logging, and the commercial offerings from Aqua Security (the company behind the open source Trivy scanner), used for security scanning. Additionally, you might need to invest in external support or training if you lack in-house expertise. All these costs can add up!

What Affects Kubernetes Costs

The choices admins make that affect the cost of running Kubernetes generally fall into four areas: hosting, usage, architecture and services, and code.

Hosting

Choosing where to host your Kubernetes cluster is a fundamental decision that directly impacts costs. AWS, Azure and Google Cloud each offer a managed Kubernetes service that simplifies many operational tasks: Amazon Elastic Kubernetes Service (EKS), Azure Kubernetes Service (AKS) and Google Kubernetes Engine (GKE), respectively. Each has its own pricing structure.

Alternatively, you can host Kubernetes on your own bare metal hardware. This requires substantial investment in equipment, facilities and maintenance but gives you the full control and performance of non-virtualized infrastructure.

There is also a happy medium, which is using on-demand bare metal servers. Equinix Metal provides these globally. They are fully automated, provisioned and deprovisioned remotely and can be managed via a robust API using the same familiar infrastructure automation tools admins use to manage traditional, virtualized cloud infrastructure.

Usage

How exactly you use your Kubernetes cluster directly affects how much it costs to run. Running more nodes, pods or applications that consume extensive CPU, memory and storage naturally costs more. Network bandwidth can also be a significant factor, especially if your applications move a lot of data between regions.

Architecture and Services

Architecture decisions and integrations with third-party services can also influence costs. Stateless applications, for instance, don’t drive storage spend, while stateful ones may need some complex and costly persistent storage solutions.

Fees for using specialized managed services, such as databases or messaging queues, further drive up costs. So does deploying across multiple regions for high availability and/or low latency via proximity to end users.

Code

How you code and package your applications can also impact cost. Applications that are coded efficiently consume fewer CPU and memory resources. A container image’s size can affect storage and network costs. Large images take up more space and can slow deployment, so it’s always best to look for ways to shrink and optimize images whenever possible.

Kubernetes Cost Optimization

Here are some common methods for keeping Kubernetes infrastructure costs under control.

Rightsizing Pods and Nodes

Cost optimization starts with ensuring that your pods and nodes are appropriately sized for the workloads they're handling. Overprovisioning resources can lead to wasted expenditures, while underprovisioning can harm performance. Careful analysis of your application's resource needs and regular monitoring and adjustment can lead to significant savings without compromising performance.
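As a rough illustration of what rightsizing can look like in practice, the sketch below derives a CPU request from observed usage samples by taking a high percentile and adding some headroom. The function name, the 95th-percentile choice, the 15% headroom and the sample values are all assumptions made for this example, not a method prescribed by Kubernetes.

```python
import math


def recommend_cpu_request(usage_millicores, percentile=95, headroom_percent=15):
    """Suggest a CPU request (in millicores) from observed usage samples.

    Hypothetical helper: takes the nearest-rank percentile of the samples
    and adds a safety margin on top.
    """
    if not usage_millicores:
        raise ValueError("need at least one usage sample")
    ordered = sorted(usage_millicores)
    # Nearest-rank percentile: the smallest sample that has at least
    # `percentile` percent of all samples at or below it.
    rank = max(1, math.ceil(percentile * len(ordered) / 100))
    baseline = ordered[rank - 1]
    return math.ceil(baseline * (100 + headroom_percent) / 100)


# Made-up samples, e.g. collected from a metrics system over a week.
samples = [120, 135, 150, 110, 400, 140, 160, 155, 130, 145]
current_request = 1000  # millicores currently requested (assumed)
suggested = recommend_cpu_request(samples)
print(f"suggested request: {suggested}m, reclaimable: {current_request - suggested}m")
```

A high percentile keeps occasional spikes covered, while the gap between the current and the suggested request is capacity that could be handed back to the scheduler.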

Autoscaling

Kubernetes has native autoscaling features for adapting clusters dynamically according to workload demands. Automatically scaling resources up and down based on real-time requirements ensures that you're only paying for what you need. It can also improve responsiveness and resilience.

```shell
# Example of enabling autoscaling in Kubernetes
kubectl autoscale deployment my-deployment --min=2 --max=5 --cpu-percent=80
```
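To make the scaling decision more concrete, here is a simplified sketch of the calculation the Horizontal Pod Autoscaler documents for a single metric: desired replicas = ceil(current replicas × current metric value / target metric value), with no change when the ratio is within a tolerance (0.1 by default). The function and parameter names are illustrative, and the real controller also accounts for pod readiness, missing metrics and stabilization windows, which this sketch ignores.

```python
import math


def desired_replicas(current_replicas, current_cpu_percent, target_cpu_percent,
                     min_replicas, max_replicas, tolerance=0.1):
    """Simplified Horizontal Pod Autoscaler decision for one CPU metric."""
    ratio = current_cpu_percent / target_cpu_percent
    if abs(ratio - 1.0) <= tolerance:
        # Close enough to the target: keep the current replica count.
        desired = current_replicas
    else:
        desired = math.ceil(current_replicas * current_cpu_percent / target_cpu_percent)
    # Never go outside the configured bounds (--min / --max above).
    return max(min_replicas, min(max_replicas, desired))


# Two replicas running at 160% of requested CPU against an 80% target.
print(desired_replicas(2, 160, 80, min_replicas=2, max_replicas=5))
```

With the bounds from the command above, a deployment never drops below two replicas or grows beyond five, no matter how far utilization drifts from the target.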

Check out our deep dives on Kubernetes autoscaling:

- What You Need to Know About Kubernetes Autoscaling

- Understanding the Role of Cluster Autoscaler in Kubernetes

Multitenant Clusters

Rather than running separate clusters for different teams or projects, you can have multiple teams share a single cluster. Properly configured, multitenancy can maximize resource utilization. Kubernetes namespaces, combined with role-based access control (RBAC), provide the basic building blocks, and tools such as Loft build on them.
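One common way to keep tenants from crowding each other out is to give each team its own namespace with a ResourceQuota. The sketch below builds both manifests as plain Python dictionaries and prints them as JSON, which kubectl accepts alongside YAML. The helper name, team name and quota values are hypothetical, and RBAC bindings are left out for brevity.

```python
import json


def tenant_manifests(team, cpu_requests, memory_requests, max_pods):
    """Build a Namespace and a ResourceQuota manifest for one tenant (hypothetical helper)."""
    namespace = {
        "apiVersion": "v1",
        "kind": "Namespace",
        "metadata": {"name": team},
    }
    quota = {
        "apiVersion": "v1",
        "kind": "ResourceQuota",
        "metadata": {"name": f"{team}-quota", "namespace": team},
        "spec": {
            "hard": {
                # Caps on the sum of resource requests across the namespace.
                "requests.cpu": cpu_requests,
                "requests.memory": memory_requests,
                "pods": str(max_pods),
            }
        },
    }
    return [namespace, quota]


# A "List" object bundles both manifests into one document.
manifests = tenant_manifests("team-a", "8", "16Gi", 50)
print(json.dumps({"apiVersion": "v1", "kind": "List", "items": manifests}, indent=2))
```

The output could be saved to a file and applied with `kubectl apply -f`, once per tenant.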

Hosting Choices

The choice of underlying computing infrastructure impacts cost in several ways. Consider not only the cost of the infrastructure itself but also the cost of the labor and expertise required to run it. Managed services are convenient but come at a premium, while bare metal, on the other end of the spectrum, requires a greater skill level to use effectively. Using a mix of infrastructure types is also a valid approach. For example, cloud spot instances are a cost-effective way to host noncritical workloads, while bare metal can be used for workloads where performance and control are non-negotiable.
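A back-of-the-envelope model can help keep both halves of that equation, infrastructure and labor, in view. The sketch below is only an illustration: every price, node count and hour estimate is a made-up placeholder, and the function name is hypothetical. Which option comes out ahead depends entirely on the figures you plug in.

```python
HOURS_PER_MONTH = 730  # average hours in a month, a common billing approximation


def monthly_total(node_hourly_price, nodes, ops_hours, ops_hourly_rate):
    """Infrastructure spend plus the labor needed to operate it, per month."""
    infrastructure = node_hourly_price * nodes * HOURS_PER_MONTH
    labor = ops_hours * ops_hourly_rate
    return infrastructure + labor


# Every number below is a made-up placeholder; substitute real quotes and estimates.
scenarios = {
    "managed service": monthly_total(node_hourly_price=0.80, nodes=10,
                                     ops_hours=20, ops_hourly_rate=90),
    "on-demand bare metal": monthly_total(node_hourly_price=1.50, nodes=4,
                                          ops_hours=60, ops_hourly_rate=90),
}
for name, total in scenarios.items():
    print(f"{name}: ${total:,.0f} per month")
```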

Cost Optimization Tools

There are a variety of tools and platforms designed specifically for monitoring and managing Kubernetes costs, such as Kubecost and OpenCost. They provide resource utilization insights, highlight inefficiencies and suggest optimizations. You can integrate these tools with your workflow to make cost management more proactive and streamlined.

Leveraging Bare Metal to Optimize Kubernetes Costs

Bare metal infrastructure comes in a variety of flavors, from owning and managing servers on premises to traditional dedicated hosting to on-demand bare metal as a service.

Cost efficiency is just one of many considerations when deciding which type of infrastructure a Kubernetes cluster should run on, but it’s a big one. The different types of bare metal come with varying degrees of flexibility and management complexity (and management complexity is itself a major factor in total cost). What they all have in common is that there is no hypervisor, so you can make full use of the hardware resources and you’re not paying hypervisor software licensing fees. You can reduce your costs further by reserving bare metal capacity from a provider like Equinix for a longer period of time, which gets you meaningful discounts.

Bare Metal Key Benefits:

- Dedicated resources: There's no sharing of CPU, RAM, or storage among virtual machines. The entire server's resources are dedicated to your applications, allowing for better performance and efficiency.

- Predictable performance: Virtualized environments can suffer from the noisy neighbor effect, where VMs on the same host compete for resources. Bare metal provides consistent, predictable performance.

- Total control: With bare metal, you have total control over the hardware. You can choose the exact configuration that suits your needs, from the processor type to the amount of RAM and storage. This level of customization makes it easier to tailor your infrastructure to your workload’s needs, making for higher levels of resource utilization.

Scenarios Where Bare Metal Hosting Is Beneficial

Bare metal offers clear advantages over virtualized servers for workloads with particular needs and characteristics. Here are a few examples:

- Building and testing software that interacts directly with hardware, such as operating systems

- Designing customized private cloud implementations with their own hypervisors

- Prototyping, workshopping or running proofs of concept for performance-sensitive applications

- Critical workloads where every bit of performance matters, such as high-frequency trading and online ad marketplaces

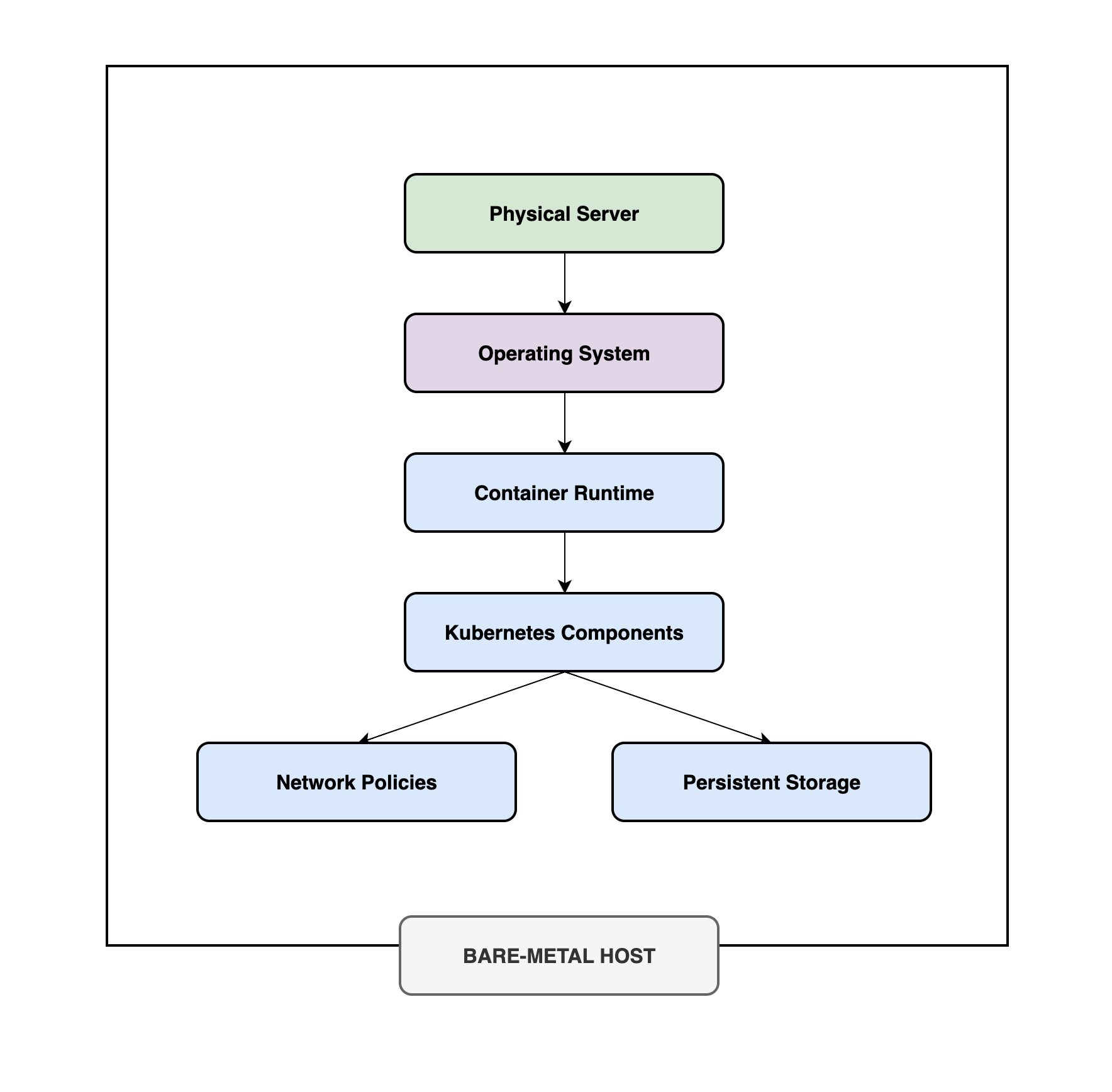

Deploying Kubernetes on Bare Metal

Deploying Kubernetes on bare metal is not a trivial task. A platform like Equinix Metal, with an API for provisioning bare metal servers on demand, a wide range of networking options and a rich set of integrations with popular Kubernetes tools, can make the process easier.

Here’s what the basic steps for deploying Kubernetes look like at a high level, using Equinix Metal as an example (a set of detailed guides on this is also available):

- Choose the right hardware: Consider the CPU, memory, storage and network requirements of your workload. Equinix offers servers with a variety of AMD, Ampere Arm and Intel CPUs and different memory, SSD and NVMe storage and network configurations.

- Prepare the operating environment: Install the OS and the necessary drivers. Ensure that the chosen OS is compatible with your Kubernetes version. Equinix Metal supports a variety of popular operating systems out of the box, and users can also install an OS of their choice with custom iPXE.

- Configure networking: Networking in a bare metal environment can be complex. You need to set up load balancers, switches and routers and configure network policies. Equinix Metal servers are provisioned with basic networking configured by default, including a route to the internet and private inter-server connectivity, while a wide variety of custom configuration options is available to more networking-savvy users.

- Install Kubernetes: You can use a Kubernetes installation tool like kubeadm or a third-party tool for this.

- Configure storage: This step is crucial for stateful apps, and there are some important questions to answer here. Do you need file or object storage? Will you use a storage service, run your own standalone storage or deploy your storage service in Kubernetes itself?

- Implement monitoring and logging: For effective management, implement robust monitoring and logging solutions. Popular tools like Prometheus and Grafana can be used with Equinix Metal.

- Consider security: Security is essential in any Kubernetes deployment. Make sure you implement firewalls, access controls and encryption. There’s a variety of security solutions validated on Equinix Metal.

- Create and manage clusters: To create and manage your Kubernetes clusters, utilize tools like Helm for package management and consider automation solutions for ongoing management.

Network Planning

Effective network planning is the key to success with bare metal hosting. Implementing robust load balancing ensures even traffic distribution, while proper ingress configuration controls how external traffic reaches the applications running in your Kubernetes clusters. (Equinix Metal has a Kubernetes integration, Cloud Controller Manager, that supports many Layer 4 load balancers for Services of type LoadBalancer.) Network policies strengthen security by controlling which pods can communicate with each other, and service discovery mechanisms let applications find one another reliably across clusters.
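To show what controlling pod communication looks like, the sketch below builds a Kubernetes NetworkPolicy that admits traffic to one set of pods only from another set of pods in the same namespace. The helper name, namespace, labels and port are hypothetical. Network policies only take effect when the cluster’s network plugin enforces them.

```python
import json


def allow_ingress_from(namespace, target_labels, client_labels, port):
    """Build a NetworkPolicy that lets only `client_labels` pods reach `target_labels` pods."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": "allow-selected-ingress", "namespace": namespace},
        "spec": {
            # Pods this policy applies to.
            "podSelector": {"matchLabels": target_labels},
            "policyTypes": ["Ingress"],
            "ingress": [
                {
                    # Only pods in the same namespace carrying these labels may connect.
                    "from": [{"podSelector": {"matchLabels": client_labels}}],
                    "ports": [{"protocol": "TCP", "port": port}],
                }
            ],
        },
    }


policy = allow_ingress_from("shop", {"app": "api"}, {"app": "frontend"}, 8080)
print(json.dumps(policy, indent=2))
```

Once a pod is selected by a policy of type Ingress, any inbound traffic not explicitly allowed by some policy is dropped, so a small allow list like this doubles as a default deny for the selected pods.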

Storage Management

Choosing the right persistent storage solution for your bare metal infrastructure is vital for data reliability. Options such as Ceph, GlusterFS and local SSDs each cater to different needs and use cases. Ceph, for instance, provides scalable block storage that can seamlessly integrate with Kubernetes, while GlusterFS offers a scalable network file system suitable for large-scale storage tasks. Meanwhile, local SSDs provide high-speed storage that is ideal for data-intensive applications. If you’re building a large-scale critical environment like a distributed hybrid multi-cloud, then a full-featured high-performance solution like Pure Storage on Equinix Metal may be the way to go.

Regular backups are crucial to safeguard against data loss, and proper management of storage volumes (monitoring, provisioning, upgrades, patches and data integrity checks) can greatly enhance performance.

Availability/Redundancy

Bare metal hosting requires careful attention to availability and redundancy. Configuring high availability ensures that your applications remain operational if a server fails. Crafting a robust disaster recovery plan further shields you from unexpected events, minimizing potential downtime. (Here’s a useful guide for implementing a highly available Kubernetes cluster control plane on Equinix Metal.)
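One piece of availability arithmetic is worth keeping in mind when deciding how many control plane servers to provision. etcd, the datastore behind the Kubernetes API, stays writable only while a majority of its members are reachable. The sketch below assumes one etcd member per control plane node (a stacked topology), and the function names are illustrative.

```python
def quorum(members):
    """Smallest majority of an etcd cluster with `members` voting members."""
    if members < 1:
        raise ValueError("need at least one member")
    return members // 2 + 1


def tolerated_failures(members):
    """How many members can fail while the cluster still has quorum."""
    return members - quorum(members)


for n in (1, 3, 4, 5):
    print(f"{n} members: quorum {quorum(n)}, tolerates {tolerated_failures(n)} failure(s)")
```

An even member count tolerates no more failures than the odd count just below it, which is why three or five control plane nodes are the usual choices.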

Conclusion

Deploying and running Kubernetes on bare metal in a way that maximizes its benefits takes considerably more experience and expertise than using traditional virtualized cloud services. The cost of that expertise, whether in-house or outsourced, should figure in the overall cost calculation.

For teams that know how to put together the compute and networking primitives that a platform like Equinix Metal offers, and how to use its powerful API and integrations in ways that cater to their specific workloads, the cost savings can be substantial. So can the gains in performance and the benefits of running in Metal metros located in global edge markets.