A Cloud Architect's Guide to Kubernetes Microservices

Why microservices are a powerful approach to architecting modern applications, and why Kubernetes is the platform of choice for doing it.

Microservices architecture is a modern approach to software development where applications are broken down into small independent components. Kubernetes was designed to manage, deploy and scale applications built this way.

This article explores the pros and cons of microservices and explains why Kubernetes is an excellent tool for the job. It also provides best practices for enhancing the experience of deploying, scaling and managing microservices in Kubernetes.

Advantages of Microservices

Microservices-based architectures enable developers to build powerful, highly efficient applications. The big advantages of the approach are:

Independent Deployment and Scaling

Microservices allow individual components and services to be deployed and scaled independently without affecting the entire application. For instance, if a specific service requires more resources, it can be scaled up to meet demand without impacting other services.

The opposite is also true; services can be scaled down to save cost when demand is low. The result is an architecture that better utilizes the available resources and can quickly respond to changing market conditions and customer needs.

Technology Stack Flexibility

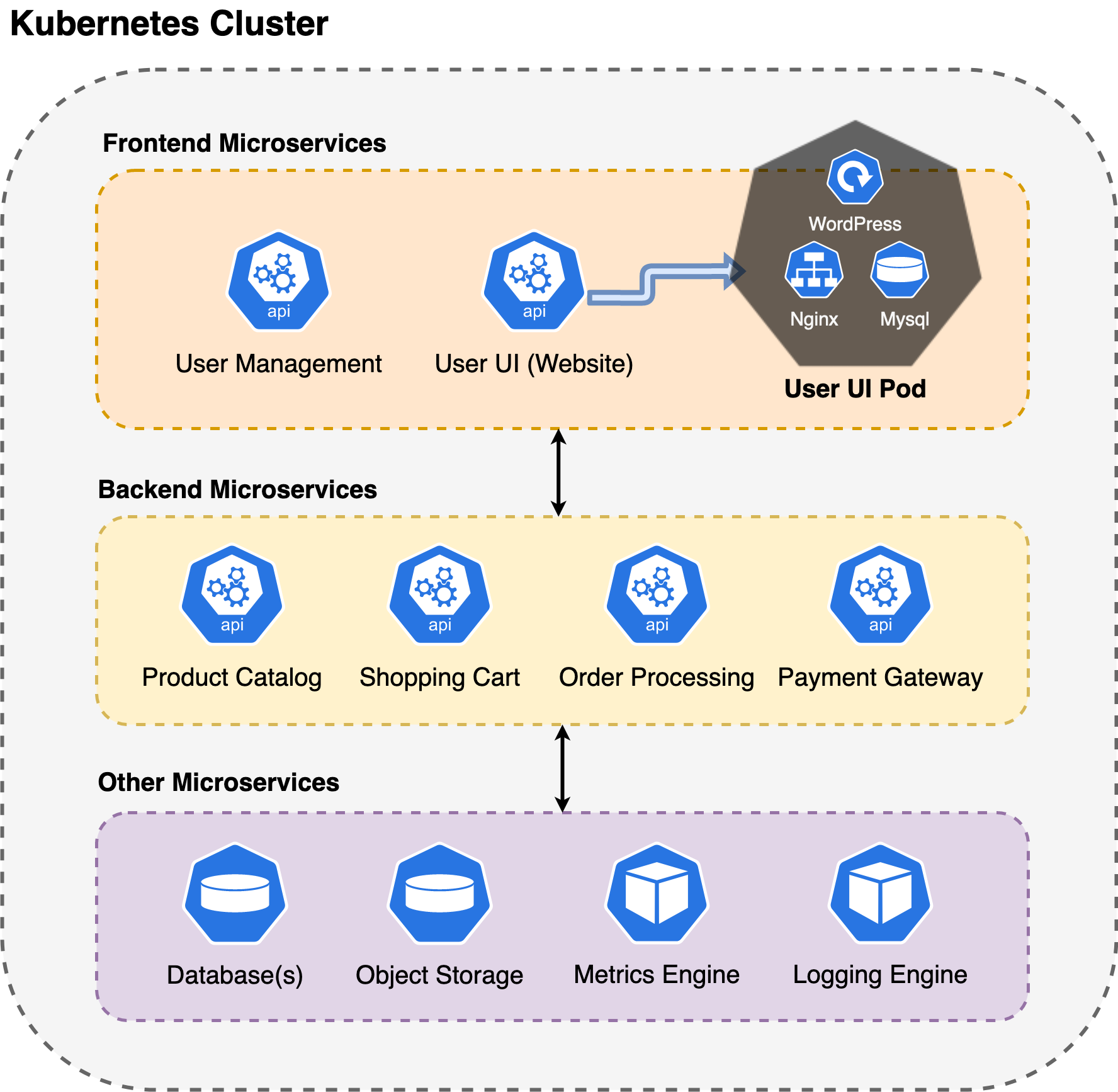

Each microservice can be built using the technologies, frameworks and languages that best suit application-specific requirements. A hypothetical e-commerce application, for example, might consist of the following microservices:

The UI here uses WordPress, Nginx and MySQL to serve the website's pages. But that’s not set in stone. If you're not comfortable developing PHP modules for WordPress, you can use a static site generator instead, such as Hugo, which is written in Go, or Gatsby, which is built on React, or any other framework that best suits your team’s skills.

Likewise, each API can be written in a different language. The Product Catalog API could be written in Python, the Shopping Cart API in Node.js and the Payment Gateway could use the SDK that best fits your needs.

This decoupling empowers developer teams to choose the most appropriate tools and technologies for each service, resulting in better performance and overall system efficiency.

Enhanced Fault Isolation

Failure of a single component in a monolithic application usually affects the entire application. The advantage of a microservices-based architecture here is obvious.

Developers can enhance fault isolation through mechanisms that minimize cascading failures, such as continuous integration/continuous delivery (CI/CD) pipelines that test each microservice before it's deployed to production, or probes that monitor microservice health. In mission-critical applications, developers can intentionally isolate critical services to facilitate debugging in case of failures.

All this improves fault isolation, leading to greater application stability, less downtime and better user experience.

Easier Maintenance and Faster Development

Breaking an application into small independent components simplifies maintenance and accelerates development. It reduces teams’ dependency on each other, allowing them to work on different services concurrently. Since a single service’s codebase is much smaller than an entire monolith’s, troubleshooting and maintenance are far easier, creating a more maintainable application and reducing technical debt.

To summarize, the biggest benefits of a microservices-based architecture are in the areas of scaling, flexibility, fault isolation and development speed. However, as with all things in life, these benefits come at a cost.

Disadvantages of Microservices

Key drawbacks of a microservices-based architecture include:

Higher Complexity

The divide-and-conquer premise of microservices inevitably increases complexity. Each microservice must be developed, deployed and managed individually, which can become cumbersome as the number of microservices grows. Additionally, your most effective developers will be those who are proficient in multiple technologies and languages, folks who can work effectively across multiple microservices.

Network Latency and Communication Overhead

In a microservices-based architecture, components communicate with each other over a network. This can introduce latency and communication overhead, negatively impacting performance, particularly in high-traffic applications. Developers must also be able to account for potential network failures and design appropriate fallback mechanisms.

Data Consistency Challenges

Ensuring data consistency across multiple microservices can be challenging. Implementing transactions and handling data synchronization across services requires careful planning and can lead to complex solutions, such as the saga pattern or event-driven architecture.
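To make the saga pattern mentioned above more concrete, here is a minimal sketch in Python. It assumes each step is a local operation paired with a compensating action that undoes it; the step names (`reserve_stock`, `create_order`, `charge_card`) are hypothetical stand-ins for calls to separate services, and a production saga would also need persistence and retries, which are left out here.

```python
from typing import Callable, List, Tuple

# Each saga step pairs an action with a compensating action that undoes it.
Step = Tuple[str, Callable[[], None], Callable[[], None]]


def run_saga(steps: List[Step]) -> List[str]:
    """Run steps in order; on failure, compensate completed steps in reverse.

    Returns a log of what happened so the outcome is easy to inspect.
    """
    log: List[str] = []
    completed: List[Step] = []
    for step in steps:
        name, action, _ = step
        try:
            action()
        except Exception as exc:
            log.append(f"{name}: failed ({exc})")
            # Undo everything that already succeeded, newest first.
            for done_name, _, compensate in reversed(completed):
                compensate()
                log.append(f"{done_name}: compensated")
            return log
        completed.append(step)
        log.append(f"{name}: done")
    return log


def charge_card() -> None:
    # Hypothetical failure standing in for a declined payment.
    raise RuntimeError("card declined")


if __name__ == "__main__":
    order_saga: List[Step] = [
        ("reserve_stock", lambda: None, lambda: None),
        ("create_order", lambda: None, lambda: None),
        ("charge_card", charge_card, lambda: None),
    ]
    for line in run_saga(order_saga):
        print(line)
```

The point of the design is that no distributed transaction is needed: each service commits locally, and consistency is restored by running compensations when a later step fails.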

Deployment and Monitoring Complexities

The sheer number of components involved in a microservices-based application complicates deployment and monitoring. Orchestrating deployment across multiple services and environments requires advanced tooling and expertise. Similarly, monitoring a distributed system demands a comprehensive approach to collecting and analyzing data from each service, which can be both resource-intensive and time-consuming.

In short, despite its many benefits, a microservices architecture is not a one-size-fits-all solution. Developers and businesses must weigh all these trade-offs before deciding if microservices are the right choice for a specific application.

Kubernetes and its capabilities should factor heavily in that decision. It addresses many of the inherent drawbacks of microservices architectures while amplifying their advantages.

Why Kubernetes Is a Great Fit for Microservices

Kubernetes' features complement the microservices pattern closely, making it a great fit for this architecture. Let’s unpack that statement.

Container Orchestration

As discussed, the number of independent components running simultaneously complicates work with microservices architectures. Kubernetes addresses this complexity by managing the deployment and scaling of containers. It ensures that each microservice has the resources it needs to run effectively while also minimizing the operational overhead associated with manually managing multiple containers.

Scalability and Autoscaling

One of the primary advantages of microservices is the ability to independently scale individual components. Kubernetes supports this through several scaling mechanisms. The most common is the Horizontal Pod Autoscaler (HPA), which automatically adjusts the number of pod replicas in a workload, such as a Deployment, based on observed demand.

Meanwhile, a DevOps team can implement the Vertical Pod Autoscaler (VPA) to set appropriate container resource requests and limits based on live usage data, and the Cluster Autoscaler (CA) to dynamically scale the underlying infrastructure to accommodate the fluctuating needs of a microservices-based application. (Here’s a handy guide for using the Cluster Autoscaler.)

Combined, its autoscaling features allow Kubernetes to handle the complex workloads associated with microservices architectures in real time.
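The core calculation behind the Horizontal Pod Autoscaler can be sketched in a few lines. The Kubernetes documentation describes the rule as `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`; the sketch below applies that rule and clamps the result to a minimum and maximum. It is a simplification: the real controller also applies a tolerance band and stabilization windows, which are omitted, and the function and parameter names are illustrative only.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 10,
) -> int:
    """Apply the documented HPA scaling rule, then clamp to [min, max]."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    raw = math.ceil(current_replicas * current_metric / target_metric)
    return max(min_replicas, min(max_replicas, raw))


if __name__ == "__main__":
    # 4 replicas at 90% average CPU with a 60% target: scale out.
    print(desired_replicas(4, 90, 60))
    # 4 replicas at 30% average CPU with a 60% target: scale in.
    print(desired_replicas(4, 30, 60))
```

Because the rule is proportional, a service running at half its target utilization is scaled to roughly half its replicas, which is how idle services release capacity.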

Self-Healing Capabilities

Kubernetes’s self-healing capabilities enable the enhanced fault isolation of microservices. Kubernetes automatically monitors pods' health and restarts failed containers, ensuring that the system remains operational overall even as some of its components fail. This significantly reduces the risk of system-wide outages, improving application resilience.

Load Balancing and Service Discovery

Microservices often face network latency and communication overhead challenges. Kubernetes addresses these issues by offering built-in load balancing and service discovery. Load balancing distributes traffic evenly among microservice instances to avoid performance bottlenecks.

Service discovery enables microservices to locate and communicate with one another through stable Service names resolved by the cluster's DNS, rather than through hard-coded pod IP addresses, reducing the complexity of interservice communication.
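As a small illustration of DNS-based service discovery, the sketch below builds the in-cluster DNS name of a Service, which follows the pattern `<service>.<namespace>.svc.<cluster-domain>`. It assumes the default cluster domain `cluster.local`; the service name `product-catalog`, the namespace `shop` and the port are hypothetical.

```python
def service_dns_name(
    service: str,
    namespace: str = "default",
    cluster_domain: str = "cluster.local",  # default; clusters can override it
) -> str:
    """Return the fully qualified in-cluster DNS name of a Service."""
    return f"{service}.{namespace}.svc.{cluster_domain}"


def service_url(service: str, port: int, namespace: str = "default") -> str:
    """Build a base URL another microservice could call inside the cluster."""
    return f"http://{service_dns_name(service, namespace)}:{port}"


if __name__ == "__main__":
    print(service_url("product-catalog", 8080, namespace="shop"))
```

A caller such as the Shopping Cart only needs this stable name; Kubernetes routes each request to one of the healthy pods behind the Service.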

Declarative Configuration and Versioning

Managing data consistency and deployment complexities can be challenging in a microservices environment. Kubernetes uses a declarative configuration approach, allowing developers to describe the system's desired state in configuration files. This approach simplifies the deployment process and ensures that all components are in sync. Furthermore, versioning of configuration files helps track changes and enables rolling back to previous versions if needed, promoting a consistent and predictable deployment process.
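To show what "describing the desired state" looks like in practice, here is a hedged sketch that builds a Deployment manifest as a Python dictionary and prints it as JSON, a format `kubectl apply -f` accepts alongside YAML. The service name, image reference and port are hypothetical; only the manifest field names come from the Kubernetes `apps/v1` API.

```python
import json
from typing import Any, Dict


def deployment_manifest(
    name: str, image: str, replicas: int = 3, port: int = 8080
) -> Dict[str, Any]:
    """Describe the desired state: `replicas` copies of one container image."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            # The selector must match the pod template's labels.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {
                            "name": name,
                            "image": image,
                            "ports": [{"containerPort": port}],
                        }
                    ]
                },
            },
        },
    }


if __name__ == "__main__":
    # Hypothetical image reference used purely for illustration.
    manifest = deployment_manifest(
        "product-catalog", "registry.example.com/shop/product-catalog:1.4.2"
    )
    print(json.dumps(manifest, indent=2))
```

Keeping the generated file in version control gives you the change history described above: rolling back means reapplying an earlier revision of the same file.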

High Availability

High availability (HA) ensures continuous operation of a Kubernetes cluster and all the microservices that run on it despite failures. This is achieved by distributing and replicating resources, namely the control plane components (API server, etcd, controller manager and scheduler), worker nodes and the microservices themselves, across multiple nodes and availability zones. As a result, Kubernetes minimizes downtime, maintains consistent performance and ensures a seamless user experience.

As you might expect, HA is crucial for mission-critical applications that require uninterrupted service and reliable infrastructure, making Kubernetes a great fit for this purpose.

To illustrate why Kubernetes is a really good option for deploying and managing microservices, let’s look at an example.

Microservices Architecture Example

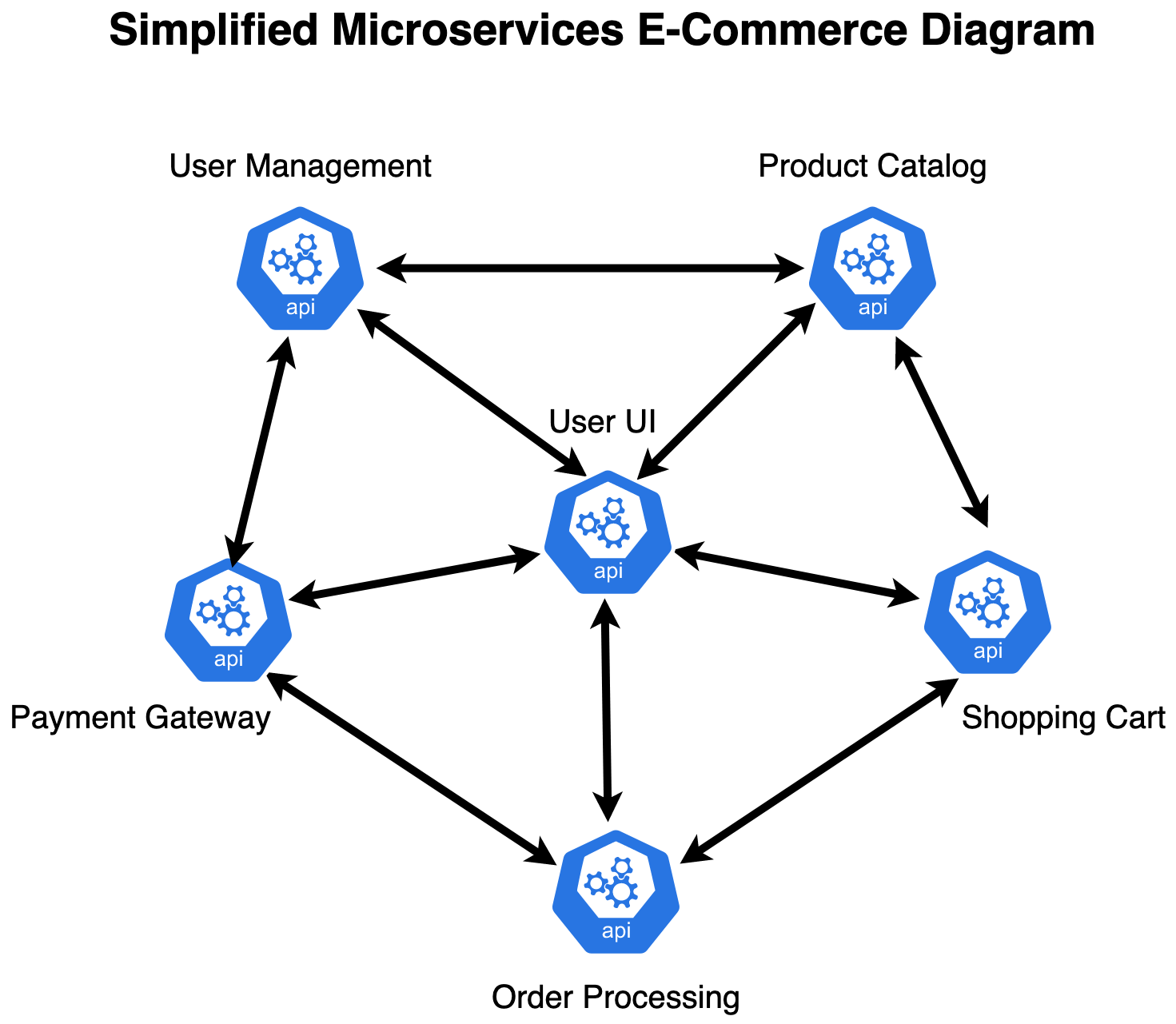

Recall our hypothetical e-commerce platform, where several microservices are running on the Kubernetes cluster.

In this simplified representation, you can see how the different microservices constantly interact with each other, forming a complicated network.

Now let’s see how Kubernetes can benefit this platform.

Frontend Microservices

In this example, there are two microservices, User UI and User Management, responsible for managing the frontend and interacting with clients.

User UI

The UI is the entry point for the platform’s visitors. It handles incoming HTTPS requests, routes them to the appropriate microservices and processes the responses. In other words, this microservice integrates the platform’s various services to ensure a seamless user experience.

Given its importance, the UI must always be available. The HA functionality in Kubernetes makes that relatively simple. If necessary, you can even use a multicloud strategy to maximize performance and reliability for mission-critical apps and services.

User Management API

The User Management API handles user authentication, registration and profile management. It communicates with the database backend to store and retrieve user information. This microservice is essential to the security and privacy of user data.

The flexible nature of the microservices architecture comes in handy here. For example, you could integrate Auth0, an identity platform whose APIs fit well with microservices. It provides endpoints for users to securely log in, sign up or log out and supports multiple identity protocols, such as OpenID Connect, OAuth 2.0 and SAML.

Backend Microservices

As often happens, the backend involves a greater number of microservices. To keep the example simple, we’ll limit the discussion to the fictional microservices Product Catalog, Shopping Cart, Order Processing and Payment Gateway only. In a real production deployment the number of microservices can grow to include extra functionality, such as metrics and logs.

Product Catalog

The Product Catalog microservice manages inventory. It provides APIs for adding, editing and removing products, as well as search and filtering. This service communicates with the frontend and the database backend to ensure that product information is up to date and consistent across the platform.

Again, the microservices architecture’s flexibility makes many solutions possible. One could be to include a Redis in-memory data store to get a fast, searchable product catalog. This would be a great user experience improvement.

Shopping Cart

The Shopping Cart microservice in our example manages user sessions and their associated shopping carts. It allows users to add, remove and modify items in their cart while providing real-time pricing and availability updates. This service interacts primarily with the Product Catalog and User Management API to ensure a seamless user experience, something that requires great flexibility.

Because it’s a microservice, this API can be developed using different languages and frameworks that easily adapt to the rest of the components. Popular options include Nest.js, Spring Boot and .NET.

Order Processing

The Order Processing microservice handles the entire order lifecycle, from checkout to fulfillment. It coordinates with the Shopping Cart, Payment Gateway and other relevant services to ensure that orders are processed correctly and efficiently. It also communicates with external shipping and logistics providers to facilitate order delivery.

Given its crucial role, the scalability Kubernetes enables for microservices is especially beneficial for this microservice. Whenever e-commerce traffic spikes, say, during Christmas, Black Friday or Valentine's Day, Order Processing can scale up just enough to handle the demand.
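As a sketch of how that scaling could be configured, the snippet below builds a HorizontalPodAutoscaler manifest for the Order Processing service using the `autoscaling/v2` API. The Deployment name `order-processing`, the replica bounds and the 70% CPU target are assumptions chosen for illustration, not values from a real deployment.

```python
import json
from typing import Any, Dict


def hpa_manifest(
    deployment: str, min_replicas: int, max_replicas: int, cpu_utilization: int
) -> Dict[str, Any]:
    """Build an autoscaling/v2 HPA that targets average CPU utilization."""
    if min_replicas > max_replicas:
        raise ValueError("min_replicas cannot exceed max_replicas")
    return {
        "apiVersion": "autoscaling/v2",
        "kind": "HorizontalPodAutoscaler",
        "metadata": {"name": f"{deployment}-hpa"},
        "spec": {
            # The workload whose replica count the autoscaler manages.
            "scaleTargetRef": {
                "apiVersion": "apps/v1",
                "kind": "Deployment",
                "name": deployment,
            },
            "minReplicas": min_replicas,
            "maxReplicas": max_replicas,
            "metrics": [
                {
                    "type": "Resource",
                    "resource": {
                        "name": "cpu",
                        "target": {
                            "type": "Utilization",
                            "averageUtilization": cpu_utilization,
                        },
                    },
                }
            ],
        },
    }


if __name__ == "__main__":
    # Hypothetical bounds: 2 replicas off-peak, up to 20 during a sale.
    print(json.dumps(hpa_manifest("order-processing", 2, 20, 70), indent=2))
```

The lower bound keeps the service available off-peak, while the upper bound caps cost during a traffic spike.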

Payment Gateway

The Payment Gateway microservice manages payment processing. It securely communicates with external payment providers (such as Visa or PayPal) to validate and process transactions.

Here Kubernetes shines again, thanks to its self-healing capabilities. As you'll see shortly, developers can implement health checks in critical microservices like this one so that failed instances are detected and replaced quickly, keeping transaction processing reliable.

Kubernetes enables developers to harness the full potential of microservices while minimizing their drawbacks. The truth is, however, that implementing Kubernetes itself comes with a hefty set of new challenges. Here are some best practices that help address those challenges.

Best Practices for Kubernetes and Microservices

In this section, you'll learn about five best practices to follow when running microservices on Kubernetes:

1. Design Microservices for Failure

Microservices should be designed to handle failures gracefully, ensuring that the entire system remains stable even during individual component failures.

Developers should implement proper error handling and fallback mechanisms within the microservices. Coupled with Kubernetes’s self-healing capabilities, this ensures that failed containers are rescheduled and restarted whenever necessary.
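One common way to implement such a fallback is to retry the failing call a few times with exponential backoff and then return a degraded result. The sketch below is a minimal version of that idea; the function name, the retry count and the "cached price" fallback are hypothetical, and a production service would typically catch narrower exception types and add a circuit breaker.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def call_with_fallback(
    primary: Callable[[], T],
    fallback: Callable[[], T],
    attempts: int = 3,
    base_delay: float = 0.1,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Try `primary` up to `attempts` times, then use `fallback`.

    The wait doubles after each failed attempt (exponential backoff).
    `sleep` is injectable so the logic can be exercised without waiting.
    """
    for attempt in range(attempts):
        try:
            return primary()
        except Exception:
            # No point waiting after the final attempt.
            if attempt < attempts - 1:
                sleep(base_delay * 2 ** attempt)
    return fallback()


if __name__ == "__main__":
    def fetch_live_price() -> str:
        # Hypothetical call to another microservice that is currently down.
        raise ConnectionError("pricing service unavailable")

    print(call_with_fallback(fetch_live_price, lambda: "cached price"))
```

In this arrangement the caller stays responsive with slightly stale data while Kubernetes restarts the failed dependency in the background.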

2. Implement Proper Health Checks and Readiness Probes

Health checks and readiness probes are crucial for maintaining the reliability and stability of microservices running on Kubernetes. Health checks, which Kubernetes calls liveness probes, allow it to monitor individual containers and restart any that become unresponsive.

Readiness probes inform Kubernetes when a container is ready to start accepting traffic. With both kinds of checks in place, Kubernetes can route traffic only to containers that are healthy and ready, improving overall system resilience.
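The two kinds of checks described above map to the `livenessProbe` and `readinessProbe` fields of a container spec. The sketch below adds both to a container definition. The endpoint paths `/healthz` and `/ready`, the timing values and the image name are assumptions for illustration; the application itself would need to serve those endpoints.

```python
from typing import Any, Dict


def with_probes(
    container: Dict[str, Any],
    port: int,
    liveness_path: str = "/healthz",
    readiness_path: str = "/ready",
) -> Dict[str, Any]:
    """Return a copy of a container spec with HTTP liveness and readiness probes."""
    probed = dict(container)
    # Liveness: after repeated failures the kubelet restarts the container.
    probed["livenessProbe"] = {
        "httpGet": {"path": liveness_path, "port": port},
        "initialDelaySeconds": 10,
        "periodSeconds": 10,
        "failureThreshold": 3,
    }
    # Readiness: while failing, the pod is removed from Service endpoints.
    probed["readinessProbe"] = {
        "httpGet": {"path": readiness_path, "port": port},
        "periodSeconds": 5,
        "failureThreshold": 3,
    }
    return probed


if __name__ == "__main__":
    # Hypothetical container for the Payment Gateway service.
    base = {"name": "payment-gateway", "image": "registry.example.com/shop/payment-gateway:2.0.0"}
    print(with_probes(base, 8443))
```

Keeping the two probes separate matters: a container that is alive but still warming up should be kept out of rotation, not restarted.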

3. Use Resource Quotas and Limits

Resource quotas and limit ranges are essential for managing resources efficiently and preventing any individual microservice from consuming more resources than it needs.

Kubernetes allows you to set resource quotas on namespaces, capping the total resources that the workloads in each namespace can consume so that every team or service gets a fair share. You can also define limit ranges that constrain the resources each container can request or use, preventing resource starvation and improving overall system stability.
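As a rough sketch of both objects, the snippet below builds a ResourceQuota and a LimitRange for one namespace. The namespace name `shop` and all CPU and memory figures are placeholder assumptions; suitable values depend entirely on the cluster and the workloads.

```python
import json
from typing import Any, Dict


def resource_quota(namespace: str) -> Dict[str, Any]:
    """Cap the total resources all pods in a namespace may request or use."""
    return {
        "apiVersion": "v1",
        "kind": "ResourceQuota",
        "metadata": {"name": f"{namespace}-quota", "namespace": namespace},
        "spec": {
            "hard": {
                "requests.cpu": "4",
                "requests.memory": "8Gi",
                "limits.cpu": "8",
                "limits.memory": "16Gi",
                "pods": "20",
            }
        },
    }


def limit_range(namespace: str) -> Dict[str, Any]:
    """Set per-container defaults and a ceiling within a namespace."""
    return {
        "apiVersion": "v1",
        "kind": "LimitRange",
        "metadata": {"name": f"{namespace}-limits", "namespace": namespace},
        "spec": {
            "limits": [
                {
                    "type": "Container",
                    # Applied when a container declares no requests or limits.
                    "defaultRequest": {"cpu": "100m", "memory": "128Mi"},
                    "default": {"cpu": "500m", "memory": "512Mi"},
                    # No single container may exceed this.
                    "max": {"cpu": "2", "memory": "2Gi"},
                }
            ]
        },
    }


if __name__ == "__main__":
    for manifest in (resource_quota("shop"), limit_range("shop")):
        print(json.dumps(manifest, indent=2))
```

The two objects work at different levels: the quota bounds the namespace as a whole, while the limit range bounds each container inside it.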

4. Implement Monitoring and Observability

Monitoring and observability are vital for diagnosing issues, understanding system performance and making informed scaling and optimization decisions. Proper monitoring and logging solutions enable teams to collect and analyze metrics, logs and traces and get valuable insights into system health and performance. This helps identify and resolve issues quickly and plan for future growth.

The Grafana observability stack is one monitoring and observability option that comes to mind, but there are dozens of observability, monitoring and analysis tools available.

5. Secure Microservices with RBAC and Network Policies

Cluster security is a top priority when deploying microservices on Kubernetes. Role-based access control (RBAC) and network policies enhance the security of your microservices by controlling access to Kubernetes resources and limiting communication between different microservices.

RBAC allows you to define and enforce access control policies based on user roles, while network policies help restrict traffic between pods, ensuring that only authorized services can communicate with one another. (If you’re interested in a deeper dive into Kubernetes security, there are other tasks that also help secure your Kubernetes cluster.)
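To illustrate both mechanisms, the sketch below builds a NetworkPolicy that admits traffic to one service only from a named client, plus a read-only RBAC Role. The `app` labels, namespace, port and role name are hypothetical. Note also that network policies only take effect when the cluster's network plugin enforces them.

```python
import json
from typing import Any, Dict


def allow_ingress_policy(
    namespace: str, target_app: str, client_app: str, port: int
) -> Dict[str, Any]:
    """Allow ingress to `target_app` pods only from `client_app` pods."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {
            "name": f"allow-{client_app}-to-{target_app}",
            "namespace": namespace,
        },
        "spec": {
            # Pods this policy protects.
            "podSelector": {"matchLabels": {"app": target_app}},
            "policyTypes": ["Ingress"],
            "ingress": [
                {
                    "from": [{"podSelector": {"matchLabels": {"app": client_app}}}],
                    "ports": [{"protocol": "TCP", "port": port}],
                }
            ],
        },
    }


def read_only_role(namespace: str) -> Dict[str, Any]:
    """A Role that can only read pods in one namespace."""
    return {
        "apiVersion": "rbac.authorization.k8s.io/v1",
        "kind": "Role",
        "metadata": {"name": "pod-reader", "namespace": namespace},
        "rules": [
            {"apiGroups": [""], "resources": ["pods"], "verbs": ["get", "list", "watch"]}
        ],
    }


if __name__ == "__main__":
    # Hypothetical rule: only Order Processing may call the Payment Gateway.
    policy = allow_ingress_policy("shop", "payment-gateway", "order-processing", 8443)
    print(json.dumps(policy, indent=2))
    print(json.dumps(read_only_role("shop"), indent=2))
```

A Role grants nothing until a RoleBinding attaches it to a user, group or service account, so the binding is where least privilege is actually enforced.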

By following these best practices, you'll improve the stability and security of your microservices and manage the resources of your Kubernetes cluster more efficiently.

Conclusion

Microservices’ benefits include better reliability, efficiency and scalability. In this article you learned why Kubernetes is an ideal orchestration platform for getting the most out of microservices, as well as some of the disadvantages you need to be aware of.

Here are some more useful resources if you want to explore Kubernetes and microservices further: