What is Cloud Adjacency?

In this article, we'll explain what cloud adjacency is and how we got here.


Cloud adjacency may sound like just another cloud buzzword, but it has real meaning, because it can deliver real value to businesses.

To understand cloud adjacency, let's look at the different places your infrastructure can live. Once we understand those, we can see where cloud adjacency fits in.

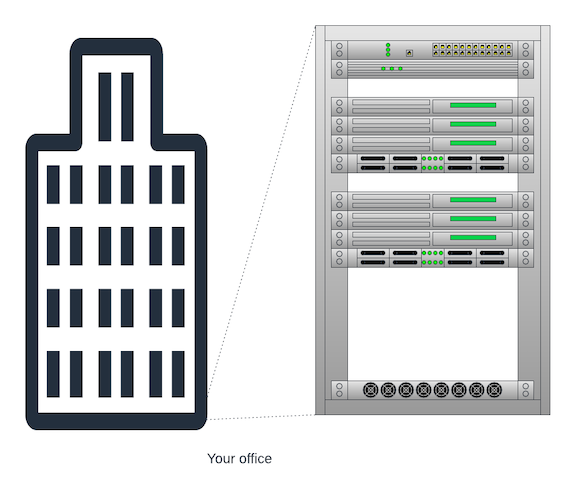

On-Premises

The most basic type of infrastructure placement is "on-premises." As its name implies, this is infrastructure that is located on your premises. This could be in your office, in a data center that you own, or in a data center that you lease, such as a colocation facility.

For a long time, this literally meant your own premises. Your servers started under your desk, then moved to a data closet, then to a secured room in the back of your building, and finally to a proper, if expensive, data center that you owned and operated yourself.

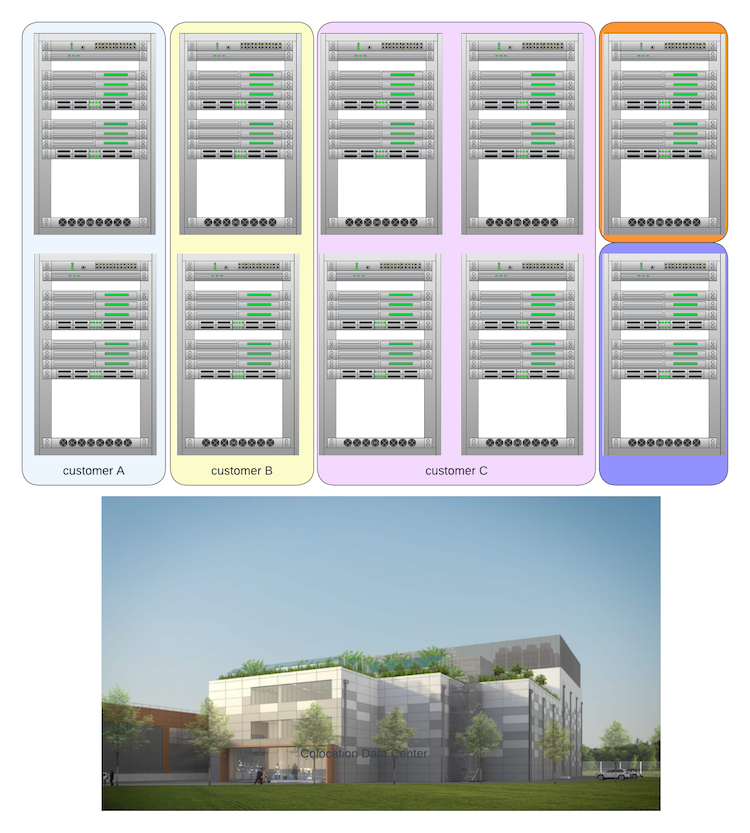

Over time, the business case for owning and operating your own data center became less and less compelling, and most companies moved their "on-premises" infrastructure to data centers run by colocation providers. For more information on colocation, see our What is Colocation? article.

So the definition of "on-premises" doesn't really mean "infrastructure on the premises of a building that we own and operate," as it used to. Instead, it means:

infrastructure we own or lease, located in a data center that we either own or in which we lease space, power, cooling and connectivity.

Cloud

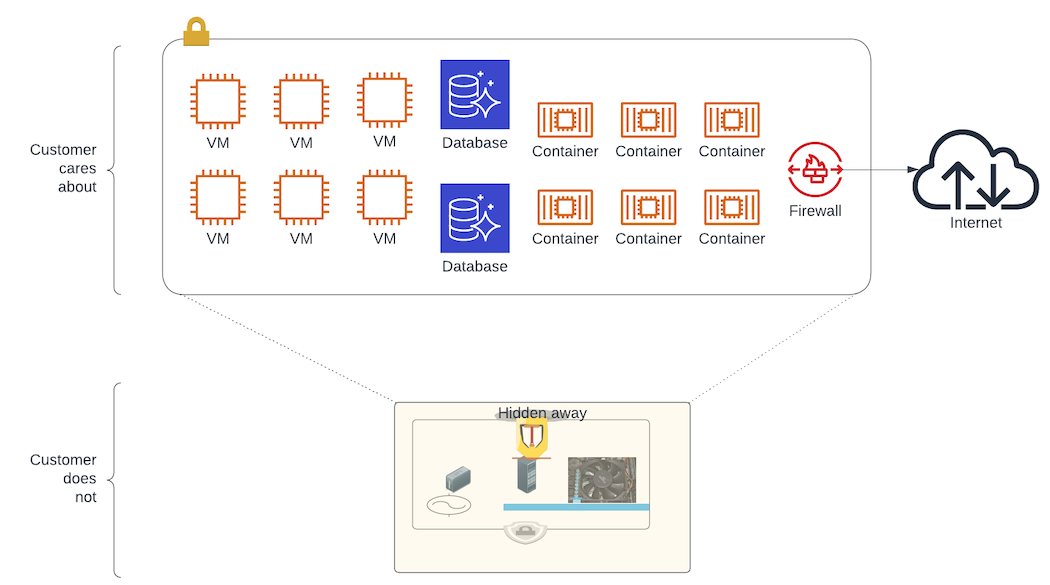

With the launch of AWS EC2 in 2006, the cloud as we know it was born. In the cloud, you neither own nor lease physical servers, and you do not build the networks that connect them to each other and to external sites, such as partners or the Internet.

Instead, you lease computing resources (CPU, memory, storage) from a cloud provider. You do not know which physical server your instances run on, which rack it sits in, or how it is connected. You do not think about routers, cross-connects, switches and cables. You just get computing resources and connectivity.

Of course, there are other definitions of cloud, and one can quibble with the one we provided above, but this is more than accurate enough to provide a contrast with on-premises.

The cloud is the precise opposite of on-premises. You do not own or lease any physical infrastructure; you probably do not even know where the infrastructure physically is located, other than a general region. You cannot rack, power and connect devices, no matter how much you may want to. Finally, you are restricted to the computing resources and connectivity that the cloud provider offers.

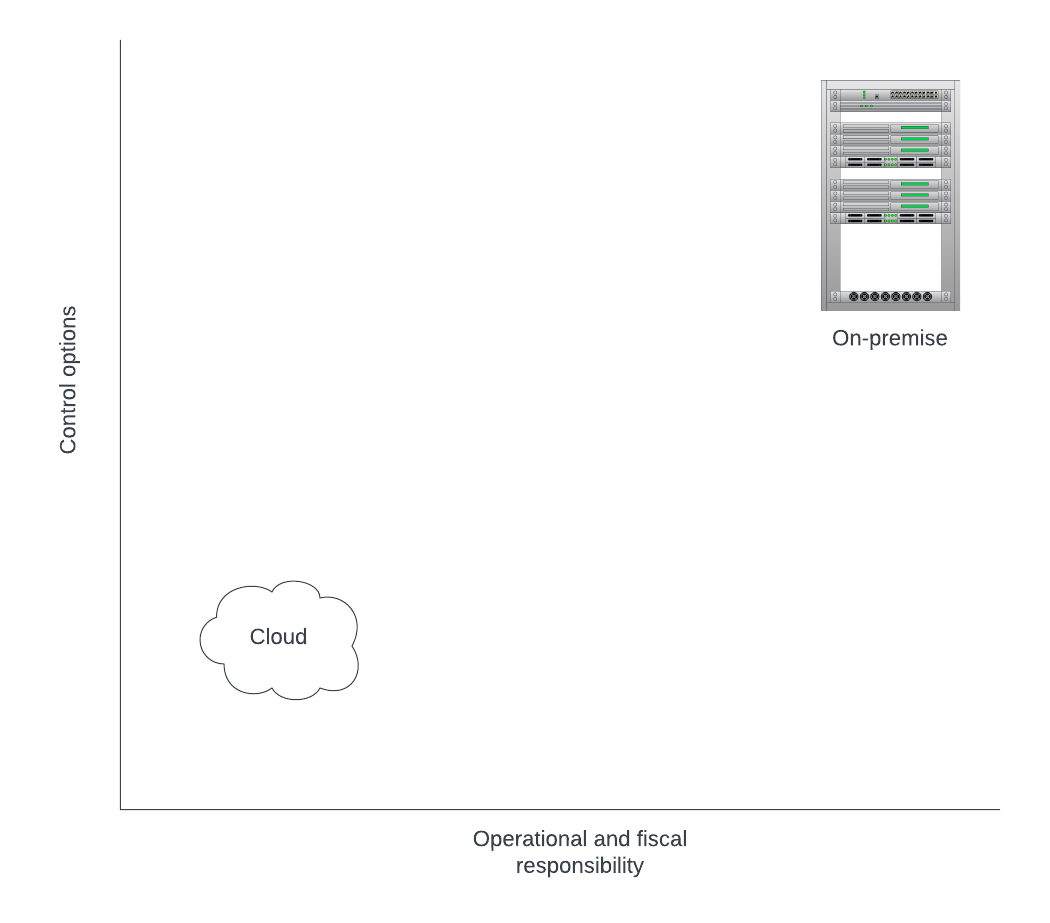

Cloud and on-premises really are polar opposites. One gives you all of the options you want, and all of the operational responsibilities and fiscal ownership -- like purchasing and leasing -- that come with it. The other gives you a limited (if large) set of options, and takes care of all of the operational management, rolled into a single hourly or monthly fee.

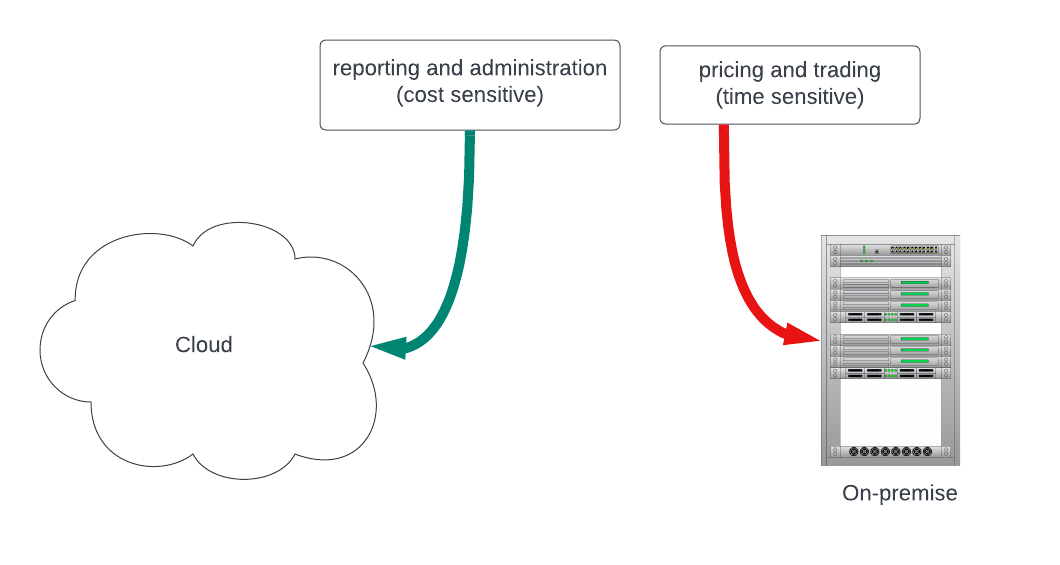

What happens if neither quite fits your needs? Suppose, for example, that you are a foreign exchange (FX) trading firm. On the one hand, you must be in extremely close network proximity to all of the other FX trading firms, and your processing is so timing-sensitive that microseconds count. This does not work in the public cloud: virtual machines on a shared, virtualized network, in a data center 5-10 milliseconds (or more) away from the other firms, can put you out of the running.

On the other hand, that applies only to your actual pricing and trading. The bulk of your compute is likely reporting and administration: activities that have none of those constraints and benefit from rapid iteration. These are perfectly suited to the cloud.

Enter hybrid cloud.

Hybrid Cloud

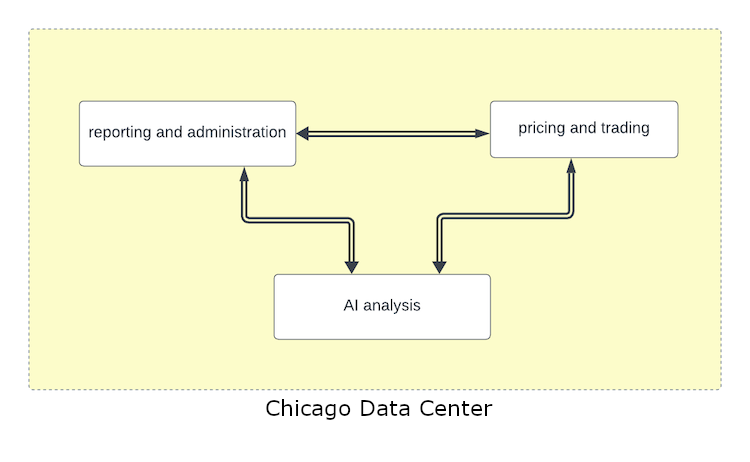

Hybrid cloud is the combination of on-premises and cloud. It is the ability to run some of your infrastructure in the cloud, and some of it on-premises. For our fictitious foreign exchange firm, it is the right balance: run pricing and trading on-premises, run reporting and administration in the cloud.

Hybrid cloud also can include using multiple cloud providers. For example, you may use AWS for your reporting and administration, Google Cloud for your machine learning and AI, and Azure for your office productivity, while all the super-low-latency activities happen on-premises.

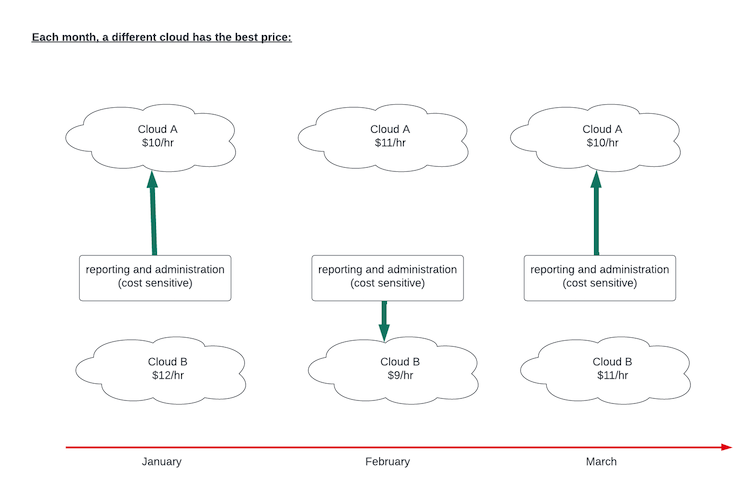

Alternatively, you might run the same workloads in two or three different clouds. This allows price arbitrage: you run on whichever cloud is cheaper at the moment and switch when prices change. It also lets you manage risk better. If cloud provider A has a major outage, or you have a falling out with them, you do not need to spend a year figuring out how to move your infrastructure to cloud provider B. You are already running there!

Hybrid cloud is a great solution for many companies. It allows you to take advantage of the best of both worlds, as well as to manage risk and get the best pricing.

We covered cloud, for when everything just fits in the public cloud. We covered on-premises, for services that must run in your own data center (or really colocation), whether due to performance, compliance or complexity reasons. Finally, we covered hybrid cloud, for the best of both worlds.

What else would we need?

Cloud Adjacency

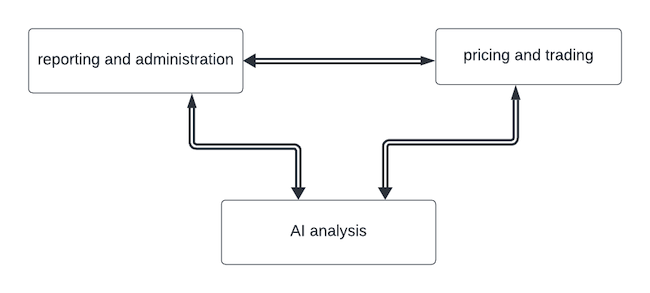

In our foreign exchange example above, we assumed most of our services could run pretty independently of each other. We ran AI in Google, administration in AWS, office productivity in Azure, and trading on-premises.

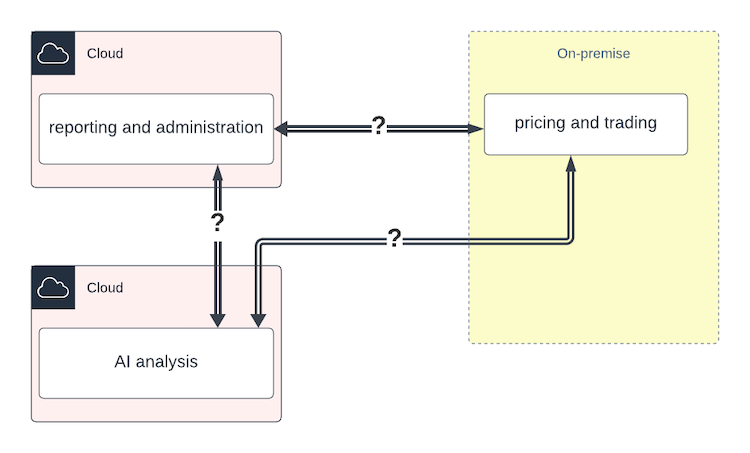

In the real world, these services are never fully independent. For example, administration and reporting cannot work without access to the data generated by the trading systems. Similarly, AI cannot work without access to the reporting and trading data.

If everything is on the same cloud, or on-premises, this isn't too bad. You control the placement of, well, everything, so just place them all in proximity to one another.

In hybrid cloud, it is a little more challenging. You need to connect these various "landing zones" (cloud A, cloud B, on-premises) together.


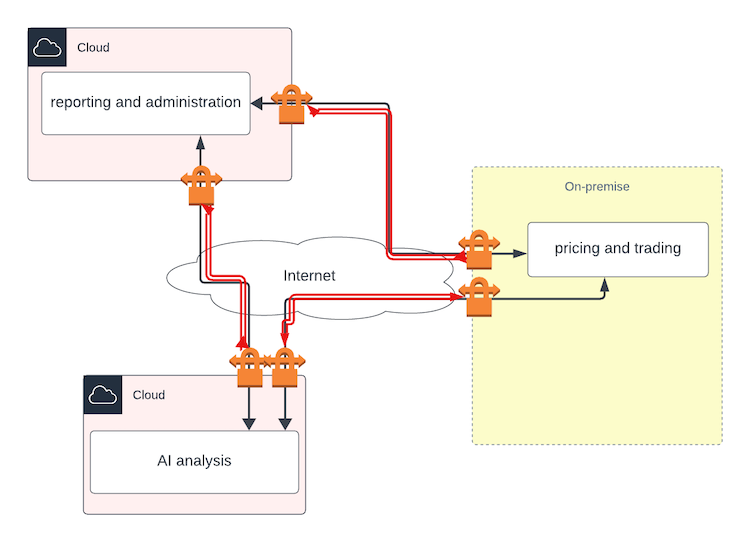

The simplest solution is to connect them over the Internet. This works, although it raises security and compliance concerns. "No problem," you say, "I'll use a VPN." In some cases, that may be enough. But if your data and processes are highly sensitive, or subject to certain regulations, even a VPN may not fit the bill.

Further, performance over the public Internet can be unpredictable.

Fortunately, all of the major clouds offer some form of private connection, such as AWS Direct Connect or Google Cloud Interconnect, so you can run a private link from your Virtual Private Cloud (VPC) in the public cloud to your private data centers.

This is definitely an improvement, and works in many use cases. It likely will solve most of your security and compliance issues, with the right setup and configuration.

So what else is left? Three issues:

- connectivity

- performance

- cost

Didn't we just say that the cloud providers offer several options: the Internet, a VPN over the Internet, and a direct private connection? So why is connectivity an issue?

We already described how over the Internet, security (even with a VPN) and performance can be an issue for many use cases. That leaves private connection. This may be the best option, but it is not without its issues.

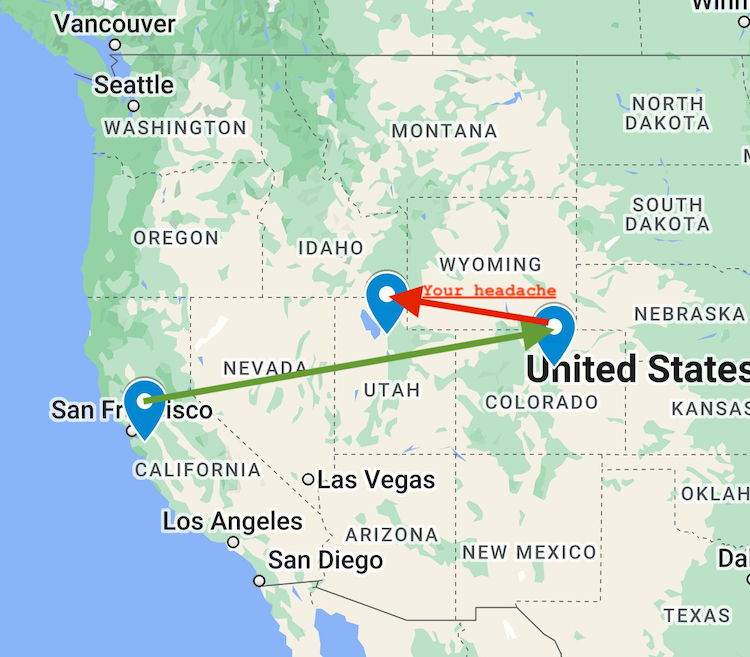

First, let's look at connectivity. You need to be where your connections terminate. AWS, for example, publishes a list of Direct Connect locations by region. There are many, but they are not infinite. There is no location, for example, in Salt Lake City, UT, and only one in Denver, CO. What if your on-premises location (most likely your colocation provider) is in Salt Lake City, or at a different facility in Denver? You are out of luck.

Sure, you can then buy a connection between the sites, for example between your colo in Salt Lake City and the nearest AWS Direct Connect location (probably Denver), but that is one more circuit to purchase and one more link to maintain.

Second, there is latency. If you are running services in a us-west region of your cloud provider, and your colocation is in Equinix CH1 in Chicago (a very common location), you are looking at roughly 50 milliseconds of round-trip latency. That may not sound like much, but it adds up quickly: a process that makes 20 sequential round trips across that link spends a full second just waiting on the network. Your processes take time to run anyway; do you really want to add that latency? In some cases it will be fine, but in many others it will not.
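To see how quickly that latency adds up, here is a back-of-the-envelope sketch. It assumes a hypothetical job that makes a fixed number of sequential, dependent round trips between the cloud and the colocation site. The 50 ms figure comes from the Chicago example above; the 1 ms "adjacent" figure and the job shape (200 ms of compute, 20 round trips) are illustrative assumptions, not measurements.

```python
def job_time_ms(compute_ms: float, round_trips: int, rtt_ms: float) -> float:
    """Total wall-clock time for a job whose round trips happen one after another.

    Each round trip must finish before the next one starts, so network
    latency adds linearly on top of the compute time.
    """
    return compute_ms + round_trips * rtt_ms


# Hypothetical job: 200 ms of compute plus 20 dependent queries across the link.
COMPUTE_MS = 200.0
ROUND_TRIPS = 20

cross_country = job_time_ms(COMPUTE_MS, ROUND_TRIPS, rtt_ms=50.0)  # Chicago <-> us-west
adjacent = job_time_ms(COMPUTE_MS, ROUND_TRIPS, rtt_ms=1.0)        # assumed adjacent site

print(f"cross-country: {cross_country:.0f} ms, adjacent: {adjacent:.0f} ms")
```

In this made-up case the network, not the compute, dominates: the cross-country job takes more than five times as long as the adjacent one.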

Third, there is cost. Cloud providers typically charge for data leaving a region, and often bill traffic between availability zones in the same region as well (little secret: Equinix Metal does not charge for traffic between facilities in the same metro). Continuing our previous example, you are going to pay every time traffic leaves your us-west region.
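A rough monthly estimate makes the cost point concrete. The sketch below sums transfer charges across a few traffic flows between a cloud region and a colocation site. The flow names, volumes and per-GB rates are all hypothetical placeholders, not any provider's published prices; pricing inbound traffic at zero is likewise only an illustrative assumption.

```python
from dataclasses import dataclass


@dataclass
class Flow:
    name: str
    gb_per_month: float
    usd_per_gb: float  # hypothetical rate, not a real price list


def monthly_transfer_cost(flows: list[Flow]) -> float:
    """Sum the billed transfer cost of every flow, in US dollars."""
    return sum(f.gb_per_month * f.usd_per_gb for f in flows)


flows = [
    Flow("cross-zone replication", gb_per_month=2_000, usd_per_gb=0.01),
    Flow("us-west -> colocation", gb_per_month=5_000, usd_per_gb=0.02),
    Flow("colocation -> us-west", gb_per_month=5_000, usd_per_gb=0.0),  # assumed free here
]

for f in flows:
    print(f"{f.name:<25} ${f.gb_per_month * f.usd_per_gb:>8.2f}")
print(f"{'total':<25} ${monthly_transfer_cost(flows):>8.2f}")
```

Swapping in your provider's actual rates and your measured volumes turns this into a quick sanity check on whether a placement change, such as keeping replication within one metro, is worth it.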

All of the above adds up to a lot of complexity and cost for what may be poor performance.

How do we get past that? Cloud adjacency.

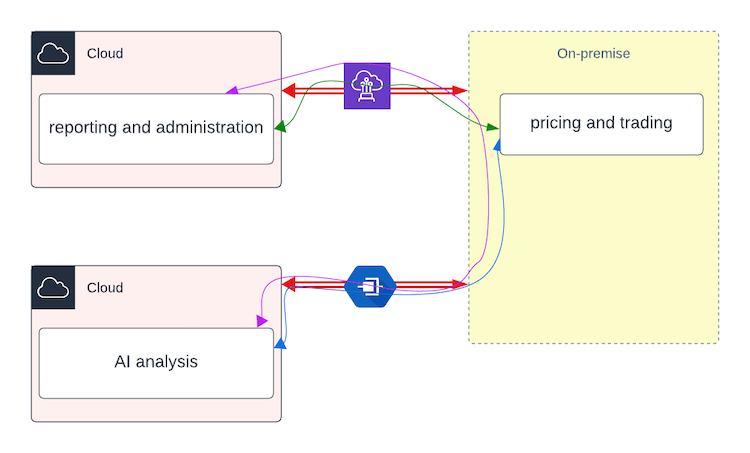

Cloud adjacency, then, is not a new architecture for deploying your infrastructure; it is an optimized way of thinking about where you place your infrastructure in a hybrid cloud architecture.

For performance, place your cloud assets as close as possible to your colocation, or your colocation as close as possible to your cloud. If you are running cloud services in us-west, then Equinix's range of Silicon Valley data centers (SV*) is a much better choice than something in the Midwest or on the East Coast, let alone Europe or Asia, and vice versa.
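Physics sets a floor on how much placement can help. Light in optical fiber travels at roughly 200,000 km/s (about two-thirds of its speed in a vacuum), so every 100 km of fiber adds at least about 1 ms of round-trip time. The sketch below uses that rule of thumb to compare candidate colocation metros. It assumes a cloud region located in Silicon Valley, and the distances are approximate straight-line figures chosen for illustration. Real fiber paths are longer, so measured latency will be higher.

```python
FIBER_KM_PER_MS = 200.0  # ~200,000 km/s: light in fiber is about 2/3 of c


def min_rtt_ms(fiber_km: float) -> float:
    """Lower bound on round-trip time over a fiber path of the given length."""
    return 2 * fiber_km / FIBER_KM_PER_MS


# Approximate distances from each candidate metro to a cloud region assumed
# to be in Silicon Valley (illustrative figures only).
candidates_km = {
    "Silicon Valley": 20,
    "Chicago": 3_000,
    "New York": 4_100,
}

for site, km in sorted(candidates_km.items(), key=lambda kv: kv[1]):
    print(f"{site:<15} >= {min_rtt_ms(km):5.1f} ms RTT")

best = min(candidates_km, key=candidates_km.get)
print(f"closest candidate: {best}")
```

The Chicago floor of about 30 ms sits below the roughly 50 ms cited earlier because real routes are not straight lines and pass through network equipment. The floor shows that no amount of tuning will beat distance.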

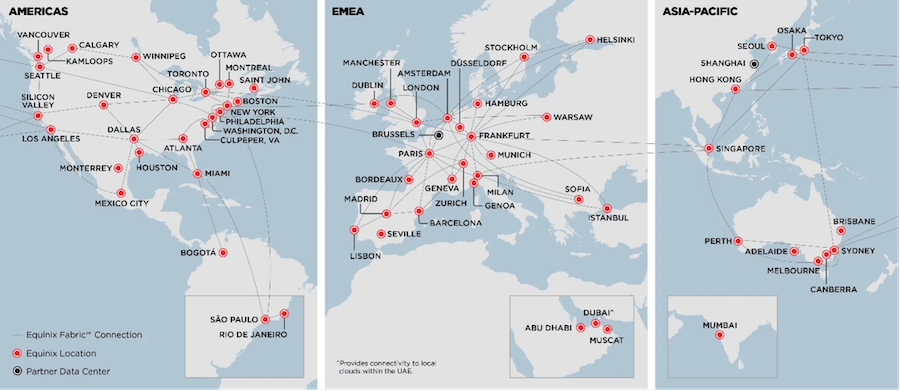

For connectivity, place your infrastructure in a data center with solid direct connectivity to the cloud providers you want to use. Often, those locations overlap between cloud providers. Unsurprisingly, Equinix's NY5 in the New York region and LD5 in the London region are very popular: they are major financial services colocation sites, which makes them valuable to all cloud providers. Sometimes, however, the locations do not overlap, and you have to pick. There are also global mesh network providers with excellent connectivity across a much broader footprint than the official cloud connection locations. For example, Equinix Fabric provides direct connectivity to Equinix data centers across the globe and to cloud providers across the industry.
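Choosing a site for connectivity boils down to a set-intersection question: which facilities offer direct on-ramps to every cloud you need? Here is a minimal sketch. The on-ramp table uses hypothetical site names and provider lists, not real availability data. In practice you would fill it from each provider's published location list.

```python
# Hypothetical on-ramp table: facility -> clouds with a direct connection there.
ON_RAMPS: dict[str, set[str]] = {
    "site-a": {"aws", "gcp", "azure"},
    "site-b": {"aws", "azure"},
    "site-c": {"gcp"},
}


def sites_covering(required: set[str], on_ramps: dict[str, set[str]]) -> list[str]:
    """Return the facilities that directly reach every required cloud, sorted by name."""
    return sorted(site for site, clouds in on_ramps.items() if required <= clouds)


print(sites_covering({"aws", "azure"}, ON_RAMPS))  # both site-a and site-b qualify
print(sites_covering({"aws", "gcp"}, ON_RAMPS))    # only site-a qualifies
```

An empty result is the "you have to pick" case. A mesh network provider can sometimes close that gap.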

Finally, for cost, you want to minimize the amount of data that moves in two different planes:

- Between cloud provider regions (and zones)

- Between cloud provider regions and your colocation

You will pay for all of that traffic, which can add up significantly. Design your software so that as much data processing as possible happens within each "landing zone." For example, reduce and summarize data using your colocation storage and compute, then send the much smaller results to the cloud for reporting. Or run your machine learning models in the cloud and send only the results to your adjacent colocation.
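The "process locally, ship less" idea can be sketched in a few lines. Here, a hypothetical batch of trade records is summarized per symbol in the colocation landing zone, and only the summary would cross the billed link. The record format, field names and prices are invented for illustration. VWAP is the volume-weighted average price.

```python
import json
from collections import defaultdict


def summarize(trades: list[dict]) -> dict[str, dict]:
    """Collapse raw trades into per-symbol count, total quantity and VWAP."""
    acc: dict[str, dict] = defaultdict(lambda: {"count": 0, "qty": 0, "notional": 0.0})
    for t in trades:
        s = acc[t["symbol"]]
        s["count"] += 1
        s["qty"] += t["qty"]
        s["notional"] += t["qty"] * t["price"]
    return {
        sym: {"count": s["count"], "qty": s["qty"], "vwap": s["notional"] / s["qty"]}
        for sym, s in acc.items()
    }


# Hypothetical raw records produced by the trading systems in the colocation site.
trades = [
    {
        "id": i,
        "symbol": "EURUSD" if i % 2 else "GBPUSD",
        "qty": 1_000 + i,
        "price": (1.08 if i % 2 else 1.27) + i * 1e-7,
    }
    for i in range(10_000)
]

raw_bytes = len(json.dumps(trades).encode())
summary_bytes = len(json.dumps(summarize(trades)).encode())
print(f"raw: {raw_bytes:,} bytes, summary: {summary_bytes:,} bytes")
```

Whether a summary like this is enough depends on what the cloud side actually needs. The point is that the reduction happens before the data crosses the link, not after.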

Cloud adjacency is an infrastructure deployment strategy in which you select your infrastructure placement and network connectivity to optimize for connectivity, performance and cost. Like hybrid cloud, it combines the best of colocation and one or more public clouds, but it also uses your knowledge of your application to get the best outcome for your needs.
