Understanding Kubernetes Network Policies

Unrestricted pod-to-pod communication, the Kubernetes default, doesn’t really work at scale. Here’s how to set and enforce rules for networking within a cluster as it grows.

As your Kubernetes cluster gets more complex and the number of workloads increases, managing network traffic in the cluster becomes a challenge. This is when knowledge of Kubernetes network policies is critical.

Kubernetes network policies allow you to configure and enforce a set of rules for how traffic moves between pods and services within a cluster. They give you fine-grained control over traffic, making it easier to secure, isolate and optimize communication between workloads.

Here is a walkthrough of the process for creating and managing Kubernetes network policies. We’ll go over the different components of a policy, including selectors, ingress rules and egress rules and cover different policy examples and best practices for implementing them. By the end of this guide, you’ll have a solid understanding of using Kubernetes network policies to secure and manage traffic in a cluster.

Why You Need Kubernetes Network Policies

By default, Kubernetes allows unrestricted network communication between all pods in a cluster. In other words, any pod can communicate with any other pod using its IP address, regardless of which namespace, deployment or service it belongs to. While this default behavior can be suitable for small-scale applications, it’s problematic at scale. As a cluster grows in size and complexity, unrestricted communication among pods can increase the attack surface and lead to security breaches.

Implementing Kubernetes network policies in your cluster can improve:

- Security: With Kubernetes network policies, you can specify which pods or services are allowed to communicate with each other; any other traffic to or from the selected pods is blocked. This makes it easier to prevent unauthorized access to sensitive data or services.

- Compliance: Compliance requirements are non-negotiable in industries like healthcare or financial services. Meet compliance requirements by ensuring that traffic is only allowed to flow between specific workloads.

- Troubleshooting: Make it easier to troubleshoot networking issues, especially in large clusters, by providing visibility into which pods and services should be communicating with each other. Policies can also help you pinpoint the source of networking issues, facilitating faster resolution.

Kubernetes Network Policy Components

A robust network policy includes:

- Policy types: There are two types of Kubernetes network policies: ingress and egress. Ingress policies allow you to control incoming traffic to a pod, while egress policies allow you to control outgoing traffic from a pod. They’re specified in the policyTypes field of the NetworkPolicy resource.

- Ingress rules: These define the incoming traffic policy for pods, specified in the ingress field of the NetworkPolicy resource. You can define the source of the traffic, which can be a pod, a namespace, or an IP block, as well as the destination port or ports that the traffic is allowed to access.

- Egress rules: These define the outgoing traffic policy for pods. Here, you’ll specify the destination of the traffic, which can be a pod, a namespace, or an IP block, as well as the destination port or ports that the traffic is allowed to access.

- Pod selectors: These select the pods to which you want to apply a policy. Specify labels for the selectors, and the pods that match the selector are then subject to the rules specified in the policy.

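- Namespace selectors: Similar to pod selectors, but used inside ingress and egress rules to select the namespaces whose pods are allowed as traffic sources or destinations. The policy itself always applies to pods in its own namespace.

- IP block selectors: IP block selectors specify a range of IP addresses to allow traffic to or from. You can use CIDR notation to specify the range of IP addresses, and the except field to carve out sub-ranges that should not be allowed.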
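To see how these components fit together, here is a minimal sketch that writes a single manifest using each of them. Everything in it is illustrative: the policy name, the namespace, the app and team labels, the ports and the CIDR ranges are assumptions, not values from this tutorial.

```shell
# Write an illustrative NetworkPolicy manifest to a local file.
# All names, labels, ports and CIDRs below are hypothetical.
cat > example-network-policy.yaml <<'EOF'
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: example-policy
  namespace: example-namespace
spec:
  podSelector:                 # pods this policy applies to
    matchLabels:
      app: api
  policyTypes:
    - Ingress
    - Egress
  ingress:
    - from:
        - podSelector:         # pods in the policy's own namespace
            matchLabels:
              app: web
        - namespaceSelector:   # all pods in matching namespaces
            matchLabels:
              team: monitoring
      ports:
        - protocol: TCP
          port: 8080
  egress:
    - to:
        - ipBlock:             # allowed range, minus the exception
            cidr: 10.0.0.0/16
            except:
              - 10.0.5.0/24
      ports:
        - protocol: TCP
          port: 5432
EOF
```

Each entry under from or to is an alternative, so this sketch would admit traffic from either the app: web pods or any pod in a team: monitoring namespace.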

Implementing Network Policies

Now let’s get into creating, updating and deleting network policies in Kubernetes. This tutorial walks you through the creation of three demo applications: a frontend, a backend and a database. Using these examples, you’ll learn how to restrict and allow network traffic between applications.

First, you need to have a running cluster. To create a local cluster on your machine, I recommend using minikube. Because minikube doesn’t support network policies out of the box, start minikube with a network plugin like Calico or Weave Net.

Use the following command to start a minikube cluster with network policy support:

minikube start --network-plugin=cni --cni=calico
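Network policies are silently ignored if no plugin is enforcing them, so it can be worth confirming that Calico is up before continuing. The helper below is a sketch: the function name is hypothetical, and it assumes the Calico node pods run in the kube-system namespace with the label k8s-app=calico-node, as in the standard Calico manifests.

```shell
# Block until the Calico node pods report Ready, or give up after two minutes.
# Assumption: Calico pods are labeled k8s-app=calico-node in kube-system.
wait_for_calico() {
  kubectl wait pod \
    --namespace=kube-system \
    --selector=k8s-app=calico-node \
    --for=condition=Ready \
    --timeout=120s
}

# Usage: wait_for_calico
```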

With the cluster running, create a dedicated namespace to keep the tutorial’s resources organized:

kubectl create namespace network-policy-tutorial

Create three sample pods (Frontend, Backend and Database) in the namespace:

kubectl run backend --image=nginx --namespace=network-policy-tutorial

kubectl run database --image=nginx --namespace=network-policy-tutorial

kubectl run frontend --image=nginx --namespace=network-policy-tutorial

Verify that the pods are running:

kubectl get pods --namespace=network-policy-tutorial

You should get the following response:

NAME       READY   STATUS    RESTARTS   AGE
backend    1/1     Running   0          12s
database   1/1     Running   0          12s
frontend   1/1     Running   0          22s

Create the respective services for the pods:

kubectl expose pod backend --port 80 --namespace=network-policy-tutorial

kubectl expose pod database --port 80 --namespace=network-policy-tutorial

kubectl expose pod frontend --port 80 --namespace=network-policy-tutorial

Get the IP of the respective services:

kubectl get service --namespace=network-policy-tutorial

You should get a response similar to the following:

NAME       TYPE        CLUSTER-IP              EXTERNAL-IP   PORT(S)   AGE
backend    ClusterIP   <BACKEND-CLUSTER-IP>    <none>        80/TCP    24s
database   ClusterIP   <DATABASE-CLUSTER-IP>   <none>        80/TCP    24s
frontend   ClusterIP   <FRONTEND-CLUSTER-IP>   <none>        80/TCP    24s

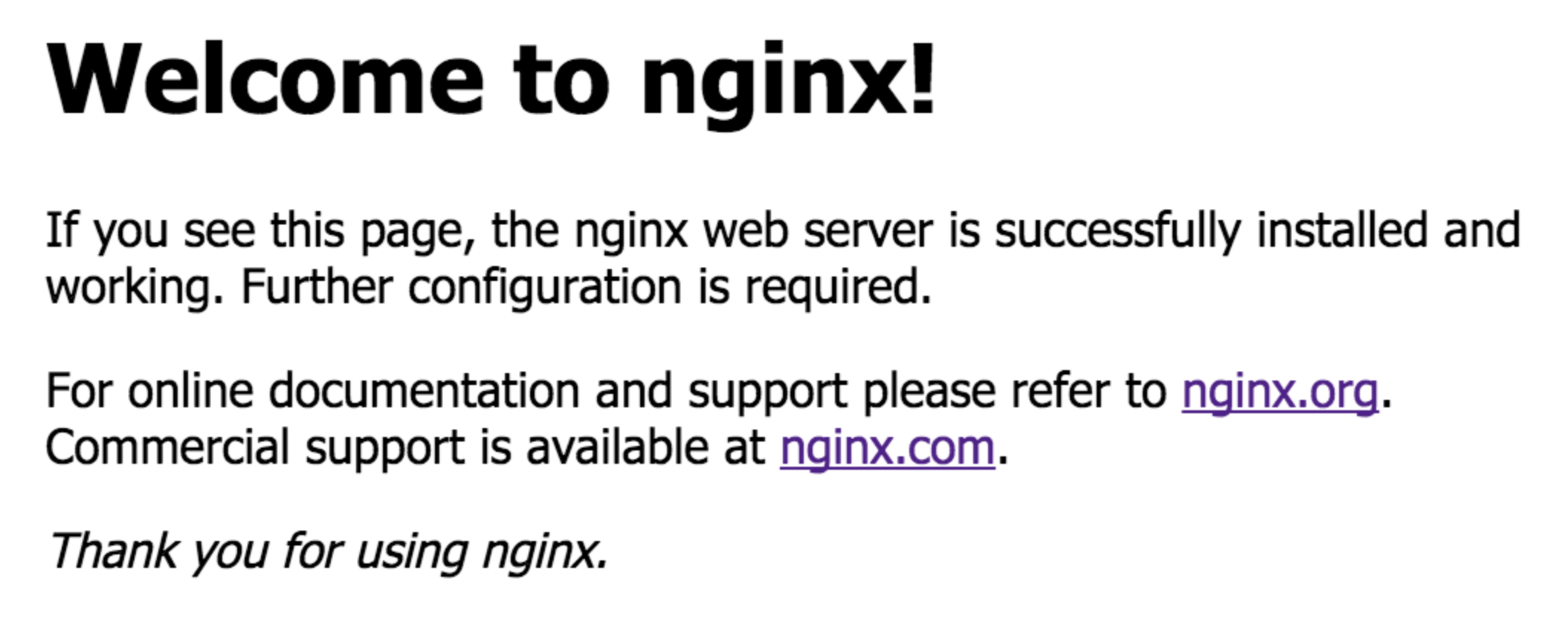

Check if the frontend can communicate with the backend and database:

kubectl exec -it frontend --namespace=network-policy-tutorial -- curl <BACKEND-CLUSTER-IP>

kubectl exec -it frontend --namespace=network-policy-tutorial -- curl <DATABASE-CLUSTER-IP>

Replace <BACKEND-CLUSTER-IP> and <DATABASE-CLUSTER-IP> with their respective IPs. Find them by running kubectl get service --namespace=network-policy-tutorial.

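Because both pods run nginx, each command should return the HTML of the default nginx welcome page, confirming that traffic between the pods is unrestricted.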

Example 1: Restricting Traffic in a Namespace

This first example demonstrates restricting traffic within the network-policy-tutorial namespace. You’ll prevent the frontend application from communicating with the backend and database applications.

To begin, create a namespace-default-deny.yaml policy that denies all traffic in the namespace:

apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: namespace-default-deny
  namespace: network-policy-tutorial
spec:
  podSelector: {}
  policyTypes:
    - Ingress
    - Egress

Then run the following command to apply the network policy configuration in the cluster:

kubectl apply -f namespace-default-deny.yaml --namespace=network-policy-tutorial

Now try to access the backend and database from the frontend again; you’ll see that it’s no longer possible for the frontend to communicate with the backend and database and vice versa.

kubectl exec -it frontend --namespace=network-policy-tutorial -- curl <BACKEND-CLUSTER-IP>

kubectl exec -it frontend --namespace=network-policy-tutorial -- curl <DATABASE-CLUSTER-IP>

Example 2: Allowing Traffic from Specific Pods

Now, let’s allow the following traffic flow in the cluster:

Frontend -> Backend -> Database

This allows the frontend to send outbound traffic only to the backend, and the backend to receive inbound traffic only from the frontend. Likewise, the backend can send outbound traffic only to the database, and the database can receive inbound traffic only from the backend.

Create a new policy, frontend-default-policy.yaml, and paste in the following code:

apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: frontend-default
  namespace: network-policy-tutorial
spec:
  podSelector:
    matchLabels:
      run: frontend
  policyTypes:
    - Egress
  egress:
    - to:
        - podSelector:
            matchLabels:
              run: backend

Then run the following command to apply the policy:

kubectl apply -f frontend-default-policy.yaml --namespace=network-policy-tutorial
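The selectors in these policies match on a run label. kubectl run adds a run=<pod-name> label to the pod it creates, which is why run: frontend selects the frontend pod. If a policy doesn't seem to take effect, checking that label is a reasonable first step. A small sketch, with a hypothetical helper name:

```shell
NAMESPACE=network-policy-tutorial

# Print the value of the "run" label on the given pod.
pod_run_label() {
  kubectl get pod "$1" --namespace="$NAMESPACE" \
    --output jsonpath='{.metadata.labels.run}'
}

# Usage: pod_run_label frontend
```

Running kubectl get pods --show-labels --namespace=network-policy-tutorial gives the same information for every pod at once.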

For the backend, create a new policy, backend-default-policy.yaml, and paste in the following code:

apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: backend-default
  namespace: network-policy-tutorial
spec:
  podSelector:
    matchLabels:
      run: backend
  policyTypes:
    - Ingress
    - Egress
  ingress:
    - from:
        - podSelector:
            matchLabels:
              run: frontend
  egress:
    - to:
        - podSelector:
            matchLabels:
              run: database

Run the command to apply the policy as before:

kubectl apply -f backend-default-policy.yaml --namespace=network-policy-tutorial

Moving on in a similar fashion for the database, create a new policy, database-default-policy.yaml, and paste in the following code:

apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: database-default
  namespace: network-policy-tutorial
spec:
  podSelector:
    matchLabels:
      run: database
  policyTypes:
    - Ingress
  ingress:
    - from:
        - podSelector:
            matchLabels:
              run: backend

Run the command to apply the policy:

kubectl apply -f database-default-policy.yaml --namespace=network-policy-tutorial

With these network policy configurations applied, the following requests receive a response:

kubectl exec -it frontend --namespace=network-policy-tutorial -- curl <BACKEND-CLUSTER-IP>

kubectl exec -it backend --namespace=network-policy-tutorial -- curl <DATABASE-CLUSTER-IP>

However, if you run these next commands instead, you won't receive a response, because these traffic flows haven't been opened in the namespace:

kubectl exec -it backend --namespace=network-policy-tutorial -- curl <FRONTEND-CLUSTER-IP>

kubectl exec -it database --namespace=network-policy-tutorial -- curl <FRONTEND-CLUSTER-IP>

kubectl exec -it database --namespace=network-policy-tutorial -- curl <BACKEND-CLUSTER-IP>
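Checking every pair by hand gets tedious, and a blocked curl request can hang for a long time before giving up. The sketch below loops over all source and destination pairs and uses curl's --max-time option to fail fast. The function names are hypothetical; the pod, service and namespace names are the ones created earlier in this tutorial.

```shell
NAMESPACE=network-policy-tutorial

# Succeed if pod $1 gets an HTTP response from address $2 within 5 seconds.
can_reach() {
  kubectl exec "$1" --namespace="$NAMESPACE" -- \
    curl --silent --output /dev/null --max-time 5 "$2"
}

# Print "allowed" or "blocked" for every source/destination pair.
check_matrix() {
  local src dst ip
  for src in frontend backend database; do
    for dst in frontend backend database; do
      [ "$src" = "$dst" ] && continue
      # Look up the cluster IP of the destination service.
      ip=$(kubectl get service "$dst" --namespace="$NAMESPACE" \
        --output jsonpath='{.spec.clusterIP}')
      if can_reach "$src" "$ip"; then
        echo "$src -> $dst: allowed"
      else
        echo "$src -> $dst: blocked"
      fi
    done
  done
}

# Usage: check_matrix
```

With the policies from this example applied, only frontend -> backend and backend -> database should be reported as allowed.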

Example 3: Combining Ingress and Egress Rules in a Single Policy

When you need to control an application’s ingress and egress traffic in your cluster, you don’t have to create separate network policies for each. Instead, you can combine both traffic flows into one network policy, as you can see in the contents of this backend-default-policy.yaml file:

apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: backend-default
  namespace: network-policy-tutorial
spec:
  podSelector:
    matchLabels:
      run: backend
  policyTypes:
    - Ingress
    - Egress
  ingress:
    - from:
        - podSelector:
            matchLabels:
              run: frontend
  egress:
    - to:
        - podSelector:
            matchLabels:
              run: database

Example 4: Restricting Egress Traffic to Specific IP Ranges

Let’s look at one more example. You can also restrict an application’s outbound traffic to specific IP ranges.

Instead of creating a new yaml configuration file, let’s update the backend-default-policy.yaml file you created earlier. You’ll replace the egress section of the yaml configuration. Instead of using podSelector to restrict egress to the database pod, you’ll use ipBlock.

Open the file and update the content of the file with the following code:

apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: backend-default
  namespace: network-policy-tutorial
spec:
  podSelector:
    matchLabels:
      run: backend
  policyTypes:
    - Ingress
    - Egress
  ingress:
    - from:
        - podSelector:
            matchLabels:
              run: frontend
  egress:
    - to:
        - ipBlock:
            cidr: <DATABASE-CLUSTER-IP>/24

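In this updated backend-default-policy.yaml configuration, the egress rule limits the backend application's outbound traffic to this IP range (<DATABASE-CLUSTER-IP>/24), which your database service falls under. Network policy rules are allow lists: an ipBlock entry permits traffic to the range rather than blocking it, and egress from the backend to any address outside the range is denied. That means that if your <DATABASE-CLUSTER-IP> is 10.10.10.10, the rule covers all addresses from 10.10.10.0 to 10.10.10.255.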
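The address range covered by a CIDR block follows from simple bit arithmetic: the prefix length fixes the leading bits, and the remaining bits run from all zeros to all ones. A minimal sketch of that calculation for IPv4, using a hypothetical helper name:

```shell
# Print the first and last IPv4 address of the CIDR block that
# contains address $1 with prefix length $2.
cidr_range() {
  local ip="$1" prefix="$2"
  local a b c d addr mask first last
  # Split the dotted address into its four octets.
  IFS=. read -r a b c d <<EOF
$ip
EOF
  addr=$(( (a << 24) | (b << 16) | (c << 8) | d ))
  mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
  first=$(( addr & mask ))                  # host bits all zero
  last=$(( first | (~mask & 0xFFFFFFFF) ))  # host bits all one
  printf '%d.%d.%d.%d %d.%d.%d.%d\n' \
    $(( first >> 24 & 255 )) $(( first >> 16 & 255 )) \
    $(( first >> 8 & 255 ))  $(( first & 255 )) \
    $(( last >> 24 & 255 ))  $(( last >> 16 & 255 )) \
    $(( last >> 8 & 255 ))   $(( last & 255 ))
}

cidr_range 10.10.10.10 24   # prints "10.10.10.0 10.10.10.255"
```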

Before you apply the configuration, check the existing network policies:

kubectl get networkpolicy --namespace=network-policy-tutorial

You should see output similar to the following:

NAME                     POD-SELECTOR   AGE
backend-default          run=backend    6m16s
database-default         run=database   5m48s
frontend-default         run=frontend   6m50s
namespace-default-deny   <none>         8m22s

Now apply the updated configuration of backend-default-policy.yaml in your cluster:

kubectl apply -f backend-default-policy.yaml --namespace=network-policy-tutorial

Notice that no new network policy was added. That’s because you didn’t change the metadata.name field; Kubernetes updated the configuration of the network policy under the same name, instead of creating a new network policy.

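Now try to access the database from the backend again. With Calico, the request likely no longer gets a response: policies are typically evaluated after the service's cluster IP has been translated to the database pod's own IP address, which falls outside the allowed range: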

kubectl exec -it backend --namespace=network-policy-tutorial -- curl <DATABASE-CLUSTER-IP>

You can use the kubectl delete command to delete a network policy. For instance, you can delete the backend-default policy like so:

kubectl delete -f backend-default-policy.yaml --namespace=network-policy-tutorial

The network policy that allowed the backend to receive traffic from the frontend has been deleted. Because the namespace-default-deny policy is still in place, the frontend application is no longer able to access the backend.

Best Practices for Using Kubernetes Network Policies

Of course, there are some best practices to keep in mind when creating network policies in Kubernetes. Let’s take a look at a few of the most important ones.

Ensure Proper Isolation

Since Kubernetes network policies allow you to control network traffic between pods, it’s important to define them well to ensure proper isolation.

The first step in ensuring proper isolation is to identify which pods should be allowed to communicate with each other and which should be isolated from each other. Then define rules to enforce these policies.

You should also:

- Use namespaces to isolate workloads from each other.

- Define network policies for each namespace that restrict traffic based on the principle of least privilege.

- Limit network traffic between pods and services to only what is necessary for their operation.

- Use labels and selectors to apply network policies to specific pods and/or services.
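Least privilege usually still needs a few deliberate exceptions. One common case: a default-deny policy that covers egress, like the one in Example 1, also blocks DNS lookups, so pods can no longer resolve service names. The sketch below writes a policy that allows DNS egress for every pod in the tutorial namespace. The policy and file names are hypothetical, and it assumes the cluster DNS pods run in kube-system with the label k8s-app: kube-dns and that the namespace carries the automatic kubernetes.io/metadata.name label, which is typical but worth verifying in your cluster.

```shell
# Write a policy that lets all pods in the namespace reach cluster DNS.
# Assumptions: DNS pods are labeled k8s-app=kube-dns in kube-system.
cat > allow-dns-egress.yaml <<'EOF'
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: allow-dns-egress
  namespace: network-policy-tutorial
spec:
  podSelector: {}
  policyTypes:
    - Egress
  egress:
    - to:
        # A single entry with both selectors: DNS pods in kube-system only.
        - namespaceSelector:
            matchLabels:
              kubernetes.io/metadata.name: kube-system
          podSelector:
            matchLabels:
              k8s-app: kube-dns
      ports:
        - protocol: UDP
          port: 53
        - protocol: TCP
          port: 53
EOF
```

Because policies are additive, this one can sit alongside a default-deny policy and opens only port 53 to the DNS pods.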

Monitor and Log Network Policy Activity

Monitoring and logging network policy activities is essential to detect and investigate security incidents, troubleshoot network issues and identify opportunities for optimization. Monitoring can also ensure that network policies are properly enforced and that there are no gaps or misconfigurations.

With kubectl get networkpolicy and kubectl describe networkpolicy, you can review which policies exist and which pods and rules each one covers. Kubernetes itself doesn’t log policy decisions, so for visibility into allowed and denied traffic, rely on your network plugin’s logging features or on third-party monitoring and logging solutions.

Scale Network Policies in Large Clusters

As your cluster starts growing, especially when you have multiple applications in the cluster, the number of pods and network policies in the cluster will increase significantly. Design your network policies such that they can scale up as your cluster grows, with more workloads and nodes.

You can achieve scalability by using selective pod labeling and pod matching rules, avoiding over-restrictive policies and using efficient network policy implementations. Don’t forget to periodically review and optimize your network policies to ensure that they’re still necessary and effective.

Evaluate Third-party Network Policy Solutions

While Kubernetes defines the NetworkPolicy API, enforcement is left to the network plugin, and some third-party solutions offer additional capabilities beyond the standard API.

Evaluate them against factors like:

- Ease of deployment

- Compatibility with your existing network infrastructure

- Performance and scalability

- Ease of use and maintenance

And of course, ensure that whatever third-party solution you work with adheres to Kubernetes API standards and is compatible with your cluster's version of Kubernetes.

Conclusion

Clearly, Kubernetes network policies are a powerful tool for securing and controlling network traffic between workloads in your cluster. They allow you to define and enforce network security policies at a granular level, ensuring proper isolation and reducing the risk of unauthorized access or data breaches.

Now that you’ve learned the basics of Kubernetes network policies, you can create and enforce policies using kubectl commands, restrict egress traffic to specific IP ranges and limit traffic between pods in a namespace. You’ve also learned some best practices for implementing network policies, such as monitoring and logging policy activity and evaluating third-party network policy solutions.

Additional Resources

If you're interested in learning more about Kubernetes networking and network policies, Kubernetes’s own documentation is of course a great place to start. Other helpful resources include Kubernetes community forums, blogs and online courses.

Remember that while Kubernetes network policies are a powerful tool, they do require careful planning to be effective. Follow best practices and leverage the right resources, and you can ensure that your network policies will provide robust security and control for your Kubernetes cluster.