Kubernetes the LogDNA Way

How we embraced portability with Kubernetes and saved more than $400k per month on our cloud bill

This is one in a series of guest posts by engineers about how and why they use Kubernetes. Today’s post features Ryan Staatz, who joined LogDNA as its fourth employee. Ryan enjoys videogames, tinkering with electronics, playing piano, and an occasional glass of High West Double Rye Whiskey.

Before visiting with the folks at Equinix Metal, I don’t think I was ever asked what problem we were solving with Kubernetes. After giving it some thought, though, I realized we were striving to overcome multiple challenges.

Portability by Way of Necessity

One of the challenges we had in the early days of LogDNA was finding the right hosting provider for our needs. Since our product is very infrastructure-dependent, we tried a few different cloud providers before going “all in” with Equinix Metal. It’s been a good fit because Equinix provides us with the fundamental building blocks we need, paired with automation and great support.

When we were a scrappy startup, we juggled credits, moving from one cloud provider to the next depending on where we had the most credit. It saved us a lot of money, but it also meant we had to migrate our platform, which wasn’t as straightforward as expected. Our first migration wasn’t smooth because we didn’t realize that certain features differed between providers. For example, the URLs that resolve to VMs did not always come with both a public and a private option.

As we grew, the performance requirements for our VMs changed, so we started managing more dedicated infrastructure. As you can imagine, reconfiguring and restarting it all was a pain. We did most of this with homegrown bash scripts, which didn’t scale.

Moving to Kubernetes

We moved to Kubernetes at the same time we migrated to Equinix Metal because we needed a way to manage multiple environments more easily. Since everything in Kubernetes is specified in YAML files, we could version-control the specific differences between environments, which simplified how we managed our infrastructure. We made a bet on a “bare metal plus Kubernetes” solution, which leveled the playing field against our competitors by allowing us to standardize our software deployment across all types of hardware.
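As a rough illustration of version-controlling only the differences between environments, the sketch below merges a shared base configuration with small per-environment overrides. The keys, values, and environment names are hypothetical and are not LogDNA's actual configuration.

```python
import copy


def deep_merge(base: dict, override: dict) -> dict:
    """Return a new dict where override values win and nested dicts merge recursively."""
    merged = copy.deepcopy(base)
    for key, value in override.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            merged[key] = deep_merge(merged[key], value)
        else:
            merged[key] = copy.deepcopy(value)
    return merged


# Hypothetical settings shared by every environment.
BASE = {
    "replicas": 2,
    "resources": {"cpu": "500m", "memory": "1Gi"},
    "log_level": "info",
}

# Hypothetical per-environment differences, small enough to review in a diff.
OVERRIDES = {
    "staging": {"log_level": "debug"},
    "production": {"replicas": 12, "resources": {"memory": "4Gi"}},
}


def render(environment: str) -> dict:
    """Build the full configuration for one environment."""
    return deep_merge(BASE, OVERRIDES[environment])
```

Calling `render("production")` keeps the base CPU setting and log level while replacing only the replica count and memory, so the per-environment file stays a short list of deliberate differences.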

Another issue I know others out there have faced is scalability. There are upper limits to how much you can scale a single Kubernetes cluster, but after defining a pod, you can scale it as much as you like, provided you have the hardware resources available in your cluster. All you have to do is tell Kubernetes how to run your workload and how many copies you’d like, and boom, your application is up and running.
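To make "how to run your workload and how many" concrete, here is a minimal sketch that builds a Kubernetes Deployment manifest as a Python dictionary and prints it as JSON, which `kubectl apply -f` accepts alongside YAML. The workload name and image are placeholders, not real LogDNA components.

```python
import json


def deployment_manifest(name: str, image: str, replicas: int) -> dict:
    """Describe one container image and how many copies of it should run."""
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,  # the "how many" part
            "selector": {"matchLabels": {"app": name}},
            "template": {  # the "how to run it" part
                "metadata": {"labels": {"app": name}},
                "spec": {"containers": [{"name": name, "image": image}]},
            },
        },
    }


# Placeholder name and image, for illustration only.
manifest = deployment_manifest("ingester", "registry.example.com/ingester:1.0", replicas=5)
print(json.dumps(manifest, indent=2))
```

Scaling is then a one-field change: raise `replicas` and re-apply, and the scheduler places the extra pods wherever the cluster has room.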

The mindset of “require and forget” is such a fantastic luxury because it frees up time otherwise spent trying to figure out which VMs should be running a given application. It’s been even easier on bare metal since Kubernetes treats each of these “big nodes” as a resource pool that can run as many containers as the machine has resources to handle. The proportionally lower overhead per node is a nice benefit.
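The point about per-node overhead can be shown with a little arithmetic. The sketch below assumes a fixed amount of CPU is reserved on every node for the OS and Kubernetes agents; the one-core reservation and the node sizes are made-up numbers chosen only to show the trend.

```python
def schedulable_fraction(node_cpu_cores: float, reserved_cpu_cores: float) -> float:
    """Fraction of a node's CPU left for workloads after fixed per-node overhead."""
    if reserved_cpu_cores >= node_cpu_cores:
        raise ValueError("reservation must be smaller than the node")
    return (node_cpu_cores - reserved_cpu_cores) / node_cpu_cores


def pods_per_node(node_cpu_cores: float, reserved_cpu_cores: float, pod_cpu_request: float) -> int:
    """How many pods with the given CPU request fit on one node."""
    return int((node_cpu_cores - reserved_cpu_cores) // pod_cpu_request)


# Hypothetical sizes: a small VM versus a large bare metal server,
# each giving up one core to system overhead.
print(schedulable_fraction(4, 1))   # 0.75
print(schedulable_fraction(64, 1))  # 0.984375
print(pods_per_node(4, 1, 0.5))     # 6
print(pods_per_node(64, 1, 0.5))    # 126
```

With the same fixed reservation, the small node loses a quarter of its CPU to overhead while the large node loses under two percent.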

Kubernetes has a built-in concept of services that are easy to use and let us route traffic internally and externally in our cluster. On top of that, services defined in the cluster are accessible to other applications by default; all they need to know is the service name. This feature seems simple, but it eliminates the need to manage routing, firewall rules, DNS, etc., manually, removing a substantial amount of operational overhead for us.
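Behind the scenes, cluster DNS gives every service a predictable name of the form `<service>.<namespace>.svc.<cluster-domain>`, and pods in the same namespace can use the short service name on its own. The helper below is a small sketch of that naming scheme; the service and namespace names are placeholders, and `cluster.local` is the usual default domain, which a given cluster may override.

```python
def service_dns_name(service: str, namespace: str = "default",
                     cluster_domain: str = "cluster.local") -> str:
    """Fully qualified in-cluster DNS name for a Kubernetes service."""
    return f"{service}.{namespace}.svc.{cluster_domain}"


def service_url(service: str, port: int, namespace: str = "default") -> str:
    """HTTP URL another pod could use to reach the service (placeholder scheme)."""
    return f"http://{service_dns_name(service, namespace)}:{port}"


# Placeholder service and namespace names.
print(service_dns_name("elasticsearch", "logging"))
print(service_url("elasticsearch", 9200, "logging"))
```

An application only needs the service name in its configuration; no IP addresses or manually maintained DNS records are involved.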

The transition to Kubernetes wasn’t painless, and there were limitations and complexity we uncovered when moving from VMs to K8s. Also, with Kubernetes on bare metal, conveniences like standard local disk encryption aren't there. But it’s been worth it because the machines we have outperform anything we’ve used before, and they are much more cost-efficient.

It’s expensive to run at scale in the cloud, and hard to exit; we saved roughly $5,000,000 in a year by switching to Equinix Metal. No joke: we cut our hosting bill by more than $400,000 a month.

Building From the Ground Up

When we built out our architecture, we had some parameters we needed to meet.

The first was that it had to be low maintenance. We didn’t want to spend a lot of our time or take frequent actions to manage it. We also needed it to be stable; it shouldn’t require regular troubleshooting. Our underlying infrastructure had to be performant because we didn’t want an army of nodes to run our stack. Cost is always a consideration, and we had to make sure we didn’t break the bank.

Because our client base is in diverse industries, we have to keep compliance at the forefront of what we’re doing. Equinix Metal provides a secure environment to meet HIPAA and SOC2 compliance, but we still had to architect Kubernetes on our end. One of the requirements of PCI-DSS, for example, is file integrity monitoring and intrusion detection. This is a downside of running your own Kubernetes stack: you become responsible for the compliance of everything underlying it. Running additional software to meet these requirements creates additional costs, both financially and in resources on each server. To avoid that, we opted for a read-only operating system designed for containers, which essentially eliminates the need for file integrity monitoring.
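One way to spot-check the read-only claim on a Linux host is to look at the mount options the kernel reports in `/proc/mounts`. The sketch below parses text in that format; the sample lines are invented for illustration and were not captured from a real node, and a check like this is only a sanity test, not a substitute for a compliance control.

```python
def is_mounted_read_only(mounts_text: str, mount_point: str) -> bool:
    """Report whether mount_point carries the 'ro' option in /proc/mounts-style text."""
    result = None
    for line in mounts_text.splitlines():
        fields = line.split()
        # Expected layout: device, mount point, fs type, options, dump, pass.
        if len(fields) < 4 or fields[1] != mount_point:
            continue
        # Later entries shadow earlier ones, so the last match wins.
        result = "ro" in fields[3].split(",")
    if result is None:
        raise LookupError(f"{mount_point} is not listed as a mount point")
    return result


# Invented sample in /proc/mounts format.
SAMPLE_MOUNTS = """\
/dev/mapper/usr /usr ext4 ro,seclabel,relatime 0 0
/dev/sda9 / ext4 rw,seclabel,relatime 0 0
"""

print(is_mounted_read_only(SAMPLE_MOUNTS, "/usr"))  # True
print(is_mounted_read_only(SAMPLE_MOUNTS, "/"))     # False
```

On a real host the same function could be fed the contents of `/proc/mounts` instead of the sample string.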

We still run monitoring and security software in containers with Sysdig Monitoring and Sysdig Secure, but running separate software on the OS level was something we wanted to avoid.

Tooling up!

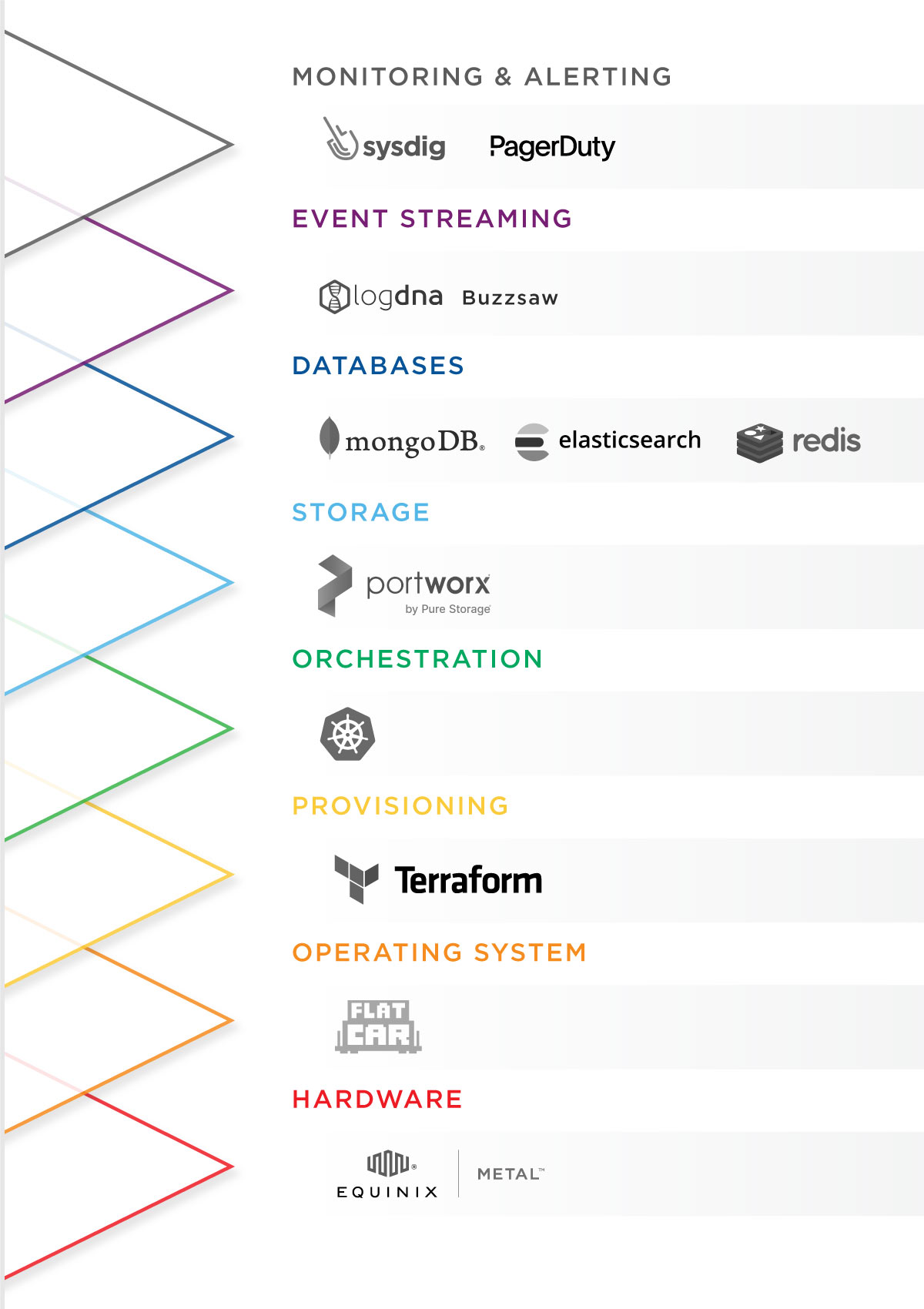

To manage our Kubernetes installation, we had to decide which tooling made sense for us. Here’s where we landed:

Host OS - Flatcar (Available natively on Equinix Metal): A purpose-built OS for hosting containers? Yes, please. On top of that, because /usr is a read-only file system, we can more easily achieve our compliance commitments without additional software bloat, like File Integrity Monitoring, running on the nodes themselves.

Deployment - Razee. Razee allows us to automate and manage Kubernetes resource deployments across clusters, environments, and cloud providers. We can also visualize deployment information for our resources, monitor the rollout process, and find deployment issues more quickly. All of the CI/CD for deploying is pretty simple with Razee.

Infrastructure as Code - Terraform. Terraform gives us a simple, file-based configuration to launch infrastructure stored in version control to facilitate peer review. It also gives us repeatable and consistent deployments. Plus there’s a native Terraform provider for Equinix Metal.

Databases - MongoDB, Elasticsearch, and Redis. All of our user data is stored in MongoDB and indexed by Elasticsearch. We use Redis, both persistent and in-memory, for our alerts and for caching menus.

Storage - Portworx - We started with our own local disk storage solution. We had to manage it with disk provisions, bootstrap it in the container, and deal with breaking API changes. The cost of operating our solution didn’t make sense, so we decided to pay a vendor and went with Portworx. This was a crucial decision for us to scale; we went from 3 environments to 15 in a year, and it would have been much harder without Portworx.

Event Streaming - Buzzsaw - For events to work the way we need them to, we had to rethink event streaming. We started with Kafka, but Kafka has no easy way to reorder logs so that 'live' logs are processed ahead of older, stale ones. So we built Buzzsaw, a processing queue that can pause, start, and defer subsets of the queue to maximize uptime and minimize negative impact. It solved our ordering problem: we needed to manage the processing of log events prior to insertion into Elasticsearch. Say we see a spike in traffic; Buzzsaw lets us process old lines later by identifying a subset of the queue to defer. Buzzsaw is dynamic and absorbs fast changes, even when traffic spikes to 3, 4, or even 5 times its usual level, so we can continue to meet SLAs. We're also able to process by line, bringing in enhanced metadata and pushing it to our transformers, which then send logs where they should go with minimal state syncing. Plus, the name fits with our log theme.

Monitoring and Alerting - Sysdig and PagerDuty - All of our monitoring and alerts are pretty streamlined by using Sysdig and pushing alerts through PagerDuty.
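The deferral idea described for Buzzsaw above can be sketched with a priority queue. This is not Buzzsaw's implementation, which the post does not detail; the class, its method names, and the staleness threshold are all hypothetical, and the sketch only shows how live lines can be served ahead of stale ones while a paused subset is held back.

```python
import heapq
import itertools

LIVE, STALE = 0, 1  # lower number is processed first


class DeferringQueue:
    """Toy queue: live lines first, stale lines later, paused sources held back."""

    def __init__(self, stale_after_seconds: float):
        self.stale_after = stale_after_seconds
        self._heap = []
        self._arrival = itertools.count()  # keeps arrival order within a priority
        self._paused = set()
        self._held = {}  # source -> list of (timestamp, line)

    def push(self, source: str, timestamp: float, line: str, now: float) -> None:
        if source in self._paused:
            self._held.setdefault(source, []).append((timestamp, line))
            return
        priority = STALE if now - timestamp > self.stale_after else LIVE
        heapq.heappush(self._heap, (priority, next(self._arrival), source, line))

    def pause(self, source: str) -> None:
        """Hold back lines pushed for this source from now on."""
        self._paused.add(source)

    def resume(self, source: str, now: float) -> None:
        """Release held lines, re-evaluating their staleness."""
        self._paused.discard(source)
        for timestamp, line in self._held.pop(source, []):
            self.push(source, timestamp, line, now)

    def pop(self):
        """Return the next (source, line) to process, or None if empty."""
        if not self._heap:
            return None
        _, _, source, line = heapq.heappop(self._heap)
        return source, line
```

For example, with `stale_after_seconds=60`, a line stamped 1,000 seconds ago is popped after a line stamped 10 seconds ago even if it was pushed first, and lines for a paused source only become available once `resume` is called.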

Some Totally Metal Workloads

Bare metal helps us achieve an incredible price-to-performance ratio. A large part of why we chose Equinix Metal was how much more bang we can get for our buck than with traditional cloud providers. Merely switching from SSD to NVMe disk drives gave our Elasticsearch clusters a substantial performance boost, and it was still far more cost-effective than any VM.

One downside of bare metal is that you don't have as many built-in service offerings because it's a foundational, no-frills service. For instance, we can't autoscale our nodes in a matter of seconds like we did when we were still spinning up VMs. If you follow in our footsteps, you will need to manage capacity at a slower pace and plan accordingly. With our instrumentation and reasonably stable traffic patterns, capacity management becomes a low-maintenance, built-in process.
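Planning capacity at a slower pace mostly means ordering hardware for the load expected by the time it arrives. The sketch below is one simple way to frame that; the load units, growth rate, headroom, and lead time are hypothetical inputs, not LogDNA's figures.

```python
import math


def nodes_needed(peak_load: float, per_node_capacity: float, headroom: float = 0.2) -> int:
    """Nodes required to serve peak_load while keeping a fraction of each node spare."""
    usable = per_node_capacity * (1 - headroom)
    return math.ceil(peak_load / usable)


def nodes_to_order(current_nodes: int, peak_load: float, weekly_growth: float,
                   lead_time_weeks: int, per_node_capacity: float,
                   headroom: float = 0.2) -> int:
    """Extra nodes to order now so capacity is sufficient when they arrive."""
    projected_load = peak_load * (1 + weekly_growth) ** lead_time_weeks
    required = nodes_needed(projected_load, per_node_capacity, headroom)
    return max(0, required - current_nodes)


# Hypothetical inputs: 100 units of peak load, 10 units per node,
# 5% weekly growth, and a four-week lead time on new servers.
print(nodes_needed(100, 10))              # 13
print(nodes_to_order(13, 100, 0.05, 4, 10))  # 3
```

With stable traffic patterns the inputs change slowly, so a calculation like this can run on a schedule rather than in response to every spike.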

Since Equinix Metal doesn’t offer a managed Kubernetes service, you'll need to BYOK (bring your own Kubernetes). Maintaining this can be challenging if you've never done it before. There is an overwhelming number of ways you can set up your architecture, so be prepared to dive into some research.

Also, be aware that managing your own Kubernetes cluster isn't 'free'; it means your infrastructure team will be spending time bootstrapping nodes, updating systems, and troubleshooting issues. Equinix Metal has some amazing Slack-based support to help us (which is pretty impressive considering how low level in the stack their users are), but ultimately it is our job to manage the infrastructure. For us, this was a no-brainer since some of our largest bills were from our hosting provider, and the added management cost of running on bare metal was just a drop in the bucket against our reduced TCO.

What Makes It Our Way

The modular nature of Kubernetes allows us to customize nearly every aspect, from networking to host-level optimizations. We don't host a Kubernetes stack built on the assumptions of others. We evaluated and chose each component based on the needs of our team. This is our stack, what we need, and that's enough for us to consider it Kubernetes our way.
