Liquid Cooling In Action—On Our Own Production Servers

What we have learned deploying and running two-phase liquid cooling for a critical production workload.

Last year, when we kicked off our first-ever deployment of a liquid-cooled production system, we weren’t after greater performance. Substantial performance benefits were a given; that much had been well documented, including by us. The bigger barrier to adopting liquid cooling for a large data center operator is the need to build and scale new operational knowledge. So that was our objective for the deployment: to learn what it takes to run a liquid-cooled data center as an operator with a global footprint.

We had already been testing liquid cooling for several years, collaborating with a data center liquid cooling company named ZutaCore in our Co-Innovation Facility in Ashburn, Virginia. Then came a chance to see ZutaCore’s technology in action in a real-life production environment. It came when Equinix acquired Packet, the bare metal cloud provider that’s now called Equinix Metal. The deal gave us a cloud-provider infrastructure of our own, so we decided to see how the cooling solution performs in one of the racks running the Metal control plane. The point was to learn from deploying and running a liquid-cooled production environment, share the learnings with the greater Equinix team and explore the path to liquid cooling adoption that enterprise customers can expect in colocation.

We set three basic criteria for the deployment (done in one of our New York Metro data centers):

- The local operational team would be empowered with all the knowledge necessary to perform any and all duties related to the liquid cooling system. This included retrofits and any ongoing service requirements for the system.

- Cage and rack power draw would remain at the contracted values. We would not attempt to increase rack or cage power density.

- We would make no changes to the facility to enable liquid cooling, and there would be no changes in temperature for adjacent customers.

Why ZutaCore? Because its two-phase data center liquid cooling system is radically different from single-phase liquid cooling designs, which are well established in the industry. There are single-phase products on the market from well-known players like CoolIT and upstarts like JetCool, as well as custom OEM solutions from IBM, Lenovo and HPE. Two-phase liquid cooling is a more recent arrival in the data center market, and we are excited by the magnitude of its positive implications for energy efficiency, power density and sustainability (as we explained in depth in a previous blog post).

Now that we’ve had a chance to deploy ZutaCore’s solution and watch it in action for a few months, we want to share some early observations.

Making Bubbles

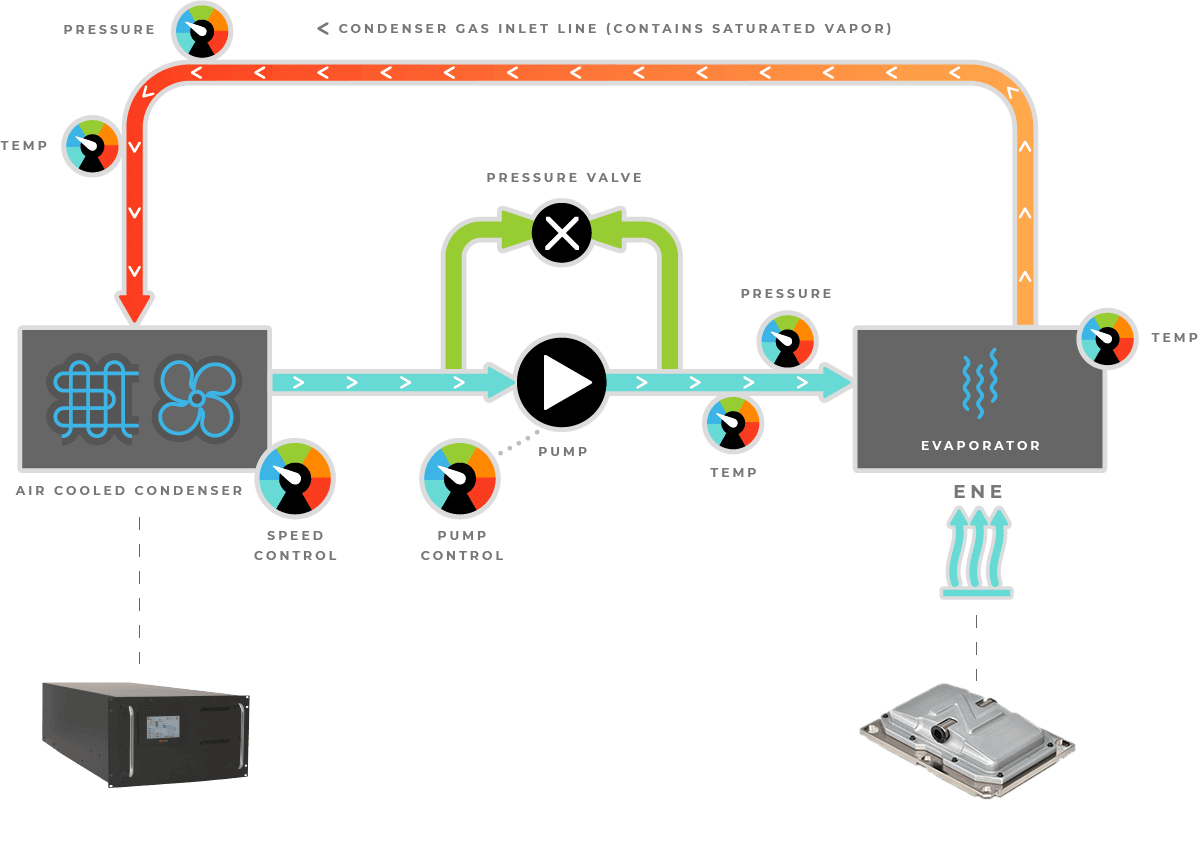

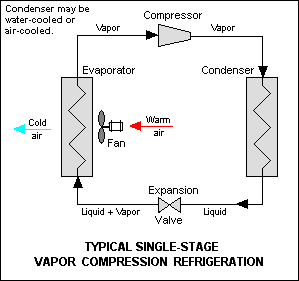

Traditional facility-level data center cooling systems (as well as home air conditioners and refrigerators) already take advantage of two-phase liquid cooling. Air is cooled using the two-phase principle in a centralized cooling unit and sent through the IT equipment to pick up heat and then release it outside. A system like ZutaCore’s takes air as the cooling medium out of the loop, removing heat directly at the source— often the processor—rather than first moving hot air to a centralized cooling unit.

A picture is worth a thousand words:

Note how similar ZutaCore’s cooling cycle in its diagram is to the standard refrigeration cycle:

Single-phase cooling systems absorb heat by raising the temperature of the coolant, while two-phase cooling relies instead on phase change: a liquid absorbs heat as it turns into vapor and releases it as it condenses back into liquid. The key in two-phase cooling is getting enough heat to vaporize the cooling fluid. Water, at sea level, boils at 100C, for example. Supercomputers have for decades been cooled with water using single-phase cooling systems. Engineered refrigerant fluids are designed to boil at much lower temperatures than water. At atmospheric pressure, the boiling points of refrigerants used in home and car ACs, R-134a and R-1234yf, are around -26C (-15F) and -30C (-22F), respectively.
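
To put rough numbers on the difference, here is a minimal Python sketch comparing how much coolant must flow to carry away the same heat when the coolant only warms up (single-phase, Q = m_dot * cp * dT) versus when it boils (two-phase, Q = m_dot * h_fg). The 200 W load, the 10 K allowable water temperature rise and the 150 kJ/kg latent heat are illustrative assumptions, not properties of ZutaCore’s fluid or of our deployment.

```python
# Minimal sketch: coolant mass flow needed for single-phase vs. two-phase heat removal.
# The heat load, temperature rise and latent heat below are illustrative assumptions.

WATER_CP_J_PER_KG_K = 4186.0  # approximate specific heat of liquid water


def single_phase_flow_kg_s(heat_w: float, cp_j_per_kg_k: float, delta_t_k: float) -> float:
    """Coolant only warms up (sensible heat): Q = m_dot * cp * dT."""
    return heat_w / (cp_j_per_kg_k * delta_t_k)


def two_phase_flow_kg_s(heat_w: float, latent_heat_j_per_kg: float) -> float:
    """Coolant boils at a roughly constant temperature (latent heat): Q = m_dot * h_fg."""
    return heat_w / latent_heat_j_per_kg


if __name__ == "__main__":
    cpu_heat_w = 200.0        # assumed processor heat load
    water_rise_k = 10.0       # assumed allowable water temperature rise
    assumed_h_fg = 150_000.0  # assumed latent heat of an engineered fluid, J/kg

    water = single_phase_flow_kg_s(cpu_heat_w, WATER_CP_J_PER_KG_K, water_rise_k)
    fluid = two_phase_flow_kg_s(cpu_heat_w, assumed_h_fg)
    print(f"single-phase water: {water * 1000:.2f} g/s")
    print(f"two-phase fluid:    {fluid * 1000:.2f} g/s")
```

Under these assumptions the boiling fluid needs less than a third of the mass flow (about 1.3 g/s versus 4.8 g/s), and it stays near its boiling point instead of warming up along the way.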

In the ZutaCore system, the two-phase fluid (refrigerant!) turns to vapor at about 92F (33C). If you build a tiny pool for the fluid on top of a processor and keep topping it up as the fluid evaporates, the temperature of the metal plate in the chip’s casing that’s in contact with the fluid (chipmakers call it the “Integrated Heat Spreader”) will generally stay close to 92F. This temperature is referred to as Tcase, and the main challenge in cooling CPUs and GPUs is keeping Tcase at a safe operating point. The maximum Tcase Intel specifies for one of its legacy Xeon V2 server CPUs is 74C (165.2F), while one of the more recent 3rd Generation Xeon Scalable chips can run hotter, at 81C (177.8F). The lower the maximum allowable Tcase, the stricter the cooling requirements.
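
One way to see why the Tcase limit matters is a one-resistance model: if the boiling fluid holds the wet side of the heat spreader near 33C, Tcase ends up at roughly T_boil + R * P, where R is the thermal resistance between the heat spreader and the fluid and P is the processor’s heat output. The sketch below is a simplification with a hypothetical 150 W load; it computes the largest R that keeps each Intel part within its Tcase limit. A smaller allowable R is what “stricter cooling requirements” means in practice.

```python
# One-resistance model (a simplification): Tcase ~= T_boil + R * P.
# The 150 W heat load is hypothetical; Tcase limits and boiling point come from the text.

FLUID_BOIL_C = 33.0  # ZutaCore fluid turns to vapor at about 33C (92F)


def c_to_f(temp_c: float) -> float:
    """Convert an absolute temperature from Celsius to Fahrenheit."""
    return temp_c * 9 / 5 + 32


def max_resistance_c_per_w(tcase_max_c: float, fluid_boil_c: float, heat_w: float) -> float:
    """Largest heat-spreader-to-fluid thermal resistance (C/W) that keeps Tcase within its limit."""
    headroom_c = tcase_max_c - fluid_boil_c
    if headroom_c <= 0:
        raise ValueError("fluid boils at or above the Tcase limit; no thermal headroom")
    return headroom_c / heat_w


if __name__ == "__main__":
    hypothetical_heat_w = 150.0
    for name, tcase_max_c in [("legacy Xeon V2", 74.0), ("3rd Gen Xeon Scalable", 81.0)]:
        r_max = max_resistance_c_per_w(tcase_max_c, FLUID_BOIL_C, hypothetical_heat_w)
        print(f"{name}: Tcase max {tcase_max_c:.0f}C ({c_to_f(tcase_max_c):.1f}F), "
              f"R must stay below {r_max:.3f} C/W")
```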

There’s a physical phenomenon that makes keeping Tcase under control with two-phase liquid cooling challenging. If you splash a little bit of water on an extremely hot surface—say, a heated frying pan—you’ll see water droplets form and bounce around instead of evaporating right away. This is called the Leidenfrost effect, and it happens because of a tiny layer of water vapor that forms under each droplet, insulating it from the hot surface. ZutaCore addresses the Leidenfrost effect with its Enhanced Nucleation Evaporator technology. (“Nucleation” is a fancy word for making bubbles.)

Fluid selection is critical to meeting performance requirements. Air conditioning refrigerants have evolved over time, migrating from ozone-depleting R-12 and high-GWP (Global Warming Potential) R-134a to the current R-1234yf used in automotive applications. Equinix has been working with ZutaCore to shift toward liquids with lower GWP, ideally 1 or less. Working with partners like ZutaCore allows us to provide critical feedback early in the product development cycle, which is especially important for topics that are core to Equinix’s sustainability goals. We’re influencing the fluid selection so we can take a long-term view of refrigerants in our data centers.
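
GWP expresses how much warming a gas causes relative to the same mass of CO2 over a set horizon (typically 100 years), so the climate cost of a leak is the released mass times the fluid’s GWP. The sketch below uses R-134a’s IPCC AR4 value of 1430 and treats R-1234yf as 1 (IPCC AR5 lists it below 1). The 2 kg release is hypothetical, and the GWP values appropriate for reporting depend on which assessment report your framework follows.

```python
# CO2-equivalent of a refrigerant release: mass released * GWP100.
# GWP values are illustrative (R-134a: IPCC AR4; R-1234yf: rounded up from AR5's "<1").

GWP100 = {
    "R-134a": 1430.0,
    "R-1234yf": 1.0,
}


def co2e_kg(refrigerant: str, released_kg: float) -> float:
    """Kilograms of CO2-equivalent for a given mass of released refrigerant."""
    return GWP100[refrigerant] * released_kg


if __name__ == "__main__":
    hypothetical_release_kg = 2.0
    for fluid in GWP100:
        print(f"{fluid}: {co2e_kg(fluid, hypothetical_release_kg):,.0f} kg CO2e")
```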

The Nuts and Bolts (Plus a Manifold)

For our first liquid-cooled production environment we went with a standard set of hardware: no overly power-dense CPUs, no GPUs. For expediency, we chose to convert air-cooled single-socket AMD SP3 platforms already in the field (the bare metal instances our customers know as m3.large.x86). These standard 19” 1RU servers are a high-volume system for us, a fleet that’s readily available for side-by-side testing.

The AMD processors’ TDP (Thermal Design Power, the sustained heat output a cooling system must be designed to dissipate) is 180W to 200W. That’s nothing a traditional air cooling system can’t handle, so why did we choose to convert these servers when their CPUs are so easy to cool? For two reasons. First, we wanted a “paved-path” solution focused on sustainability in place before very high-TDP CPUs arrive in early 2023. Second, this wasn’t just any workload: we were going all in with a full rack of equipment powering our production control plane. That’s right, we’ve taken liquid cooling to production with console.equinix.com (our customer portal) and the Metal API!

The installation is a simple stackup: a 6-RU liquid-to-air exchanger at the bottom, more than twenty 1-RU systems above it and a manifold on the side. Small-diameter tubing makes routing the liquid and vapor lines surprisingly easy. Keeping the lateral runs from each server to the manifold short is important; a shared manifold across racks is not a suggested installation method.

This photo gallery captures the key steps in our installation process:

Photos by ChrisKPhotography

Leaks are always a concern when dealing with liquids, of course. The manifold and its quick connections use a non-spill design that releases at most a drop of fluid when disconnected. The fluid itself also has the convenient property of evaporating completely at atmospheric conditions. A pressure test during commissioning, combined with a refrigerant leak detector, provides high confidence that the stand-alone system is leak-free.
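
A common form of commissioning pressure test is a pressure-decay (hold) test: pressurize the loop, wait, and check that the pressure has not dropped by more than an allowed amount. Because gas pressure also changes with temperature, readings are usually normalized to a common temperature first. The sketch below assumes the loop is filled with a dry test gas behaving as an ideal gas at fixed volume; the readings and the 5 kPa pass threshold are hypothetical and do not describe ZutaCore’s actual commissioning procedure.

```python
# Hypothetical pressure-decay (hold) check with ideal-gas temperature compensation.
# Assumes a dry test gas at fixed volume; thresholds and readings are made up for illustration.
from dataclasses import dataclass


@dataclass
class Reading:
    pressure_kpa_abs: float  # absolute pressure, kPa
    temp_c: float            # gas temperature, Celsius


def corrected_decay_kpa(start: Reading, end: Reading) -> float:
    """Pressure loss after scaling the end reading back to the start temperature (P/T constant)."""
    start_k = start.temp_c + 273.15
    end_k = end.temp_c + 273.15
    end_at_start_temp = end.pressure_kpa_abs * start_k / end_k
    return start.pressure_kpa_abs - end_at_start_temp


def passes_hold_test(start: Reading, end: Reading, max_decay_kpa: float) -> bool:
    """True if the temperature-corrected pressure loss is within the allowed decay."""
    return corrected_decay_kpa(start, end) <= max_decay_kpa


if __name__ == "__main__":
    start = Reading(pressure_kpa_abs=1000.0, temp_c=20.0)
    end = Reading(pressure_kpa_abs=1012.0, temp_c=24.0)  # room warmed up during the hold
    print(f"corrected decay: {corrected_decay_kpa(start, end):.2f} kPa")
    print("PASS" if passes_hold_test(start, end, max_decay_kpa=5.0) else "FAIL")
```

In this example the raw readings show a pressure rise, yet the temperature-corrected loss is about 1.6 kPa, which is why compensation matters in both directions.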

We’ve trained data center teams for decades that liquids and electronics don’t go together. The resulting default aversion to anything with the word “liquid” in it doesn’t bode well for adoption of liquid cooling by the industry. But dielectric two-phase fluids are in fact designed to go well with electronics. Still, something as simple as documented IBX policies preventing the inbound shipment of liquids can add operational friction to an already challenging project. These policies are being actively reviewed based on our learnings.

Learning By Doing

Liquid cooling in data centers is new enough to make constant education extremely important, and the installation process was very educational. The Equinix Metal onsite teams responsible for day-to-day installation and maintenance at Equinix data centers have engineering teams available to them to address any issues as they arise. During the ZutaCore system installation, they had the right to stop at any point where they didn’t feel sure or comfortable.

During a second round of installs, a couple of systems failed a pressure test done before they were installed in the rack. The team halted the installation and worked with a very supportive and responsive ZutaCore team on a resolution.

Our installation initially went against ZutaCore’s advice on the minimum amount of heat (>= 6kW) the system needs to perform at an optimal level. At very low heat levels in the overall system (<=~2kW in the liquid system as we started to add servers), we observed tJunction (the temperature of the silicon die inside the processor package) reported by the BMC jumping up and down, though never higher than an air-cooled equivalent. The manifold itself can dissipate ~1kW of heat, which causes some of the vapor to condense before it reaches the heat exchanger, or HRU (heat rejection unit); the resulting pressure changes produce the temperature variation. Even with the variation, we did not observe any tJunction higher than 52C, which is considerably lower than any air-cooled 1U equivalent under load. The ZutaCore team is experienced with the variables that influence cooling performance, and following their design guidance is highly recommended. The system currently operates at the power level advised by the ZutaCore team.
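
A rough way to see why a minimum heat load matters: if the manifold sheds a roughly fixed ~1 kW regardless of load (a simplifying assumption; the real figure will vary with vapor temperature and room conditions), that loss is a large fraction of a small load and a much smaller fraction of the recommended one.

```python
# Simplified model: the manifold sheds a fixed amount of heat regardless of total load.
MANIFOLD_LOSS_W = 1_000.0    # "~1kW" observed; treated as constant (assumption)
RECOMMENDED_MIN_W = 6_000.0  # ZutaCore's advised minimum heat load


def manifold_share(total_heat_w: float) -> float:
    """Fraction of the rack's heat that condenses in the manifold before reaching the HRU."""
    if total_heat_w <= 0:
        raise ValueError("total heat must be positive")
    return min(MANIFOLD_LOSS_W, total_heat_w) / total_heat_w


if __name__ == "__main__":
    for load_w in (2_000.0, 4_000.0, 6_000.0):
        status = "meets" if load_w >= RECOMMENDED_MIN_W else "below"
        print(f"{load_w / 1000:.0f} kW: {manifold_share(load_w):.0%} condenses early ({status} guidance)")
```

At 2 kW, half of the heat would condense in the manifold under this model; at the recommended 6 kW, roughly a sixth would.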

The installation has been stable and operational, with no issues, since it was commissioned in June 2022.

Why Bother With All This?

Since it’s the start of the year, when everybody likes to make predictions, here’s one: Equinix is at the beginning of a journey we expect most Fortune 2000 companies to make in the next couple of years. Data center liquid cooling will go from being almost exclusively in the HPC realm to becoming a standard requirement for systems. Colocation operators like Equinix will be changing buildings and operational policies to enable customers to leverage it in pursuit of sustainability.

Equinix Metal has a unique role in the colocation ecosystem. We’re a colocation customer of our own parent company, which happens to also provide colocation to some of the biggest Fortune 2000 organizations. As such, we’re in a good position to help Equinix meet its own sustainability goals while addressing the issues every Fortune 2000 company will face. As an operator of data centers with a large footprint of its own IT equipment, we can help guide the industry toward a better outcome for this third rock from the sun.

One early improvement the data center industry needs is a better way to measure the efficiency of our computing facilities. PUE (Power Usage Effectiveness), the data center energy efficiency metric, was created largely on the assumption that air cooling and fans were a necessary part of servers, switches and routers, and it doesn’t really capture the benefits of liquid cooling. PUE is simply the total power a data center receives from the utility divided by the power consumed by IT equipment. The smaller the divisor, the higher the PUE, and the divisor includes the power consumed by server fans, which account for a substantial portion of total IT power consumption. Direct-to-chip liquid cooling mostly removes the need for server fans, which can make PUE higher even as total power use goes down.
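
A small worked example with hypothetical numbers shows the effect. Assume a facility with 1,000 kW of IT load, 100 kW of which is server fans, plus 400 kW of facility overhead. If liquid cooling removes the fans and, conservatively, the overhead stays at 400 kW, total power falls while PUE rises.

```python
# Hypothetical numbers illustrating how removing server fans can raise PUE
# while lowering total facility power. PUE = total facility power / IT power.


def pue(total_facility_kw: float, it_kw: float) -> float:
    return total_facility_kw / it_kw


if __name__ == "__main__":
    overhead_kw = 400.0               # assumed facility overhead, held constant
    air_it_kw = 1_000.0               # includes 100 kW of server fans (assumption)
    liquid_it_kw = air_it_kw - 100.0  # fans removed by direct-to-chip cooling

    for label, it_kw in (("air-cooled", air_it_kw), ("liquid-cooled", liquid_it_kw)):
        total_kw = it_kw + overhead_kw
        print(f"{label}: total {total_kw:.0f} kW, PUE {pue(total_kw, it_kw):.3f}")
```

Total power drops from 1,400 kW to 1,300 kW, yet PUE goes from 1.40 to about 1.44, so the metric reports the more efficient facility as the less efficient one.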

To be sure, the advent of PUE has had an enormous positive impact by highlighting the challenges of enterprise data centers, contrasting their PUEs, generally at or near 2.0, with those of highly efficient hyperscale operators, which tend to hover just above 1.0. But the newly intensified focus on sustainability, and on how efficiently total power is used, requires a much more holistic measuring stick.

At Interpack 2022, NVIDIA and Vertiv engineers proposed a new metric: Total Usage Efficiency, or TUE. Their paper, Interpack2022-97447, shows a striking 15% energy reduction on a site utilizing hybrid cooling (a combination of liquid and air), with 75% of the heat absorbed in a direct-to-chip cooling module and the remaining 25% left in air. A more holistic and up-to-date metric should look more along the lines of TUE than PUE.
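
A commonly cited formulation of TUE multiplies PUE by ITUE (IT-power usage effectiveness), the ratio of total IT power to the power that actually reaches compute components; the product reduces to total facility power divided by compute power. Interpack2022-97447 may define its terms differently, so treat this only as an illustration. The hypothetical facility below has 1,400 kW total and 1,000 kW of IT load, of which 850 kW reaches compute (the rest being 100 kW of fans and 50 kW of other IT overhead), compared against a liquid-cooled version with the fans removed and facility overhead unchanged.

```python
# Illustrative TUE = ITUE * PUE, which simplifies to total facility power / compute power.
# All numbers are hypothetical.


def pue(total_facility_kw: float, it_kw: float) -> float:
    return total_facility_kw / it_kw


def itue(it_kw: float, compute_kw: float) -> float:
    """Total IT power divided by the power consumed by compute components."""
    return it_kw / compute_kw


def tue(total_facility_kw: float, it_kw: float, compute_kw: float) -> float:
    return itue(it_kw, compute_kw) * pue(total_facility_kw, it_kw)


if __name__ == "__main__":
    compute_kw = 850.0   # processors, memory, storage (assumed unchanged)
    overhead_kw = 400.0  # facility overhead (assumed unchanged)
    scenarios = {
        "air-cooled": compute_kw + 100.0 + 50.0,  # + fans + other IT overhead
        "liquid-cooled": compute_kw + 50.0,       # fans removed
    }
    for label, it_kw in scenarios.items():
        total_kw = it_kw + overhead_kw
        print(f"{label}: PUE {pue(total_kw, it_kw):.3f}, TUE {tue(total_kw, it_kw, compute_kw):.3f}")
```

Here PUE worsens from 1.40 to about 1.44 while TUE improves from about 1.65 to 1.53, matching the direction of the actual change in total power.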

Another big question to answer as liquid cooling adoption in the data center industry grows is what to do with all the heat the liquid absorbs. There is too much of it to simply dissipate into the atmosphere; it can be put to use.

Mechanical engineers use a classification system of heat “grades” to determine its usefulness. Lowest-grade heat is generally 100C or lower. Data center operators generally keep the air going into server inlets below 27C (80.6F), the ASHRAE A1 Recommended upper temperature limit. Assuming that the air gets 20F warmer as it passes through the server (a 20F Delta T), the maximum air temperature we’d be capturing in an HVAC system would be 38.1C (100.6F). Traditionally, data centers dump this heat into the atmosphere, as there’s very little use for such low-grade heat. For context, a nice hot tub temperature is 40C (104F).
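
The 38.1C figure relies on a conversion detail worth spelling out: a temperature difference in Fahrenheit converts to Celsius with the 5/9 scale factor alone, without the 32-degree offset used for absolute temperatures. A short check of the arithmetic:

```python
# Converting absolute temperatures vs. temperature differences between Fahrenheit and Celsius.


def c_to_f(temp_c: float) -> float:
    """Absolute temperature: scale and offset."""
    return temp_c * 9 / 5 + 32


def delta_f_to_delta_c(delta_f: float) -> float:
    """Temperature difference: scale only, no 32-degree offset."""
    return delta_f * 5 / 9


if __name__ == "__main__":
    inlet_c = 27.0                                  # ASHRAE A1 recommended upper inlet limit
    exhaust_c = inlet_c + delta_f_to_delta_c(20.0)  # 20F Delta T across the server
    print(f"inlet:   {inlet_c:.1f}C ({c_to_f(inlet_c):.1f}F)")
    print(f"exhaust: {exhaust_c:.1f}C ({c_to_f(exhaust_c):.1f}F)")
```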

With the right ecosystem, there could be a lot of value in capturing and reusing this heat. That ecosystem can be built in the communities that surround the data centers powering the digital economy. Governments play a significant role in enabling the decarbonization journey of communities where data centers operate, and liquid-cooled data centers give local governments an opportunity to build heat networks. These networks are popular in the Nordic countries; Equinix’s HE3 and HE5 data centers in Helsinki, for example, supply heat for homes and businesses. But heat networks are difficult for data center operators to implement on their own and often require foresight from local governments to invest in their development alongside heat generators.

Heat networks need not be exclusive to places with cold climates. Absorption heat pumps, which are driven by heat, can use it to provide cooling for buildings and industrial systems. In water-stressed areas with access to brackish water or saltwater, this heat can be used to produce fresh water for local communities. Another option, and the easiest way to use heat networks, is making domestic hot water! All of these heat-reuse opportunities are front and center in the decisions we make at Equinix. Unfortunately, most of these technologies are still high-cost and low-volume. With the right type of investment, governments can help push their costs down and increase their adoption, much as they have done to make the return on investment in solar panels combined with lithium-ion batteries worthwhile.

Few data center technologies create non-computing-related benefits that extend outside a data center’s four walls. Two-phase direct-to-chip liquid cooling, with its promise to make computing infrastructure a source of energy, is a rare example. As Equinix CEO Charles Meyers recently said, collectively, the ongoing data center construction that’s been taking place all around the world in recent years is one of humanity’s most important infrastructure projects. As the world’s largest digital infrastructure company, we are acutely aware of how important it is to get it right. This new data center cooling technology feels like a part of getting it right.

Published on 31 January 2023
