
What is NVMe?

Uncover the essentials of Non-Volatile Memory Express (NVMe), a storage protocol that offers significant speed improvements over traditional SATA SSDs, making it ideal for handling large-scale data with high throughput and low latency on Equinix Metal.


We get this question quite a bit at Equinix Metal, where you get bare metal hardware. That means you get all the power, and all the complexity, of the hardware itself.

So what is it, and why does it matter?

In line with our "what is" series, let's look at some of the innards of how our computers work, specifically storage.

Let's start with the name "NVMe." NVMe is an acronym (after all, how else would you pronounce it, "nnvvmm-eee"?) that stands for "Non-Volatile Memory Express." Let's break that down.

First, "Non-Volatile Memory." This is a fancy way of saying "storage that doesn't lose its data when you turn off the power." It contrasts with "volatile memory," which does lose its data when the power goes off. The most common form of volatile memory is RAM, or Random Access Memory.

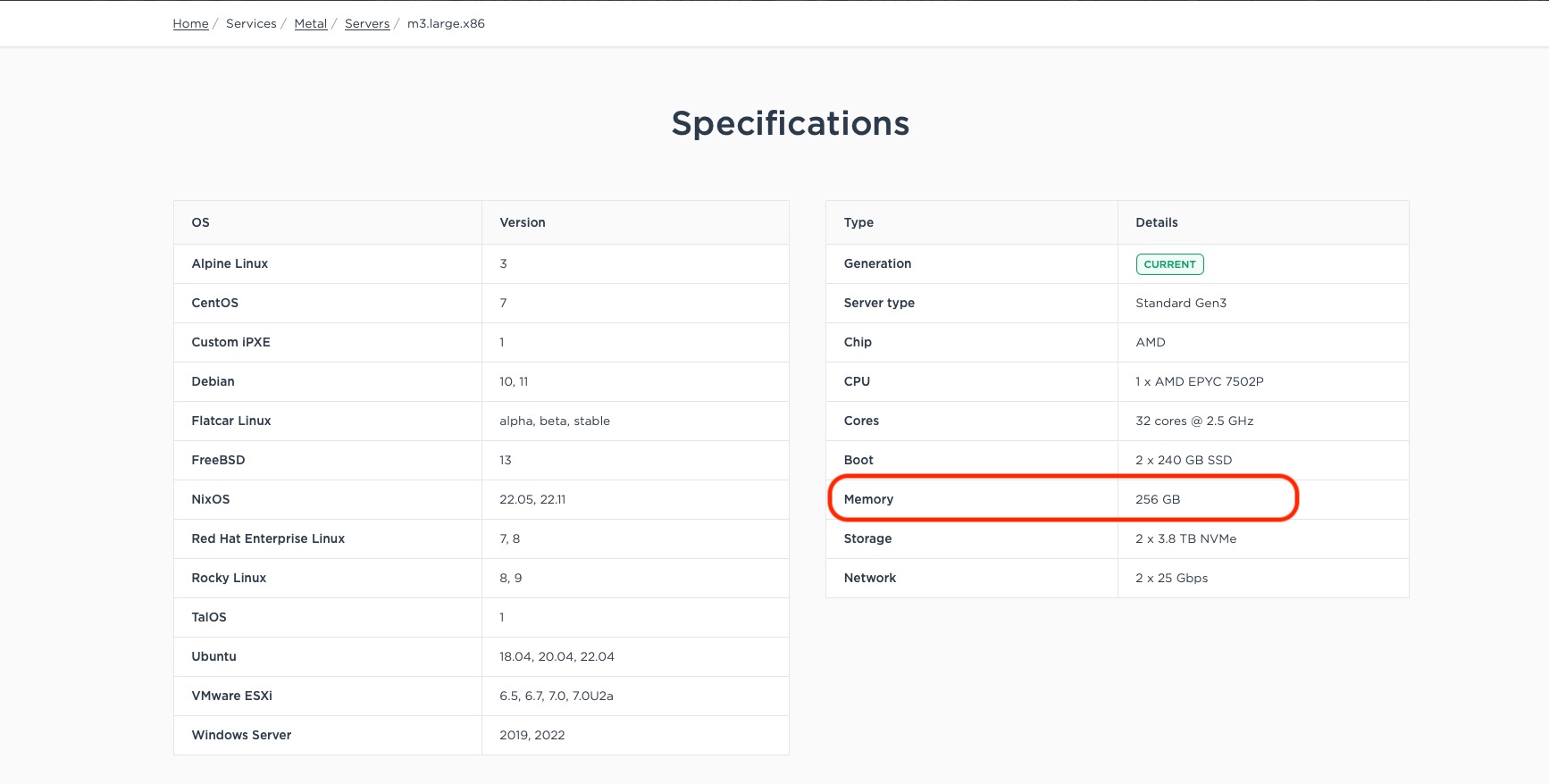

When you look up the specs for your computer, for example, Equinix Metal's all-purpose workhorse m3.large.x86, you see that it has 256GB of memory:

That 256GB of memory is volatile memory. As long as the computer is powered on, the memory will keep whatever the operating system, as well as your services running on it, have stored in it. Loaded all of the calculations for your spreadsheet? It's in memory as long as the power stays on. Got all of the parameters for your AI model in memory? It stays as long as the power stays on.

When the power goes off, all of it is lost. That is why we have permanent, or non-volatile, storage, like your hard disk drive (HDD) or solid state drive (SSD). These keep their state even without power.

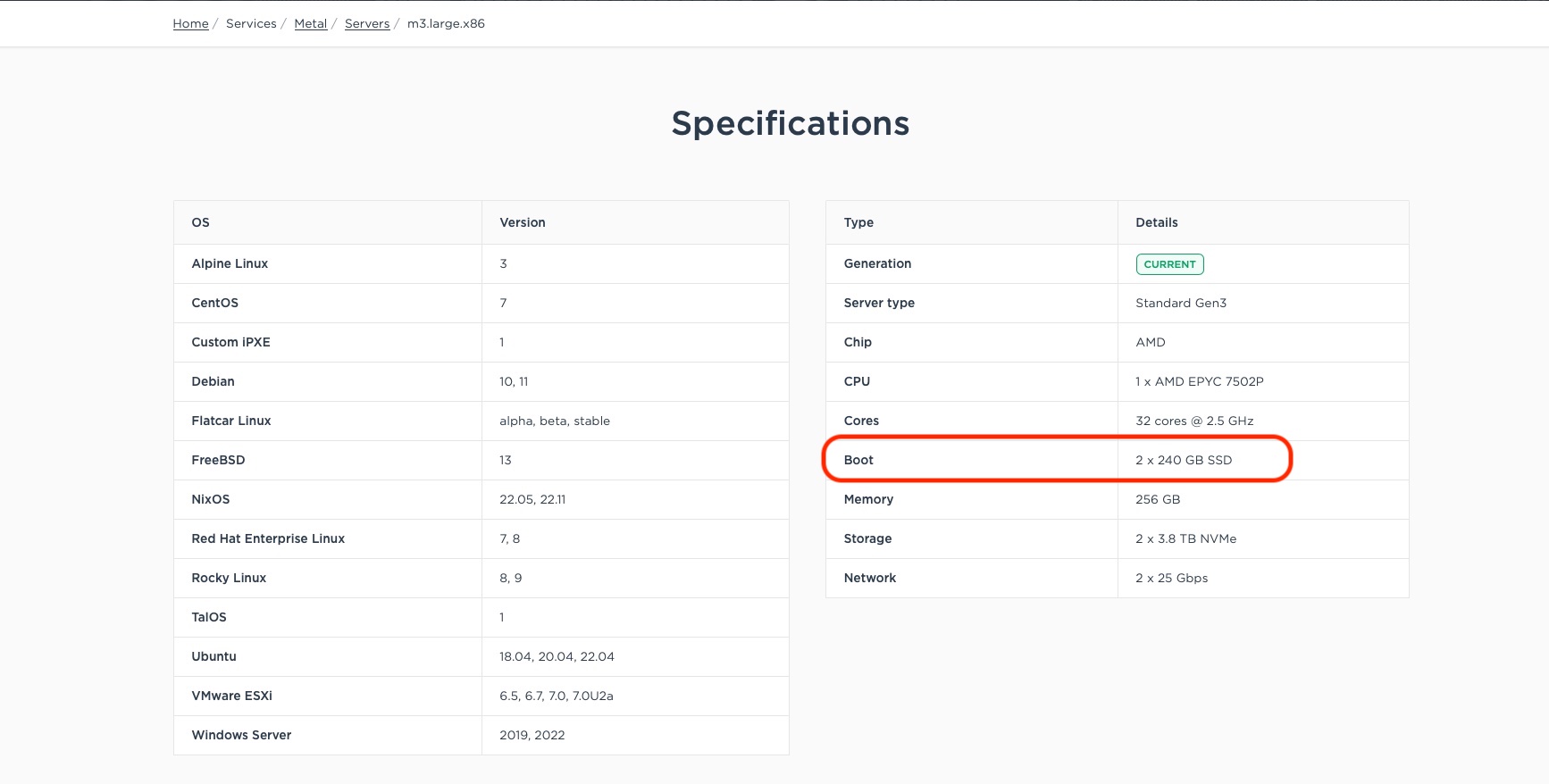

Looking back at the specs for the m3.large.x86, you see that it has 2x 240GB SSDs:

That's the hard drive, in this case a faster SSD.

As an aside, why call it "non-volatile" and not just "persistent"? It is persistent, after all. We will see shortly why the term "non-volatile" is particularly useful here.

RAM is also much more expensive than persistent storage. Look, for example, at the AWS m5d instance types:

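| Instance Size | vCPU | Memory (GiB) | Instance Storage (GB) | Network Bandwidth (Gbps) | EBS Bandwidth (Mbps) |
|---|---|---|---|---|---|
| m5d.large | 2 | 8 | 1 x 75 NVMe SSD | Up to 10 | Up to 4,750 |
| m5d.xlarge | 4 | 16 | 1 x 150 NVMe SSD | Up to 10 | Up to 4,750 |
| m5d.2xlarge | 8 | 32 | 1 x 300 NVMe SSD | Up to 10 | Up to 4,750 |
| m5d.4xlarge | 16 | 64 | 2 x 300 NVMe SSD | Up to 10 | 4,750 |
| m5d.8xlarge | 32 | 128 | 2 x 600 NVMe SSD | 10 | 6,800 |
| m5d.12xlarge | 48 | 192 | 2 x 900 NVMe SSD | 12 | 9,500 |
| m5d.16xlarge | 64 | 256 | 4 x 600 NVMe SSD | 20 | 13,600 |
| m5d.24xlarge | 96 | 384 | 4 x 900 NVMe SSD | 25 | 19,000 |
| m5d.metal | 96 | 384 | 4 x 900 NVMe SSD | 25 | 19,000 |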

Every size has almost ten times as much SSD storage as RAM: about 9.4 times, in every row.
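To check that ratio, divide instance storage by memory for each row. This minimal sketch hard-codes the numbers from the table above; the `M5D` dictionary and `storage_to_ram_ratios` helper are illustrative names, not part of any AWS tool, and it treats GiB and GB as equal, just as the sentence above does.

```python
# Memory (GiB) and total instance storage (GB) per m5d size, copied from the table above.
# Illustrative only: GiB and GB are treated as equal, as the text does.
M5D = {
    "m5d.large": (8, 1 * 75),
    "m5d.xlarge": (16, 1 * 150),
    "m5d.2xlarge": (32, 1 * 300),
    "m5d.4xlarge": (64, 2 * 300),
    "m5d.8xlarge": (128, 2 * 600),
    "m5d.12xlarge": (192, 2 * 900),
    "m5d.16xlarge": (256, 4 * 600),
    "m5d.24xlarge": (384, 4 * 900),
    "m5d.metal": (384, 4 * 900),
}


def storage_to_ram_ratios(table: dict[str, tuple[int, int]]) -> dict[str, float]:
    """Return instance storage divided by memory for each instance size."""
    return {name: storage / memory for name, (memory, storage) in table.items()}


if __name__ == "__main__":
    for name, ratio in storage_to_ram_ratios(M5D).items():
        print(f"{name:14} {ratio:.3f}x as much storage as RAM")
```

Every row works out to exactly 9.375, which is where "almost ten times" comes from.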

If non-volatile storage is so much better, meaning it can hold data, well, non-volatilely (persistently), and it is so much cheaper, why don't we just use it for everything? Why do we need RAM at all?

The answer is speed.

I went to the Equinix Metal console and launched the same type of machine as above, the m3.large.x86. Let's see what is installed.

```
root@m3-large-x86-01:~# lsblk
NAME        MAJ:MIN RM   SIZE RO TYPE MOUNTPOINTS
loop0         7:0    0  63.5M  1 loop /snap/core20/1891
loop1         7:1    0 111.9M  1 loop /snap/lxd/24322
loop2         7:2    0  53.2M  1 loop /snap/snapd/19122
sda           8:0    0 447.1G  0 disk
sdb           8:16   0 223.6G  0 disk
├─sdb1        8:17   0     2M  0 part
├─sdb2        8:18   0   1.9G  0 part [SWAP]
└─sdb3        8:19   0 221.7G  0 part /
sdc           8:32   0 223.6G  0 disk
nvme1n1     259:0    0   3.5T  0 disk
nvme0n1     259:1    0   3.5T  0 disk
```

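Here are all of the block devices. The important ones for now are sda, sdb, and sdc. sdb and sdc are the two 240GB SSDs from the spec sheet, and the operating system is installed on sdb (its third partition is mounted at /), while sda is an additional, larger 480GB drive.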
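If you want to sort these devices programmatically, one rough approach is to keep only rows whose TYPE is `disk` and group them by kernel name. This is a sketch under a stated assumption: it treats `nvme*` names as NVMe devices and `sd*` names as disks behind the kernel's SCSI disk layer, which SATA drives use. The helper name `disks_by_kind` is hypothetical, and the sample is the output shown above.

```python
SAMPLE = """\
NAME        MAJ:MIN RM   SIZE RO TYPE MOUNTPOINTS
loop0         7:0    0  63.5M  1 loop /snap/core20/1891
loop1         7:1    0 111.9M  1 loop /snap/lxd/24322
loop2         7:2    0  53.2M  1 loop /snap/snapd/19122
sda           8:0    0 447.1G  0 disk
sdb           8:16   0 223.6G  0 disk
├─sdb1        8:17   0     2M  0 part
├─sdb2        8:18   0   1.9G  0 part [SWAP]
└─sdb3        8:19   0 221.7G  0 part /
sdc           8:32   0 223.6G  0 disk
nvme1n1     259:0    0   3.5T  0 disk
nvme0n1     259:1    0   3.5T  0 disk
"""


def disks_by_kind(lsblk_output: str) -> dict[str, list[str]]:
    """Group whole disks from plain `lsblk` output by kernel name prefix (a heuristic)."""
    groups: dict[str, list[str]] = {"nvme": [], "sd": [], "other": []}
    for line in lsblk_output.strip().splitlines()[1:]:  # skip the header row
        fields = line.split()
        # Columns: NAME MAJ:MIN RM SIZE RO TYPE [MOUNTPOINTS]; TYPE is the 6th field.
        if len(fields) < 6 or fields[5] != "disk":
            continue  # skip loop devices and partitions
        name = fields[0]
        if name.startswith("nvme"):
            groups["nvme"].append(name)  # NVMe driver naming
        elif name.startswith("sd"):
            groups["sd"].append(name)  # SCSI disk layer, used by SATA drives
        else:
            groups["other"].append(name)
    return groups


if __name__ == "__main__":
    for kind, names in disks_by_kind(SAMPLE).items():
        print(kind, names)
```

On a live system, `lsblk -d -o NAME,SIZE,TRAN` reports each disk's transport (such as `sata` or `nvme`) directly, which is more reliable than guessing from names.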

Let's see what types those are (abbreviated output):

```
root@m3-large-x86-01:~# lshw -class disk
  *-disk
       description: ATA Disk
       product: MTFDDAK480TDS
       physical id: 0.0.0
       bus info: scsi@12:0.0.0
       logical name: /dev/sda
       version: J004
       serial: 213230B0EA3F
       size: 447GiB (480GB)
       capacity: 447GiB (480GB)
       configuration: ansiversion=6 logicalsectorsize=512 sectorsize=4096
  *-disk:0
       description: ATA Disk
       product: SSDSCKKB240G8R
       physical id: 0
       bus info: scsi@9:0.0.0
       logical name: /dev/sdb
       version: DL6R
       serial: PHYH119600GA240J
       size: 223GiB (240GB)
       capabilities: gpt-1.00 partitioned partitioned:gpt
       configuration: ansiversion=5 guid=5b3cd9cd-ca15-4e77-8618-e513a931b29b logicalsectorsize=512 sectorsize=4096
  *-disk:1
       description: ATA Disk
       product: SSDSCKKB240G8R
       physical id: 1
       bus info: scsi@10:0.0.0
       logical name: /dev/sdc
       version: DL6R
       serial: PHYH11960104240J
       size: 223GiB (240GB)
       configuration: ansiversion=5 logicalsectorsize=512 sectorsize=4096
```

So our large sda is an MTFDDAK480TDS, while sdb and sdc are both SSDSCKKB240G8R. Google is our friend, and gives us the following results:

- MTFDDAK480TDS: Micron 5300 PRO 480GB SATA 6Gbps, 540MB/s read / 410MB/s write

- SSDSCKKB240G8R: Intel 240GB SATA 6Gbps Enterprise Class M.2 SSD

That Micron is large, and not bad at 540MB/s read / 410MB/s write. The Intel drives are smaller, and since they also connect over SATA 6Gbps, they cannot be much faster: that interface tops out at roughly 600MB/s.
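Where does the roughly 600MB/s ceiling come from? SATA 6Gbps signals at 6 Gbit/s but uses 8b/10b line coding, so only 8 of every 10 bits on the wire carry data. The sketch below does that arithmetic. `link_payload_mb_per_s` is a hypothetical helper written for this example, and it deliberately ignores higher-level protocol overhead, so it gives an upper bound rather than a measured speed.

```python
def link_payload_mb_per_s(line_rate_gbps: float, payload_bits: int, coded_bits: int, lanes: int = 1) -> float:
    """Usable bandwidth in MB/s (10**6 bytes/s) of a serial link after line coding.

    Ignores protocol overhead above the line coding, so real drives land somewhat lower.
    """
    payload_bits_per_s = line_rate_gbps * 1e9 * payload_bits / coded_bits * lanes
    return payload_bits_per_s / 8 / 1e6


# SATA 6Gbps uses 8b/10b line coding: every 8 data bits travel as 10 bits on the wire.
SATA3_CEILING = link_payload_mb_per_s(6, 8, 10)

# Rated read speed of the Micron 5300 PRO (sda), from the list above.
MICRON_5300_READ = 540

if __name__ == "__main__":
    print(f"SATA 6Gbps ceiling: {SATA3_CEILING:.0f} MB/s")
    print(f"Micron 5300 PRO rated read uses {MICRON_5300_READ / SATA3_CEILING:.0%} of it")
```

Any drive on this interface, including the Intel SSDs, shares the same ceiling, so the differences between SATA SSDs are small compared to the gap we are about to see.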

What about memory?

```
root@m3-large-x86-01:~# dmidecode --type 17
# dmidecode 3.3
Getting SMBIOS data from sysfs.
SMBIOS 3.3 present.

Handle 0x1100, DMI type 17, 92 bytes
Memory Device
    Array Handle: 0x1000
    Error Information Handle: Not Provided
    Total Width: 72 bits
    Data Width: 64 bits
    Size: 32 GB
    Form Factor: DIMM
    Set: 1
    Locator: A1
    Bank Locator: Not Specified
    Type: DDR4
    Type Detail: Synchronous Registered (Buffered)
    Speed: 3200 MT/s
    Manufacturer: 802C869D802C
    Serial Number: F28E1B79
    Asset Tag: 25212622
    Part Number: 36ASF4G72PZ-3G2J3
    Rank: 2
    Configured Memory Speed: 3200 MT/s
    Minimum Voltage: 1.2 V
    Maximum Voltage: 1.2 V
    Configured Voltage: 1.2 V
    Memory Technology: DRAM
    Memory Operating Mode Capability: Volatile memory
    Firmware Version: Not Specified
    Module Manufacturer ID: Unknown
    Module Product ID: Unknown
    Memory Subsystem Controller Manufacturer ID: Unknown
    Memory Subsystem Controller Product ID: Unknown
    Non-Volatile Size: None
    Volatile Size: 32 GB
    Cache Size: None
    Logical Size: None
...
# repeats for each memory module
```
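Because that record repeats for every module, it can be easier to parse than to read. The sketch below uses hypothetical helpers (not part of dmidecode): it splits the text into one record per "Memory Device" and sums the exact "Size" field. Matching the key exactly matters, since each record also has "Volatile Size", "Non-Volatile Size", "Cache Size" and "Logical Size" lines. The sample is trimmed from the output above.

```python
SAMPLE = """\
Handle 0x1100, DMI type 17, 92 bytes
Memory Device
\tData Width: 64 bits
\tSize: 32 GB
\tType: DDR4
\tConfigured Memory Speed: 3200 MT/s
\tMemory Operating Mode Capability: Volatile memory
\tNon-Volatile Size: None
\tVolatile Size: 32 GB
"""


def parse_memory_devices(dmidecode_output: str) -> list[dict[str, str]]:
    """Split `dmidecode --type 17` text into one dict of fields per "Memory Device" record."""
    devices: list[dict[str, str]] = []
    current = None
    for raw_line in dmidecode_output.splitlines():
        line = raw_line.strip()
        if line == "Memory Device":
            current = {}  # start a new record
            devices.append(current)
        elif current is not None and ": " in line:
            key, value = line.split(": ", 1)
            current[key] = value
    return devices


def total_memory_gb(devices: list[dict[str, str]]) -> int:
    """Sum the exact "Size" field, skipping anything not reported as a whole number of GB."""
    total = 0
    for device in devices:
        amount, _, unit = device.get("Size", "").partition(" ")
        if unit == "GB" and amount.isdigit():
            total += int(amount)
    return total


if __name__ == "__main__":
    modules = parse_memory_devices(SAMPLE)
    print(len(modules), "module(s),", total_memory_gb(modules), "GB total")
    print("Non-volatile size:", modules[0]["Non-Volatile Size"])
```

On a live machine you would feed it the output of `dmidecode --type 17` (run as root). With 256GB installed, and assuming every module matches the 32GB one shown, you would expect eight records, each reporting "Non-Volatile Size: None".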

It is DDR4 memory, with "Configured Memory Speed: 3200 MT/s". We could go into a long-winded description of how that works, but let's keep things simple, and look at one of the best memory sites on the Internet, Crucial.

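The chart at the top of Crucial's page shows that "3200 MT/s" translates into 25600 MB/s of throughput, or about 25.6 GB/s, per module: 3200 million transfers per second, each moving 64 bits (8 bytes), which matches the "Data Width: 64 bits" in the output above.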

That is roughly 47 times faster than our sda's 540MB/s read speed, and more than 40 times faster than the roughly 600MB/s ceiling of any SATA 6Gbps drive, sdb and sdc included.

So SSDs are getting better and faster, but even our SATA SSDs are really slow in comparison.
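The memory figure and the comparisons above are simple arithmetic: transfers per second times bytes per transfer, then divided by each drive's speed. This sketch spells that out; the constant and function names are illustrative, and all figures are theoretical or rated numbers from this article, not measurements.

```python
def ddr_peak_mb_per_s(mega_transfers_per_s: float, data_width_bits: int = 64) -> float:
    """Theoretical peak of one DDR module: transfers per second times bytes per transfer."""
    return mega_transfers_per_s * data_width_bits / 8


DDR4_3200 = ddr_peak_mb_per_s(3200, 64)  # "Data Width: 64 bits" from dmidecode
SDA_READ = 540                           # Micron 5300 PRO rated read, MB/s
SATA3_CEILING = 600                      # SATA 6Gbps after 8b/10b coding, MB/s

if __name__ == "__main__":
    print(f"DDR4-3200 module peak: {DDR4_3200:.0f} MB/s")
    print(f"vs. sda read:          {DDR4_3200 / SDA_READ:.1f}x")
    print(f"vs. any SATA 6Gbps:    {DDR4_3200 / SATA3_CEILING:.1f}x")
```

This is per module; with several memory channels populated, the aggregate theoretical bandwidth is a multiple of this figure, so the gap to SATA is, if anything, understated.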

If you think you can see a pattern here, you're right. First hard drives and now SSDs are getting faster and faster. This Wikipedia page shows how SSD read speeds have evolved from 49.3MB/s in 2007, up to 15GB/s in 2018, a 304 times improvement in 11 years.
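A 304x improvement over 11 years is easier to picture as a compound annual rate. This minimal sketch uses the two read speeds quoted above; the `growth` helper is just an illustrative name.

```python
def growth(start: float, end: float, years: float) -> tuple[float, float]:
    """Return (total improvement factor, compound annual growth rate)."""
    factor = end / start
    annual_rate = factor ** (1 / years) - 1
    return factor, annual_rate


if __name__ == "__main__":
    factor, annual = growth(49.3, 15_000, 2018 - 2007)  # MB/s, 2007 vs. 2018
    print(f"{factor:.0f}x over 11 years, about {annual:.0%} per year")
```

That works out to roughly 68% faster every year, compounded.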

As persistent storage gets faster and faster, people start to run workloads on it that would have been too slow before. Storage that is faster than disk but more affordable than RAM opens a whole new series of doors.

However, to take advantage of these speeds, and the low latency that goes with them, we need two things:

- A connection that can handle the throughput, speed and latency.

- A protocol, or language, that can standardize connection to this kind of storage.

Historically, disks have been connected via various interfaces. For years the most common one has been, and in many cases still is, Serial AT Attachment (SATA).

If you look at the information on our sda, sdb, and sdc drives, you see that they connect via SATA; lshw describes each one as an "ATA Disk" (sda shown here):

```
root@m3-large-x86-01:~# lshw -class disk
  *-disk
       description: ATA Disk
       product: MTFDDAK480TDS
       physical id: 0.0.0
       bus info: scsi@12:0.0.0
       logical name: /dev/sda
       version: J004
       serial: 213230B0EA3F
       size: 447GiB (480GB)
       capacity: 447GiB (480GB)
       configuration: ansiversion=6 logicalsectorsize=512 sectorsize=4096
```

So what do we have?

First, we have faster persistent storage that is getting closer and closer to memory. You could almost call it "persistent memory," but not quite. Well, isn't memory volatile? Doesn't that make this "non-volatile memory"? Yes, it does.

Second, we have a faster and more modern connection to replace the older SATA: PCI Express (PCIe), the same high-speed expansion bus that graphics cards and network cards plug into.
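To get a feel for the difference, compare usable link bandwidth after line coding. This sketch assumes a PCIe 3.0 link with 4 lanes, a common width for enterprise NVMe drives of the Micron 9300's generation (check your drive's data sheet); PCIe 3.0 runs at 8 GT/s per lane with 128b/130b coding. `link_payload_mb_per_s` is a hypothetical helper, and it ignores protocol overhead, so both numbers are upper bounds.

```python
def link_payload_mb_per_s(transfer_rate_gt_s: float, payload_bits: int, coded_bits: int, lanes: int = 1) -> float:
    """Usable bandwidth in MB/s after line coding, ignoring higher-level protocol overhead."""
    return transfer_rate_gt_s * 1e9 * payload_bits / coded_bits * lanes / 8 / 1e6


SATA3 = link_payload_mb_per_s(6, 8, 10)           # SATA 6Gbps: one link, 8b/10b coding
PCIE3_X4 = link_payload_mb_per_s(8, 128, 130, 4)  # PCIe 3.0: 8 GT/s per lane, 128b/130b, 4 lanes

if __name__ == "__main__":
    print(f"SATA 6Gbps:  {SATA3:7.0f} MB/s")
    print(f"PCIe 3.0 x4: {PCIE3_X4:7.0f} MB/s ({PCIE3_X4 / SATA3:.1f}x SATA)")
```

The PCIe link comes out at about 3,938 MB/s, roughly six and a half times the SATA ceiling, with room to grow simply by adding lanes or moving to a newer PCIe generation.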

Last, we need a standard protocol that defines how to talk to these new, faster storage devices (persistent, catching up to memory, sort of non-volatile memory) over these faster buses.

Welcome to NVMe.

What does it mean for you when you see an NVMe drive? Our server from above has two such drives. Let's look at them with the nvme command-line tool:

root@m3-large-x86-01:~# nvme list

Node SN Model Namespace Usage Format FW Rev

--------------------- -------------------- ---------------------------------------- --------- -------------------------- ---------------- --------

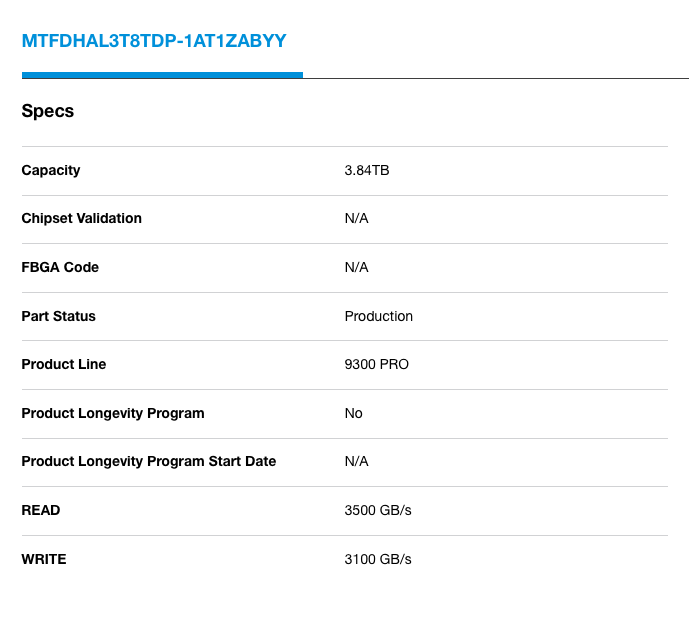

/dev/nvme0n1 213330E6DC09 Micron_9300_MTFDHAL3T8TDP 1 3.84 TB / 3.84 TB 512 B + 0 B 11300DU0

/dev/nvme1n1 213330E6F3FF Micron_9300_MTFDHAL3T8TDP 1 3.84 TB / 3.84 TB 512 B + 0 B 11300DU0

We have two drives, each offering 3.84TB of storage. These are much larger than our other drives: 8 times the 480GB of sda, and 16 times the 240GB of sdb or sdc.

Both drives are the same model, Micron_9300_MTFDHAL3T8TDP.

Its rated read speed is 3500 MB/s, and its rated write speed is 3100 MB/s. In practice you are unlikely to see quite those numbers, but you can see how we are getting closer and closer to memory territory.
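Putting the rated numbers from this article side by side shows how far NVMe closes the gap. These are rated or theoretical figures, not benchmarks, and the constant names are illustrative.

```python
# Rated or theoretical figures from this article, in MB/s (not measurements).
DDR4_3200_PEAK = 3200 * 64 / 8  # one DDR4-3200 module
NVME_READ = 3500                # Micron 9300 (nvme0n1/nvme1n1), rated read
SATA_READ = 540                 # Micron 5300 PRO (sda), rated read

if __name__ == "__main__":
    print(f"NVMe vs. SATA SSD:   {NVME_READ / SATA_READ:.1f}x faster")
    print(f"Memory vs. SATA SSD: {DDR4_3200_PEAK / SATA_READ:.1f}x faster")
    print(f"Memory vs. NVMe SSD: {DDR4_3200_PEAK / NVME_READ:.1f}x faster")
```

Memory's lead shrinks from about 47x over the SATA drive to about 7x over the NVMe drive.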
