Choosing a CNI for Kubernetes

Make selecting a Container Network Interface for your next cluster an easier choice.

Running your own Kubernetes cluster on Equinix Metal can be a fun but challenging endeavor. Even after you’ve written all your Infrastructure as Code and run kubeadm init on your first device, you’re presented with a choice: which Container Network Interface (CNI) should you adopt?

Fortunately and unfortunately, Kubernetes isn’t too opinionated, and decision fatigue can set in very quickly. In this article, we’ll go over some of your available choices and present some pros and cons of each.

Calico

Calico is an open source CNI implementation designed to provide a performant and flexible system for configuring and administering Kubernetes networking. Calico can route pod traffic natively using BGP, or encapsulate it in an overlay network using IP-in-IP or VXLAN. In a bare metal environment, avoiding the overlay network and using BGP increases network performance and makes debugging easier, as you don’t need to unpeel the onion of encapsulation.

While Calico is a fantastic option, we do feel that there’s a better choice.

Cilium

Cilium is an open source CNI implementation designed and built around eBPF and XDP, rather than traditional networking patterns with iptables. Much like Calico, Cilium can run using VXLAN or BGP. Cilium used to rely on MetalLB to power its BGP features, but since Cilium 1.12 it has been possible to use its own implementation built on GoBGP.

eBPF and XDP

eBPF is a relatively new technology that lets sandboxed programs run inside the Linux kernel. These programs allow code written in user land to run within the kernel with very little overhead, extending the capabilities of the kernel.

XDP leverages eBPF to provide a highly performant packet processing pipeline that runs as soon as the networking driver receives the packet. That means that with XDP, Cilium can help mitigate DDoS attacks by dropping packets before they even hit the traditional networking stack.

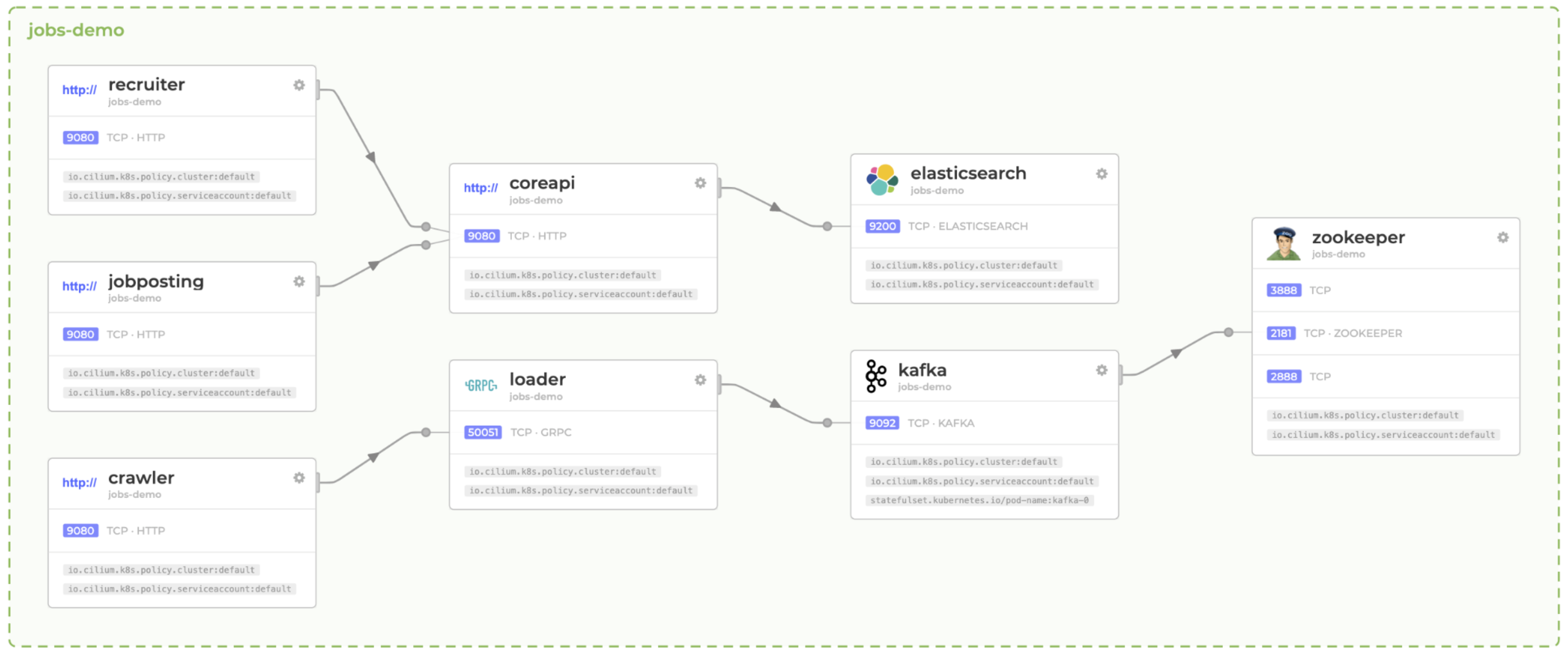

You may think that using these new technologies means all your previous experience of debugging the network is useless. However, Cilium is accompanied by Hubble.

Hubble is an observability platform that builds on top of eBPF and Cilium to give teams visibility into the networking stack. Because Hubble understands Cilium and Kubernetes networking policies as well as L4 and L7 traffic, it can help you more easily debug issues with your network.

Cilium stands out among CNIs for its L7-aware policies. This means you can write networking policies that understand DNS, HTTP, and even Kafka.

Take a look at a few examples. You can write a DNS L7 networking policy to restrict DNS resolution to a subset of domains:

    apiVersion: cilium.io/v2
    kind: CiliumClusterwideNetworkPolicy
    metadata:
      name: dns-allow-list
    spec:
      endpointSelector: {}
      egress:
        - toEndpoints:
            - matchLabels:
                io.kubernetes.pod.namespace: kube-system
                k8s-app: kube-dns
          toPorts:
            - ports:
                - port: "53"
                  protocol: UDP
              rules:
                dns:
                  - matchPattern: "*.abc.xyz"

This example allows HTTP POST requests to hosts matching *.abc.xyz:

    apiVersion: cilium.io/v2
    kind: CiliumNetworkPolicy
    metadata:
      name: http-post-abc-xyz
    spec:
      endpointSelector: {}
      egress:
        # toPorts and toFQDNs sit in the same rule, so traffic must match both.
        - toPorts:
            - ports:
                - port: "443"
                  protocol: TCP
              rules:
                http:
                  - method: POST
          toFQDNs:
            - matchPattern: "*.abc.xyz"

Typically, we’re forced to write networking policies like "allow any application with the label kafka-consumer to speak to Kafka". This casts a rather wide net. With L7 policies, you can limit access to individual topics depending on the labels. As such, you can say that only the "beer-brewer" role can publish to the "hops" topic.

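    apiVersion: cilium.io/v2
    kind: CiliumNetworkPolicy
    metadata:
      name: beer-brewers
    spec:
      # Assumed label for the Kafka broker pods; adjust to match your deployment.
      endpointSelector:
        matchLabels:
          app: kafka
      ingress:
        - fromEndpoints:
            - matchLabels:
                role: beer-brewer
          toPorts:
            - ports:
                - port: "9092"
                  protocol: TCP
              rules:
                kafka:
                  - role: produce
                    topic: hops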

Developer Experience

These network policies can be visualised, modified, and constructed through a visual builder. You can try out the Cilium Editor for yourself.

Installing Cilium

Cilium is installable as a Helm chart. First, make the repository available:

helm repo add cilium https://helm.cilium.io/

Then tweak the default values for your installation.

CIDRs

As with all CNI implementations, you’ll need to carve out your service and pod CIDRs.

Equinix Metal uses 10.x.x.x for its private network, so it’s usually best to split up 192.168.0.0/16.

If you require more address space than this, you can investigate Equinix Metal’s VLAN support.

IPAM Mode

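Cilium has a few different modes to manage IPAM. The settings below use cluster-pool mode, where the Cilium operator carves a per-node block of the given mask size out of the pod CIDR.

    --set ipam.mode=cluster-pool
    --set ipam.operator.clusterPoolIPv4PodCIDR=192.168.0.0/16
    --set ipam.operator.clusterPoolIPv4MaskSize=23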
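To get a feel for what these numbers mean, the arithmetic can be sketched in a few lines of shell. This is a rough illustration, assuming each node receives a single block of the configured mask size. The variable names are made up for the example, and the per-node figure is best read as an upper bound on pod IPs.

```shell
# Sketch: how a pod CIDR is divided into per-node blocks in cluster-pool mode.
pool_prefix=16   # prefix length of the 192.168.0.0/16 pod CIDR
node_prefix=23   # the configured per-node mask size

# Each node gets one /23, so the pool holds 2^(23-16) node blocks.
node_blocks=$(( 1 << (node_prefix - pool_prefix) ))

# Each /23 block spans 2^(32-23) addresses.
addrs_per_node=$(( 1 << (32 - node_prefix) ))

echo "node blocks in pool: ${node_blocks}"
echo "addresses per node:  ${addrs_per_node}"
```

With these values the pool covers 128 nodes at 512 addresses each. A larger mask size trades pods per node for more nodes.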

Cilium also has a preview feature where the IPAM mode can be set to cluster-pool-v2beta, which allows for dynamic, resource-usage based allocation of node CIDRs.

Kube Proxy

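Cilium’s use of eBPF and XDP means you’re not relying on iptables, so you can disable kube-proxy altogether. You’ll need to do this in two places: skip the kube-proxy addon when running kubeadm (for example, kubeadm init --skip-phases=addon/kube-proxy), and enable the replacement in Cilium’s Helm values.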

--set kubeProxyReplacement=probe
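On the kubeadm side, one way to avoid installing kube-proxy in the first place is to skip its addon phase during init. The sketch below wraps the two commands in shell functions so nothing runs until you call them. The function names are illustrative and not part of any tool, and the status check assumes the Cilium agent runs as the cilium DaemonSet in kube-system.

```shell
# Bootstrap the control plane without the kube-proxy addon (run on the first node).
init_without_kube_proxy() {
  kubeadm init --skip-phases=addon/kube-proxy
}

# Once Cilium is installed, ask the agent which kube-proxy replacement mode is active.
check_kube_proxy_replacement() {
  kubectl -n kube-system exec ds/cilium -- cilium status | grep KubeProxyReplacement
}
```

Call init_without_kube_proxy before installing Cilium, and check_kube_proxy_replacement afterwards to confirm the agent has taken over service handling.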

Native Routing

As discussed above, Cilium doesn’t need encapsulation to handle the routing of packets within the cluster, so be sure to set the native routing CIDR.

    --set ipv4NativeRoutingCIDR=192.168.0.0/16

Hubble

Hubble’s observability is a big part of Cilium’s appeal, so make sure to enable it.

    --set hubble.relay.enabled=true
    --set hubble.enabled=true
    --set hubble.listenAddress=":4244"
    --set hubble.ui.enabled=true
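Once Hubble is running, a quick way to see it in action is to forward the relay to your workstation and watch for dropped flows. This is a sketch under a few assumptions: the hubble CLI is installed locally, the relay is exposed as the hubble-relay service on port 80 in kube-system, and the function names are invented for this example.

```shell
# Forward the Hubble Relay service to localhost:4245 (stays in the foreground).
hubble_forward() {
  kubectl -n kube-system port-forward svc/hubble-relay 4245:80
}

# In a second terminal: list flows that were dropped, for example by a network policy.
hubble_dropped_flows() {
  hubble observe --server localhost:4245 --verdict DROPPED
}
```

Dropped flows are usually the first thing to check when a new network policy blocks more traffic than intended.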

Complete Install

The complete Cilium install looks like this:

    helm repo add cilium https://helm.cilium.io/

    helm upgrade --install cilium cilium/cilium \
      --version 1.13.4 \
      --namespace kube-system \
      --set image.repository=quay.io/cilium/cilium \
      --set ipam.mode=cluster-pool \
      --set ipam.operator.clusterPoolIPv4PodCIDR=192.168.0.0/16 \
      --set ipam.operator.clusterPoolIPv4MaskSize=23 \
      --set ipv4NativeRoutingCIDR=192.168.0.0/16 \
      --set endpointRoutes.enabled=true \
      --set hubble.relay.enabled=true \
      --set hubble.enabled=true \
      --set hubble.listenAddress=":4244" \
      --set hubble.ui.enabled=true \
      --set kubeProxyReplacement=probe \
      --set k8sServiceHost=${PUBLIC_IPv4} \
      --set k8sServicePort=6443
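Long chains of --set flags are easy to mistype, so it can help to keep the same settings in a values file under version control. The following is a sketch, assuming the Cilium 1.13 chart uses these key names without a global. prefix. The file name cilium-values.yaml is arbitrary, and the helm command is left as a comment so the snippet only writes the file.

```shell
values_file="cilium-values.yaml"   # arbitrary file name for this example

# Write the Helm values; the quoted 'EOF' keeps the shell from expanding anything inside.
cat > "${values_file}" <<'EOF'
image:
  repository: quay.io/cilium/cilium
ipam:
  mode: cluster-pool
  operator:
    clusterPoolIPv4PodCIDR: 192.168.0.0/16
    clusterPoolIPv4MaskSize: 23
ipv4NativeRoutingCIDR: 192.168.0.0/16
endpointRoutes:
  enabled: true
hubble:
  enabled: true
  listenAddress: ":4244"
  relay:
    enabled: true
  ui:
    enabled: true
kubeProxyReplacement: probe
k8sServicePort: 6443
EOF

echo "wrote ${values_file}"

# Then install with the file, passing the API server address separately:
# helm upgrade --install cilium cilium/cilium \
#   --version 1.13.4 --namespace kube-system \
#   -f cilium-values.yaml \
#   --set k8sServiceHost="${PUBLIC_IPv4}"
```

Keeping the API server address out of the file lets the same values be reused across clusters.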

Conclusion

Cilium might be much newer to the Kubernetes CNI landscape, but in its short time it has become the gold standard for Kubernetes networking. While Calico is also a great option, Cilium’s adoption of eBPF and XDP provides a future-facing solution, enriched with the best debugging tool available (Hubble) and the best developer experience, with the assistance of the Cilium Editor.
