How Load Balancers Differ From Reverse Proxies, and When to Use Each

Reverse proxies can balance load, but they do much more than that, and there are often good reasons to use both.

Two components play a vital role in web traffic management: reverse proxies and load balancers. While each serves a different purpose, they work together to ensure smooth, secure and efficient data flow in a client-server architecture.

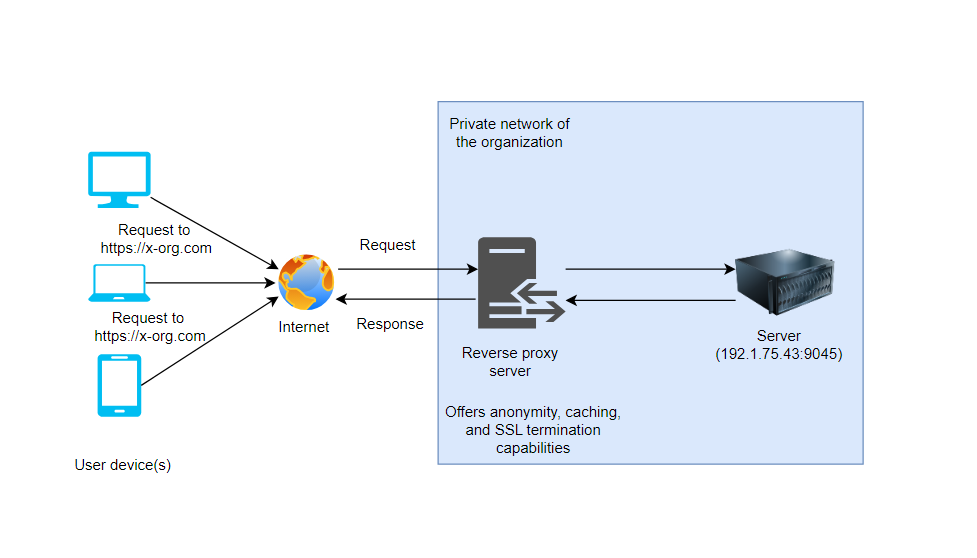

A reverse proxy is a server that sits between clients and backend servers. It's typically placed behind the firewall at the edge of a private network, where it receives incoming client requests and distributes them to the various backend servers on that network. Load balancing is one of the benefits of this intermediary service, alongside improved security, content caching and Secure Sockets Layer (SSL) encryption handling.

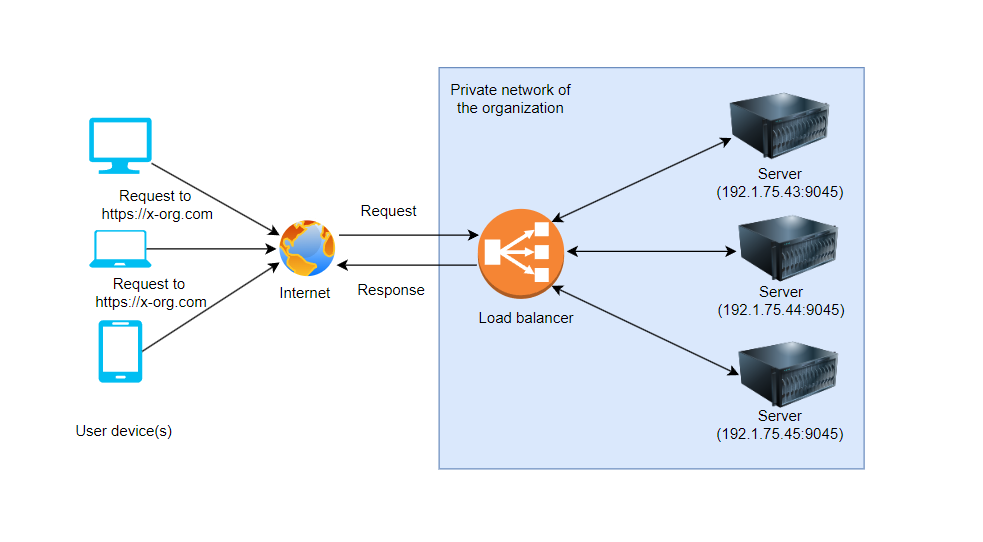

A dedicated load balancer, meanwhile, distributes traffic across multiple servers solely to ensure a smooth flow of traffic and prevent any single server from being overwhelmed. Its primary role is to enhance the overall performance of applications, ensure their reliability and scale their capacity to handle traffic demands.

Reverse proxies and load balancers have overlapping functionality, which can make it hard to decide when to use each. In this article, you'll learn how reverse proxies and load balancers work, what benefits they offer and where each fits best, so that you can make an informed decision when building your web application.

Understanding Reverse Proxies

As an intermediary between client devices and web servers, a reverse proxy performs several key functions that enhance the security and performance of web services. When the reverse proxy receives a client request, it decides whether to forward the request to a backend server or return cached content. When forwarding the request, it uses preconfigured routing and load-balancing rules to choose which backend server receives it. Before returning the response to the client, whether cached or received from a backend server, the reverse proxy can perform additional operations, such as compressing the response body or rewriting response headers. The client then receives this response from the reverse proxy.
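To make that flow concrete, here is a minimal Python sketch that simulates it in memory rather than over the network. The class name `ReverseProxy`, the function-based backends and the `X-Cache` header are illustrative assumptions, not any particular product's behavior; the routing rule is plain round-robin and only GET responses are cached.

```python
from itertools import cycle
from typing import Callable, Dict, List, Tuple

# Hypothetical stand-in for a backend server: takes a path, returns (headers, body).
Response = Tuple[Dict[str, str], str]
Backend = Callable[[str], Response]


class ReverseProxy:
    """In-memory simulation of the reverse proxy request flow (not a network server)."""

    def __init__(self, backends: List[Backend]) -> None:
        self._next_backend = cycle(backends)   # routing rule: plain round-robin
        self._cache: Dict[str, Response] = {}  # path -> already rewritten response

    def handle(self, method: str, path: str) -> Response:
        # 1. Return cached content when available (only GET requests are cached here).
        if method == "GET" and path in self._cache:
            headers, body = self._cache[path]
            return {**headers, "X-Cache": "HIT"}, body

        # 2. Otherwise forward the request to the backend chosen by the routing rule.
        headers, body = next(self._next_backend)(path)

        # 3. Post-process before replying: drop the Server header so clients
        #    do not learn which backend software produced the response.
        headers = {k: v for k, v in headers.items() if k.lower() != "server"}
        if method == "GET":
            self._cache[path] = (headers, body)
        return {**headers, "X-Cache": "MISS"}, body


# Usage with two hypothetical backends.
def backend_a(path: str) -> Response:
    return {"Server": "app-a", "Content-Type": "text/plain"}, f"A served {path}"


def backend_b(path: str) -> Response:
    return {"Server": "app-b", "Content-Type": "text/plain"}, f"B served {path}"


proxy = ReverseProxy([backend_a, backend_b])
print(proxy.handle("GET", "/products/1"))  # MISS, forwarded to backend_a
print(proxy.handle("GET", "/products/1"))  # HIT, no backend is contacted
print(proxy.handle("GET", "/products/2"))  # MISS, forwarded to backend_b
```

Note that the cached copy is stored after the header rewrite, so cache hits never leak the backend's `Server` header either.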

The following are some of the main advantages of reverse proxies.

Provides Anonymity and Security

One of the primary advantages of a reverse proxy is the anonymity it provides. By acting as an intermediary, the reverse proxy hides the IP address and identity of the web server from the client. This makes it more difficult for malicious actors to target the web server directly.

Caches Content

Reverse proxies can store (cache) web server responses that change infrequently. When a client requests the same content again, the reverse proxy can deliver it directly from its cache without forwarding the request to the web server. This reduces the load on the web server and shortens response times for the client, improving website performance.

Manages SSL Encryption

Reverse proxies can manage SSL encryption, offloading this CPU-intensive task from web servers. When a client makes a secure (HTTPS) request, the reverse proxy decrypts the request, forwards it to the web server in plain text, receives the response, encrypts it and sends it back to the client. This process, known as SSL termination, frees up resources on the web servers and keeps certificate management in one place. Because traffic between the proxy and the web servers is unencrypted, it should travel only over a trusted private network.

Common Reverse Proxy Use Cases

There are several scenarios where you might need an intermediary service, such as a reverse proxy or a load balancer. The following are some use cases where a reverse proxy is best suited.

Handling High Traffic on E-commerce Websites

One of the most common use cases for reverse proxies is managing high traffic on e-commerce websites. Suppose you're working with an e-commerce website that receives thousands of requests per minute. The server has to process each request and return information such as product details and images. Many of these requests probably query data that is updated infrequently. For example, product descriptions and photos rarely change, and even when they do, clients don't need to receive the updates immediately. Consider caching this kind of data to avoid overloading your server.

A reverse proxy is well suited to this case. It provides flexible caching capabilities that you can use to cache relatively static data. When you configure caching rules in your reverse proxy, you can choose which requests to cache based on the HTTP method, request path and file type. In an e-commerce context, this lets you define different caching rules for product descriptions and for product reviews. You can also configure how long cached content stays valid before it is refreshed. For example, you'd probably want to refresh cached product reviews every few hours, while product descriptions and images can be cached for days or months.

With well-tuned caching rules, the load on your backend servers can drop significantly.
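Caching rules like these can be sketched as a table of HTTP method, path pattern and time-to-live (TTL). The paths, TTL values and the `CacheRule`, `ttl_for` and `TTLCache` names below are hypothetical; real reverse proxies express such rules in their own configuration syntax. The current time is passed in explicitly to keep the sketch easy to test.

```python
import fnmatch
from dataclasses import dataclass
from typing import Dict, Optional, Tuple

HOUR = 3600
DAY = 24 * HOUR


@dataclass(frozen=True)
class CacheRule:
    method: str       # HTTP method the rule applies to
    path_glob: str    # shell-style pattern matched against the request path
    ttl_seconds: int  # how long a cached response stays fresh


# Hypothetical rules mirroring the e-commerce example: reviews change more
# often than descriptions and images, so they get a shorter TTL.
RULES = [
    CacheRule("GET", "/products/*/reviews", 3 * HOUR),
    CacheRule("GET", "/products/*/description", 7 * DAY),
    CacheRule("GET", "/images/*.jpg", 30 * DAY),
]


def ttl_for(method: str, path: str) -> Optional[int]:
    """TTL of the first matching rule, or None if the request must not be cached."""
    for rule in RULES:
        if rule.method == method and fnmatch.fnmatchcase(path, rule.path_glob):
            return rule.ttl_seconds
    return None


class TTLCache:
    def __init__(self) -> None:
        # (method, path) -> (expires_at, body)
        self._entries: Dict[Tuple[str, str], Tuple[float, str]] = {}

    def get(self, method: str, path: str, now: float) -> Optional[str]:
        entry = self._entries.get((method, path))
        if entry is None or entry[0] <= now:
            return None  # missing or expired: the proxy must refresh from the backend
        return entry[1]

    def put(self, method: str, path: str, body: str, now: float) -> None:
        ttl = ttl_for(method, path)
        if ttl is not None:  # uncacheable responses are simply not stored
            self._entries[(method, path)] = (now + ttl, body)
```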

Obfuscating Servers and Protecting Sensitive Data

Reverse proxies can act as a gateway to your organization's internal services. For example, an online banking platform might have several servers that manage different sections of an online portal. If the portal called each of these services directly, clients could easily see the DNS names and IP addresses of those services. With enough time and effort, a malicious client could then probe these servers and gain access to unauthorized data, such as transaction histories or account balances.

A reverse proxy mitigates this risk by letting the portal call a single endpoint to access all these services. This hides the internal servers' details, since the client can only see the reverse proxy's DNS name and IP address. As a result, it's harder for the client to probe the internal servers and access unauthorized data. Your backend servers should also sit on a private network that is not reachable from the internet. In such a setup, the reverse proxy sits at the edge of the private network, behind a firewall, and provides controlled access to the internal servers from the internet.
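The single-endpoint pattern boils down to a routing table kept inside the proxy. In this minimal sketch, the path prefixes and the private `10.0.1.x` addresses are hypothetical, and `upstream_url` is an illustrative name:

```python
from typing import Dict, Optional

# Hypothetical private addresses; clients only ever see the proxy's public endpoint.
ROUTES: Dict[str, str] = {
    "/accounts/": "http://10.0.1.10:8080",
    "/transactions/": "http://10.0.1.20:8080",
    "/balances/": "http://10.0.1.30:8080",
}


def upstream_url(path: str) -> Optional[str]:
    """Map a public request path to an internal URL, or None to reject the request."""
    # Check longer prefixes first so more specific routes win.
    for prefix in sorted(ROUTES, key=len, reverse=True):
        if path.startswith(prefix):
            return ROUTES[prefix] + path
    return None
```

Requests that match no route are rejected rather than forwarded, so internal services that aren't explicitly exposed stay unreachable.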

Distributing Traffic Evenly across Servers

Some reverse proxies, such as NGINX, offer load-balancing features that help distribute traffic evenly across servers. For example, a popular news website might receive millions of requests during peak hours, which can be challenging for a single server to handle. An overloaded server degrades the user experience: articles take longer to load, and features such as commenting on or reacting to articles might become unavailable.

By deploying a reverse proxy with load-balancing capabilities, you can run the same website backend on several servers and add them to a server pool. When the reverse proxy receives a request, it can send it to any server in the pool, reducing the risk of a single server becoming a bottleneck. Reverse proxies can distribute traffic based on request data such as headers, user sessions and IP addresses, which makes them a good fit for straightforward load-balancing requirements. However, you might need a dedicated load balancer if you handle very high traffic volumes or need advanced routing options.
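A server pool with round-robin selection might look like the following sketch. The `ServerPool` class and the `web-N` server names are hypothetical; the lock is there because a real proxy handles requests concurrently, and `add`/`remove` let the pool change while traffic flows.

```python
import threading
from typing import List


class ServerPool:
    """Round-robin pool whose membership can change at runtime (a minimal sketch)."""

    def __init__(self, servers: List[str]) -> None:
        self._servers = list(servers)
        self._index = 0
        self._lock = threading.Lock()  # requests may arrive on many threads

    def add(self, server: str) -> None:
        with self._lock:
            self._servers.append(server)

    def remove(self, server: str) -> None:
        with self._lock:
            self._servers.remove(server)

    def next_server(self) -> str:
        with self._lock:
            if not self._servers:
                raise RuntimeError("no servers in pool")
            # Wrap around the current list, so added or removed servers take effect immediately.
            server = self._servers[self._index % len(self._servers)]
            self._index += 1
            return server


pool = ServerPool(["web-1", "web-2", "web-3"])
print([pool.next_server() for _ in range(4)])  # web-1, web-2, web-3, web-1
```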

Understanding Load Balancers

A load balancer acts as a central entry point for client requests to a particular backend service. It can use one of several algorithms to distribute requests efficiently across a pool of servers running the same application, ensuring optimal performance and resource utilization. Each algorithm has its own benefits and drawbacks, so consider your web application's functionality and requirements before choosing one.

Load balancers can operate at different layers of the OSI model. At the lowest level, L2 load balancers operate at the data link layer. They use MAC addresses, Ethernet frame headers and VLAN tags to distribute traffic within a single network, such as a data center.

L3 load balancers operate at the network layer, Layer 3 of the OSI model. They can operate globally, routing traffic to servers in data centers around the world. When an L3 load balancer receives a request, it considers the user's distance to each server and the servers' health to decide where to route it. If a server or an entire geographic region goes down, it can route traffic to a different region.
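The routing decision described above, nearest healthy region first, can be sketched with the haversine great-circle distance formula. The region names and coordinates are hypothetical, and the sketch assumes the client's location is already known (for example, from IP geolocation), which real global load balancers determine in their own ways.

```python
import math
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Region:
    name: str
    lat: float
    lon: float
    healthy: bool = True


def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance between two points on Earth, in kilometers."""
    r = 6371.0  # mean Earth radius in km
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dp = p2 - p1
    dl = math.radians(lon2 - lon1)
    a = math.sin(dp / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dl / 2) ** 2
    return 2 * r * math.asin(math.sqrt(a))


def pick_region(client_lat: float, client_lon: float, regions: List[Region]) -> Optional[Region]:
    """Return the closest healthy region, or None if every region is down."""
    healthy = [r for r in regions if r.healthy]
    if not healthy:
        return None
    return min(healthy, key=lambda r: haversine_km(client_lat, client_lon, r.lat, r.lon))


regions = [
    Region("frankfurt", 50.11, 8.68),
    Region("virginia", 38.95, -77.45),
    Region("singapore", 1.35, 103.82),
]
print(pick_region(48.86, 2.35, regions).name)  # a client in Paris goes to frankfurt
regions[0].healthy = False
print(pick_region(48.86, 2.35, regions).name)  # failover to the next closest: virginia
```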

L4 load balancers operate at the transport layer and distribute load based on source and destination IP addresses and ports (where the request comes from and which service it's intended for). This type of load balancing is often referred to as transport-level or Layer 4 load balancing.

Load balancers operating at the application layer are called L7 load balancers. They can make routing decisions based on the content of the message, such as HTTP headers, the SSL session ID or the message body. This allows for more sophisticated load-balancing strategies, such as routing web traffic based on the requested URL or content type. This type of load balancing is often referred to as application-level or Layer 7 load balancing.
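The difference between the two layers comes down to what the load balancer can see. This hypothetical sketch contrasts them: the L4 function only has addresses and ports, so it hashes the connection tuple, while the L7 function parses the request and routes by path or `Accept` header. The pool names and routing rules are illustrative assumptions.

```python
import hashlib
from typing import Dict, List


def _bucket(key: str, n: int) -> int:
    """Stable hash of a string into one of n buckets."""
    return int.from_bytes(hashlib.sha256(key.encode()).digest()[:8], "big") % n


def l4_pick(src_ip: str, src_port: int, dst_ip: str, dst_port: int, servers: List[str]) -> str:
    """Layer 4: only addresses and ports are visible, so hash the connection tuple."""
    return servers[_bucket(f"{src_ip}:{src_port}->{dst_ip}:{dst_port}", len(servers))]


def l7_pick(path: str, headers: Dict[str, str], pools: Dict[str, List[str]]) -> str:
    """Layer 7: the HTTP request is parsed, so routing can depend on its content."""
    if path.startswith("/api/"):
        pool = pools["api"]
    elif headers.get("Accept", "").startswith("image/"):
        pool = pools["images"]
    else:
        pool = pools["web"]
    # Within the chosen pool, spread requests with a stable hash of the path.
    return pool[_bucket(path, len(pool))]
```

An L4 balancer has no way to make the path-based choice without parsing HTTP, which is precisely what makes the second function Layer 7.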

The following are a few of the main advantages of load balancers.

Distribution of Client Requests

Load balancers distribute client requests using different algorithms. For simple traffic distribution, you can configure the load balancer to use the Round-Robin, Weighted Round-Robin or Randomized algorithms. These algorithms distribute traffic without checking server utilization. Some scenarios require more sophisticated algorithms. The IP Hashing algorithm sends a particular client's requests to the same server every time, providing session persistence. The Least Connections, Weighted Least Connections, Least Bandwidth, Least Packets and Least Response Time algorithms distribute traffic dynamically based on each server's current performance and utilization. Whichever algorithm you use, load balancers can detect when a server goes down and reroute traffic to the remaining online servers in the pool.

All these algorithms aim to make the best use of resources, increase processing capacity, reduce response times and prevent any single resource from becoming overwhelmed.
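Two of the algorithms named above can be sketched in a few lines each. The weighted round-robin below is the "smooth" variant, which interleaves picks instead of sending a heavy server a long burst of consecutive requests; it is one common way to implement Weighted Round-Robin, not the only one. The class names and server names are hypothetical.

```python
from typing import Dict, List


class SmoothWeightedRoundRobin:
    """Weighted round-robin that spreads a heavy server's turns across the cycle."""

    def __init__(self, weights: Dict[str, int]) -> None:
        self.weights = dict(weights)
        self.current = {s: 0 for s in weights}
        self.total = sum(weights.values())

    def next_server(self) -> str:
        # Raise every server's running score by its weight, pick the highest
        # (first one wins ties), then charge the winner the total weight.
        for s, w in self.weights.items():
            self.current[s] += w
        best = max(self.current, key=lambda s: self.current[s])
        self.current[best] -= self.total
        return best


class LeastConnections:
    """Send each new request to the server with the fewest in-flight requests."""

    def __init__(self, servers: List[str]) -> None:
        self.active = {s: 0 for s in servers}

    def acquire(self) -> str:
        server = min(self.active, key=lambda s: self.active[s])
        self.active[server] += 1
        return server

    def release(self, server: str) -> None:
        # Call when the request finishes so the count reflects current load.
        self.active[server] -= 1
```

With weights of 5, 1 and 1, seven consecutive picks from `SmoothWeightedRoundRobin({"big": 5, "small-1": 1, "small-2": 1})` return `big, big, small-1, big, small-2, big, big`: the heavy server gets five of every seven requests, interleaved with the smaller servers.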

Increased Application Availability and Reliability

Load balancers can monitor connections to servers so they can route each request to the server with the fewest connections, lowest bandwidth usage or lowest response time. They can also track server health so that requests go only to servers that can respond promptly. Depending on the product, this works through periodic health checks that the load balancer sends to each server, through resource utilization statistics that the servers publish, or both. If a server in the pool can't be reached, or its health checks indicate that it's unavailable, the load balancer diverts requests to the next suitable server in the pool, improving the availability and reliability of applications.
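Health tracking can be sketched as follows. This is an illustration built on assumptions: the probe is a hypothetical callable injected from outside (in practice, perhaps an HTTP request to a status endpoint), and the thresholds `down_after` and `up_after`, which stop a single slow response from flapping a server in and out of the pool, are illustrative defaults.

```python
from typing import Callable, Dict, List


class HealthChecker:
    """Marks servers up or down from repeated probe results (a minimal sketch)."""

    def __init__(self, servers: List[str], probe: Callable[[str], bool],
                 down_after: int = 3, up_after: int = 2) -> None:
        self.probe = probe            # hypothetical: e.g. GET /health, True on a timely 200
        self.down_after = down_after  # consecutive failures before marking a server down
        self.up_after = up_after      # consecutive successes before marking it up again
        self.healthy: Dict[str, bool] = {s: True for s in servers}
        self._streak: Dict[str, int] = {s: 0 for s in servers}

    def run_once(self) -> None:
        """Probe every server once; a real load balancer would call this on a timer."""
        for server, is_up in list(self.healthy.items()):
            ok = self.probe(server)
            if ok == is_up:
                self._streak[server] = 0  # result agrees with the current state
                continue
            self._streak[server] += 1
            if self._streak[server] >= (self.up_after if ok else self.down_after):
                self.healthy[server] = ok
                self._streak[server] = 0

    def available(self) -> List[str]:
        """Servers that should currently receive traffic."""
        return [s for s, up in self.healthy.items() if up]
```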

Scalability and Flexibility

Load balancers make it easy to handle growing workloads by adding servers to the pool as demand increases. This scalability lets businesses manage traffic demands efficiently and maintain application performance during peak times. During low-traffic periods, you can shrink the pool, even down to a single server, to avoid paying for unused server resources.
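Deciding how big the pool should be is simple arithmetic: divide the expected request rate by what one server can handle, leaving some headroom. The function below is a hypothetical sketch; the 70% target utilization and the pool limits are assumptions you would tune from your own load tests.

```python
import math


def desired_pool_size(requests_per_second: float, capacity_per_server: float,
                      target_utilization: float = 0.7, min_servers: int = 1,
                      max_servers: int = 20) -> int:
    """Servers needed so each runs at or below target_utilization (hypothetical inputs)."""
    effective = capacity_per_server * target_utilization  # leave headroom for spikes
    needed = math.ceil(requests_per_second / effective)
    return max(min_servers, min(max_servers, needed))
```

For example, at 5,000 requests per second with 500 requests per second per server, the effective capacity per server is 350, so the pool needs 15 servers; at 200 requests per second, one server suffices. Setting `min_servers=2` keeps a spare for failover during quiet hours at the cost of one mostly idle server.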

Common Load Balancer Use Cases

Depending on your use case, a load balancer might be more suitable than a reverse proxy. Below are some such cases.

Handling High-Traffic Websites and Applications

Load balancers are essential for managing high-traffic websites and applications. Consider a video streaming site that receives over a million views and over 100,000 unique visitors per month, with bandwidth usage exceeding 1,000 GB per month. A single server could struggle to handle this volume of traffic, especially during peaks. If you're serving such volumes across several regions, a reverse proxy with load-balancing capabilities might not be enough.

On the other hand, a dedicated load balancer can handle such volumes and distribute incoming requests across multiple servers, ensuring smooth operation even during peak traffic times. The type of load balancer you implement will depend on the nature of your incoming traffic. An L3 load balancer is an ideal solution if you have a global application spread across multiple geographic regions. If you're only distributing content in a single region or hosting servers in a single geographic area, you could use an L4 or L7 load balancer.

Failover Protection in High-Availability Setups

In high-availability setups, where downtime can result in significant revenue loss or damage to reputation, load balancers provide failover protection. If one server fails, the load balancer automatically redirects traffic to the remaining operational servers. For instance, an online banking system might have a critical service that should never fail. The bank can set up several servers with the same application and place them behind a load balancer. The load balancer monitors each server and automatically redirects requests to a working server in the pool if another fails.

In such cases, you might use a load-balancing algorithm such as Least Connections, Weighted Least Connections, Least Bandwidth, Least Packets or Least Response Time, which monitors each server and sends requests to the server with the best metrics.
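As an illustration of one of these, Least Response Time can be sketched with an exponentially weighted moving average of observed latencies. The smoothing factor `alpha` and the class name are hypothetical, and real products measure and weigh response times in their own ways. A server that fails outright would normally be removed by health checks rather than by this average.

```python
from typing import Dict, List, Optional


class LeastResponseTime:
    """Route each request to the server with the lowest smoothed response time."""

    def __init__(self, servers: List[str], alpha: float = 0.3) -> None:
        self.alpha = alpha  # weight of the newest sample; hypothetical default
        self.avg_ms: Dict[str, Optional[float]] = {s: None for s in servers}

    def pick(self) -> str:
        # Servers with no measurements yet are tried first so every server gets sampled.
        for server, avg in self.avg_ms.items():
            if avg is None:
                return server
        return min(self.avg_ms, key=lambda s: self.avg_ms[s])

    def record(self, server: str, elapsed_ms: float) -> None:
        """Fold a newly observed response time into the server's moving average."""
        old = self.avg_ms[server]
        if old is None:
            self.avg_ms[server] = elapsed_ms
        else:
            # Exponentially weighted moving average: recent samples count more,
            # so a server that slows down quickly loses traffic.
            self.avg_ms[server] = self.alpha * elapsed_ms + (1 - self.alpha) * old
```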

Session Persistence

Session persistence, also known as sticky sessions, is another common use case for load balancers. In an e-commerce scenario, a customer's shopping cart must persist across multiple requests. If the load balancer doesn't support session persistence, the customer could lose their shopping cart contents when their requests get routed to a different server.

Load balancers can implement session persistence by sending all requests from a client to the same server for the duration of a session. One way to do this is the IP Hashing algorithm, which hashes the client's source IP address and uses the result to route all requests from that IP address to the same server in the pool. If you're using an L7 load balancer, which can inspect application data, you can instead use session information in each request, such as a session cookie, to keep a user's requests on the same server for the duration of their authenticated session.
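Both approaches can be sketched briefly. The cookie name `lb_server` is hypothetical, as is storing the server name in it; hashing a session ID is another option. The sketch uses `hashlib` rather than Python's built-in `hash()`, because string hashing with `hash()` is randomized per process, so separate load balancer instances would disagree about where a client belongs.

```python
import hashlib
from typing import Dict, List

SESSION_COOKIE = "lb_server"  # hypothetical cookie naming the pinned server


def ip_hash(client_ip: str, servers: List[str]) -> str:
    """Same IP, same server, as long as the pool does not change."""
    digest = hashlib.sha256(client_ip.encode()).digest()
    return servers[int.from_bytes(digest[:8], "big") % len(servers)]


def sticky_pick(client_ip: str, cookies: Dict[str, str], servers: List[str]) -> str:
    """L7 variant: prefer the server recorded in a cookie, fall back to IP hashing."""
    pinned = cookies.get(SESSION_COOKIE)
    if pinned is not None and pinned in servers:
        return pinned
    # No cookie yet, or the pinned server left the pool: choose by IP hash.
    return ip_hash(client_ip, servers)
```

With modulo hashing, adding or removing a server remaps most clients to different servers; consistent hashing is a common technique for reducing that churn.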

Application Deployment in Multicloud Environments

Finally, load balancers play a crucial role in multicloud environments. Businesses often deploy applications across multiple cloud platforms to avoid vendor lock-in, increase resilience and optimize costs. If a single cloud provider goes down, client requests can simply be redirected to another cloud provider hosting the same application. To achieve this, you need a load balancer that's configured to distribute traffic to a server pool with servers on different clouds.
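A priority-based failover pool spanning providers might look like this sketch. The provider names, server names and the `MultiCloudPool` class are hypothetical; the `down` set stands in for the results of health checks, and traffic stays on the first-listed cloud until all of its servers are down.

```python
from itertools import count
from typing import Dict, List, Set


class MultiCloudPool:
    """Failover across clouds: use the highest-priority provider with a healthy server."""

    def __init__(self, providers: Dict[str, List[str]]) -> None:
        self.providers = providers   # insertion order = priority order
        self.down: Set[str] = set()  # servers currently failing health checks
        self._counter = count()      # drives round-robin inside a provider

    def pick(self) -> str:
        for servers in self.providers.values():
            healthy = [s for s in servers if s not in self.down]
            if healthy:
                return healthy[next(self._counter) % len(healthy)]
        raise RuntimeError("no healthy servers in any cloud")


pool = MultiCloudPool({"cloud-a": ["a1", "a2"], "cloud-b": ["b1"]})
pool.down.update({"a1", "a2"})  # simulate an outage at cloud-a
print(pool.pick())              # traffic fails over to cloud-b: b1
```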

If you've implemented a multicloud environment across multiple regions, an L3 load balancer is ideal since it can route traffic to the closest operational server in one of the cloud provider data centers. Otherwise, L4 or L7 load balancers will work for smaller geographic setups. When routing traffic inside one of the cloud providers, you can use an L2 load balancer to ensure traffic gets distributed efficiently inside the cloud provider's data center.

Conclusion

In this article, you took an in-depth look at two key components of web traffic management: reverse proxies and load balancers.

Reverse proxies help improve website performance, handle SSL termination and hide backend servers from clients, which makes them important for security. Load balancers, in contrast, manage web traffic by distributing client requests, increasing application availability and reliability, and providing scalability for growing traffic demands.

If you need a high-performance, fast and secure network to deploy your application globally, Equinix dedicated cloud provides full control of your network configuration and server hardware and software. You decide exactly how packets get routed using direct cloud-to-cloud or colo-to-cloud private networking and direct Layer 2 and 3 connections, allowing you to build high-performance Network-as-a-Service solutions globally.

Its features include Equinix's Load Balancer-as-a-Service, a powerful load balancing solution that uses the global Equinix network backbone. External traffic directed to your dedicated cloud compute clusters behind a load balancer gets transported through the global backbone, ensuring your clients' requests arrive at the most suitable location on your network, wherever in the world that may be. This ensures optimal performance and efficient use of resources and makes Equinix an excellent choice for businesses seeking to optimize their web traffic management.