How API Gateways Differ from Load Balancers, and Which to Use

Get a deeper understanding of what these essential technologies behind web services do, and how and when they can be used together.

Regardless of your web development experience, there's a good chance you've heard of load balancers and API gateways. At first glance, they might seem interchangeable—both sit between a web client and the server and can distribute requests to different servers. However, these terms refer to two distinct infrastructure services with unique purposes. Knowing these differences will help you build robust web applications that scale.

For example, when designing an e-commerce website, you need to understand the roles of load balancers and API gateways before deciding which to use. Load balancers can help you handle the influx of traffic by distributing requests to different servers to ensure a smooth user experience. In contrast, API gateways can handle tasks like authentication and authorization, helping you create a secure online shopping experience.

This article introduces both API gateways and load balancers so that you can make an informed decision about which to choose for your use case. It covers the advantages of each and explores scenarios where integrating API gateways and load balancers is advantageous.

More on load balancing:

- What Load Balancers Do at Three Different Layers of the OSI Stack

- How to Speed Up and Secure Your Apps Using DNS Load Balancing

- How Load Balancers Differ From Reverse Proxies, and When to Use Each

Understanding Load Balancers

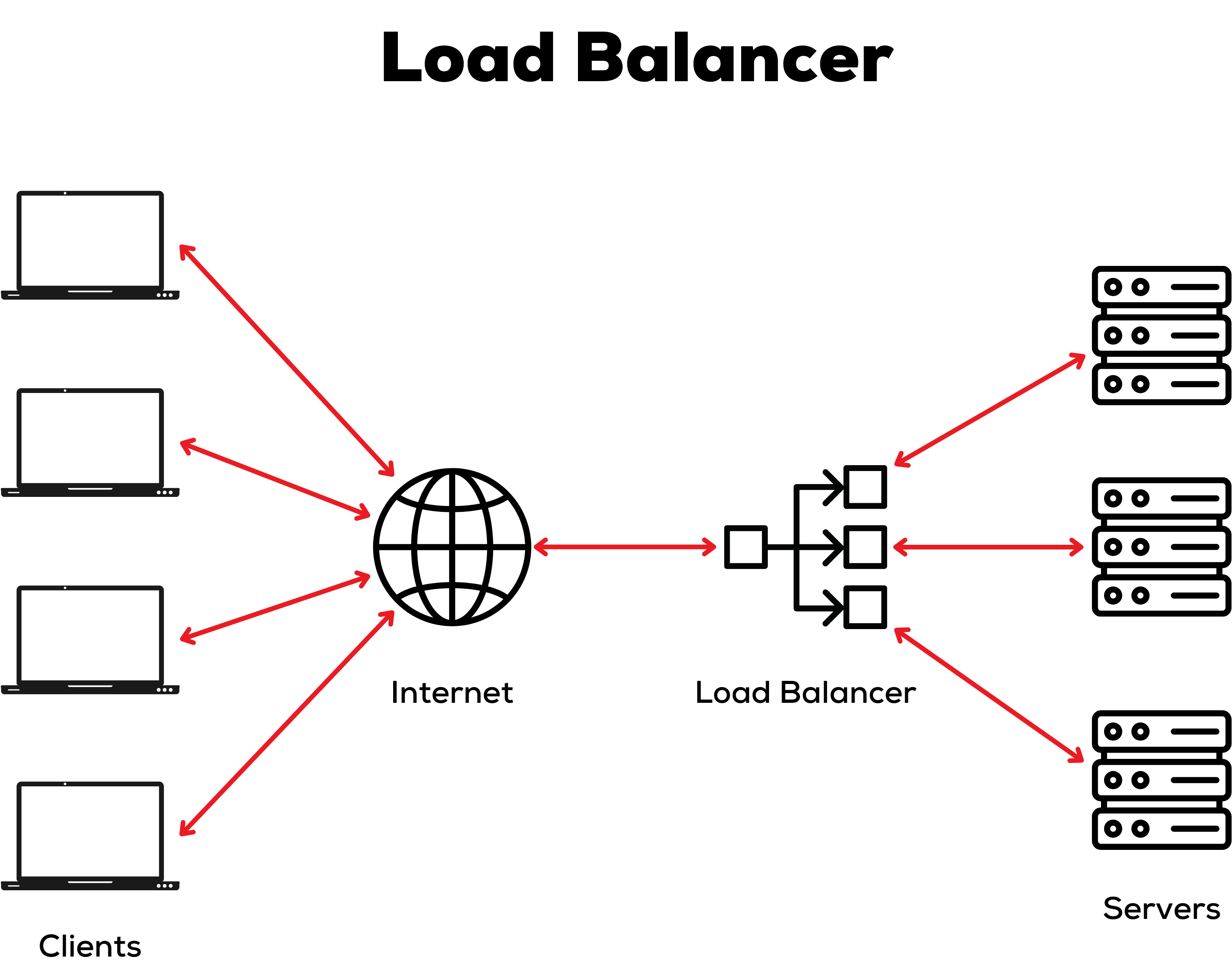

Load balancers distribute requests between two or more servers running the same application. Server pools group together servers that a load balancer might send traffic to. When a client sends a request to the load balancer, it delegates the request to one of the servers in the server pool and returns the response.

The following diagram illustrates how a load balancer manages user requests and responses:

Load Balancer Algorithms

Load balancers use different algorithms when deciding which server should receive a request.

Static load balancing uses a predetermined algorithm to distribute traffic across servers. This distribution is fixed and doesn't change based on server usage or response time. The round-robin algorithm is one such algorithm that cycles through servers in a predetermined order as it receives requests. The weighted round-robin algorithm adds weights to each server in the server pool, letting you send more requests to servers in the pool with a higher capacity. You can also use a randomized algorithm that distributes incoming requests to random servers in the server pool.
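The three static strategies described above can be sketched in a few lines of Python. This is a minimal illustration rather than how any particular load balancer implements them: the server names are hypothetical, and the weighted variant uses a naive expansion (each server is repeated `weight` times per cycle), whereas production implementations usually interleave servers more smoothly.

```python
import itertools
import random


def round_robin(servers):
    """Return an iterator that cycles through servers in a fixed order."""
    return itertools.cycle(servers)


def weighted_round_robin(weights):
    """Return an iterator where each server appears `weight` times per cycle.

    `weights` maps a server name to a positive integer weight.
    """
    expanded = [
        server for server, weight in weights.items() for _ in range(weight)
    ]
    return itertools.cycle(expanded)


def random_server(servers):
    """Pick a server uniformly at random, ignoring load and order."""
    return random.choice(servers)


# Hypothetical server names, for illustration only.
servers = ["app-1", "app-2", "app-3"]

rr = round_robin(servers)
first_four = [next(rr) for _ in range(4)]  # wraps back to app-1 on the 4th request

wrr = weighted_round_robin({"big": 2, "small": 1})
one_cycle = [next(wrr) for _ in range(3)]  # "big" receives two of every three requests
```

None of these functions look at server state, which is what makes them static: the order of selection is known before any request arrives.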

Sometimes you need to ensure a client connects to the same server throughout their session. The IP hash algorithm lets you configure this by creating a hash of the client's source IP address and using the hash to determine which server should process the request, so requests from the same address consistently reach the same server.
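As a rough sketch of the IP hash idea, the function below hashes the client address and maps the result onto the pool with a modulo. The choice of SHA-256 and the server names are assumptions made for the example; real load balancers use their own hash functions.

```python
import hashlib


def pick_server_by_ip(client_ip, servers):
    """Map a client IP address to one server in the pool, deterministically."""
    digest = hashlib.sha256(client_ip.encode("utf-8")).digest()
    # Interpret the first 8 bytes of the digest as an integer and wrap it
    # onto the pool size.
    index = int.from_bytes(digest[:8], "big") % len(servers)
    return servers[index]


# Hypothetical pool and client address.
servers = ["app-1", "app-2", "app-3"]
chosen = pick_server_by_ip("203.0.113.7", servers)
chosen_again = pick_server_by_ip("203.0.113.7", servers)  # same server as `chosen`
```

One caveat of this simple modulo mapping is that adding or removing a server changes `len(servers)`, which can move many clients to a different server.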

In contrast, dynamic load balancing adjusts the distribution of traffic based on real-time factors like server connections, bandwidth and response time. Dynamic load balancing is often more complex since algorithms must monitor servers and connections. The least connections algorithm delegates requests to the server in the pool with the fewest active connections. The weighted least connections algorithm is similar but lets you assign weights to each server so that servers with more capacity receive more requests. You can also delegate traffic based on the bandwidth of each server.

The least bandwidth algorithm lets you route requests to the server currently serving the least traffic, and the least packets algorithm lets you route requests to the server that has received the fewest packets in a certain time period. Finally, the least response time algorithm measures the average response time across the different servers and routes requests to the server with the fewest active connections and fastest average response time.
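The connection-based algorithms can be illustrated with a small sketch. The `Server` class and its fields are hypothetical, and the weighted variant assumes one common formulation (pick the lowest ratio of active connections to weight); a real load balancer would update these counters as connections open and close.

```python
from dataclasses import dataclass


@dataclass
class Server:
    name: str
    active_connections: int = 0
    weight: int = 1  # higher weight = more capacity (assumed convention)


def least_connections(pool):
    """Pick the server with the fewest active connections."""
    return min(pool, key=lambda s: s.active_connections)


def weighted_least_connections(pool):
    """Pick the server with the lowest connections-to-weight ratio."""
    return min(pool, key=lambda s: s.active_connections / s.weight)


# Hypothetical snapshot of a pool at one moment in time.
pool = [
    Server("app-1", active_connections=10, weight=1),
    Server("app-2", active_connections=12, weight=4),
    Server("app-3", active_connections=11, weight=1),
]

plain_choice = least_connections(pool).name              # app-1 has the fewest connections
weighted_choice = weighted_least_connections(pool).name  # app-2: 12 / 4 is the lowest ratio
```

The two functions disagree here on purpose: once capacity is taken into account, the server with the most connections is still the least loaded relative to what it can handle.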

- More on the algorithms: How Load Balancing Algorithms Work, and How to Choose the Right One

Load Balancers at Different OSI Layers

Load balancers can be configured at different OSI layers. Each implementation has its own features and purpose.

Application-level load balancers operate at the OSI model's application layer (Layer 7). These load balancers interpret and understand the request contents, such as the URL, HTTP headers and cookies. With this additional request context, Layer 7 load balancers can offer advanced functionality like SSL termination, compression and session persistence.

Further down the OSI model, Layer 4 (transport layer) load balancers, or network load balancers, make forwarding decisions based on network- and transport-layer information, such as the source and destination IP addresses and port numbers. The request contents are not parsed or inspected, making Layer 4 load balancers remarkably efficient when forwarding requests. These load balancers often use the least response time, least bandwidth, or least packets algorithms to route traffic.

Global server load balancers operate at Layer 3 (the network layer) in the OSI model. These load balancers have servers in different data centers worldwide, which form a global server pool. When the load balancer receives a request, it considers the user's proximity to a server and the server's health to route the request. If a region or data center goes down, global server load balancers can redirect requests to servers in a nearby geographic location. This redundancy lets you build highly available, globally accessible applications.

Finally, Layer 2 (L2) load balancers operate on the OSI model's data link layer. They manage traffic based on MAC addresses and other Layer 2 information, like Ethernet frame headers and VLAN tags. These load balancers are typically used in data centers to distribute traffic between servers within a local network rather than across multiple networks. They do this by working with frames and the information contained within them instead of packets, which L3 load balancers use. This approach provides efficient traffic management in a local network and improves performance within a LAN.

Load Balancer Benefits

Load balancers distribute traffic across multiple servers, letting you share an application's computational load across servers. By sharing the load, load balancers reduce the risk of your application going down because a single server is overloaded or taking too long to service a request. If one of the servers takes too much time to process a large request, the load balancer forwards requests to other servers in the server pool. This redundancy enhances your application's availability and minimizes downtime.

Server pools make scaling your applications straightforward. You can add new servers to your load balancer's server pool to scale your application's computational capacity. Once the servers are added, the load balancer starts routing traffic to them.

Load Balancer Use Cases

Load balancers are well suited for the following use cases:

- Distribution of traffic across multiple servers: A high-traffic website can use a network load balancer (Layer 4) to distribute requests among multiple web servers using the least response time algorithm.

- Improved scalability and redundancy: An e-commerce platform can use a load balancer to automatically redirect incoming traffic to healthy servers in the event of a server failure. The load balancer does this by proactively monitoring connections to each server and routing requests to healthy servers.

- SSL termination: An application-level load balancer (Layer 7) can decrypt incoming requests before distributing them to servers using an algorithm like least connections. Because the backend servers no longer spend CPU time on decryption, they can process requests more efficiently.

- Session persistence: A Layer 7 load balancer can be configured to route requests to the same server in a pool for the duration of a user's session. For example, a banking website might want to make sure that all requests made by a user go to the same server throughout their session to provide a seamless experience for the user.

- Backend server health checks: To proactively detect unhealthy servers, you can configure scheduled health checks in the load balancer. These checks involve pinging servers or sending a request to a particular endpoint on the server. For example, a video streaming service can use health checks to monitor server load and ensure users continuously stream videos from the most efficient server using the least bandwidth algorithm.

- Data center load balancing: L2 load balancers are particularly effective in data center environments where multiple servers on the same network provide the same service. The load balancer works within a single network and efficiently distributes requests within the data center's LAN.
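The health check use case above can be sketched as a filter over the server pool. The probe is passed in as a function so the example runs without a network; in practice it might ping the server or request a dedicated endpoint. `healthy_servers`, `fake_probe` and the server names are all hypothetical.

```python
def healthy_servers(pool, probe):
    """Return the servers for which `probe` succeeds.

    `probe` takes a server and returns True if it is healthy. A probe that
    raises (for example, on a timeout) marks the server as unhealthy.
    """
    healthy = []
    for server in pool:
        try:
            ok = probe(server)
        except Exception:
            ok = False
        if ok:
            healthy.append(server)
    return healthy


def fake_probe(server):
    """Stand-in for a real check: app-2 times out, app-3 reports a failure."""
    if server == "app-2":
        raise TimeoutError("no response")
    return server != "app-3"


pool = ["app-1", "app-2", "app-3", "app-4"]
available = healthy_servers(pool, fake_probe)  # only app-1 and app-4 remain
```

A load balancer would run a check like this on a schedule and apply its routing algorithm only to the servers that passed the most recent round.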

Understanding API Gateways

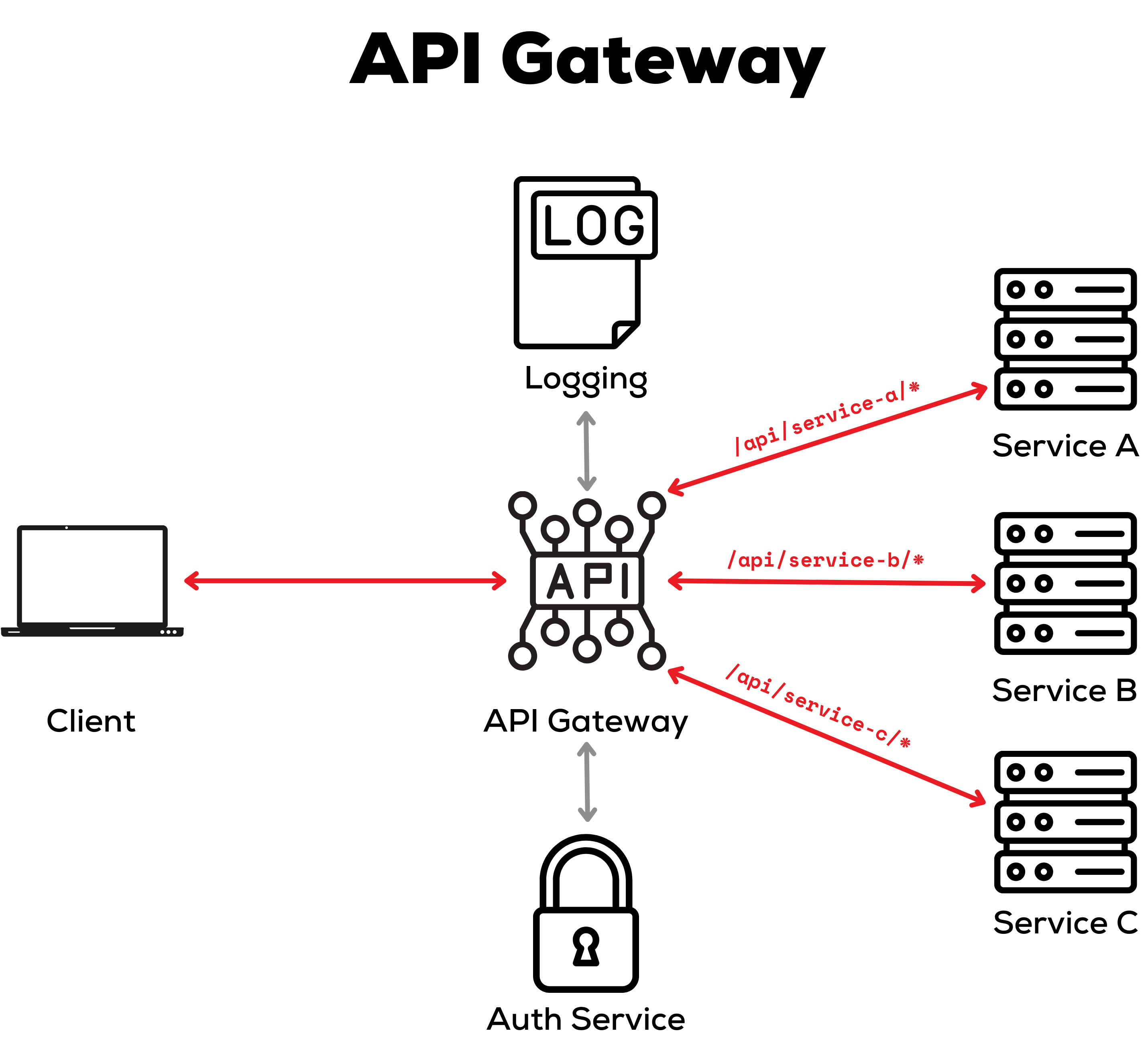

API gateways provide a single entry point for all requests to your backend systems. When clients send requests to the API gateway, the gateway maps the request to the correct service in an organization's internal network. When routing requests, API gateways can also handle tasks like authentication, authorization, logging and protocol translation.

API gateways are implemented in the application layer (Layer 7) of the OSI model. This lets them parse incoming requests and use the parsed data to decide where each request should go. Requests are routed based on their path, headers, query parameters and other attributes. The gateway might also serve a cached response, if configured, for the request endpoint.
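Path-based routing, the simplest of the attributes mentioned above, might look like the following sketch. The route table, the internal hostnames and the longest-prefix rule are assumptions for illustration; real gateways typically also match on methods, headers and query parameters.

```python
# Hypothetical route table: path prefix -> internal service URL.
ROUTES = {
    "/quotes": "http://quotes.internal:8080",
    "/tracking": "http://tracking.internal:8080",
    "/tracking/live": "http://live-tracking.internal:8080",
}


def resolve_upstream(path, routes=ROUTES):
    """Return the upstream for the longest matching prefix, or None."""
    matches = [
        prefix
        for prefix in routes
        if path == prefix or path.startswith(prefix + "/")
    ]
    if not matches:
        return None
    return routes[max(matches, key=len)]


quote_upstream = resolve_upstream("/quotes/42")
live_upstream = resolve_upstream("/tracking/live/7")  # the more specific prefix wins
unknown_upstream = resolve_upstream("/billing")       # no route configured
```

Matching on `prefix + "/"` rather than on the bare prefix keeps a path such as `/quotesarchive` from being routed to the quotes service by accident.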

API Gateway Benefits

API gateways simplify API management. The centralized control and monitoring make it easy to maintain, update and add new API endpoints pointing to different services on different servers.

In addition, many API gateways come with advanced security features. For example, you can integrate your API gateway with your authentication provider to grant users access to specific endpoints. You can also configure API keys and set granular rate limits for different endpoints in the API gateway to prevent clients from overloading or abusing your services.

API gateways also provide a consistent, unified API for your internal services. Using transformations, you can convert an incoming request into a protocol and structure that your internal service can accept. For example, you can translate a REST request into a GraphQL request that gets sent to an upstream service. You can also aggregate results from different internal services into a single response, letting you abstract the details of your internal infrastructure.
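As one small instance of a transformation, the sketch below converts a flat JSON request body into XML for an upstream service and converts a flat XML response back into JSON. It assumes a hypothetical upstream that only speaks XML and handles only flat key-value payloads; the tag and field names are made up for the example.

```python
import json
import xml.etree.ElementTree as ET


def json_to_xml(json_body, root_tag="request"):
    """Convert a flat JSON object into a single-level XML document."""
    data = json.loads(json_body)
    root = ET.Element(root_tag)
    for key, value in data.items():
        child = ET.SubElement(root, key)
        child.text = str(value)
    return ET.tostring(root, encoding="unicode")


def xml_to_json(xml_body):
    """Convert a single-level XML document into a flat JSON object."""
    root = ET.fromstring(xml_body)
    return json.dumps({child.tag: child.text for child in root})


# What the client sends to the gateway, and what the upstream receives.
upstream_request = json_to_xml('{"orderId": 42, "status": "shipped"}')

# What the upstream returns, and what the client receives.
client_response = xml_to_json("<response><orderId>42</orderId></response>")
```

Note that the round trip is lossy in this simple form: XML text has no types, so the number `42` comes back as the string `"42"`. A real mapping would need a schema or explicit type rules.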

API Gateway Use Cases

API gateways are beneficial when building systems with the following use cases:

- Centralizing API management and security across multiple services: If several internal services require security, it can be cumbersome to implement authentication and authorization in each service. This is a common scenario when building a microservices architecture, such as a logistics company with microservices for quoting, tracking, routing and customer support that must authenticate and authorize users using the company's identity provider. Fortunately, API gateways let you implement and manage API security in a central place and, in some cases, even integrate with your company's identity provider.

- Legacy systems: Legacy systems often don't conform to modern content formats. For example, a legacy system might only accept requests and respond using XML, even though JSON is a far more popular format for modern web APIs. API gateways' protocol translation lets you map between these different formats directly within the API gateway.

- Simplifying the developer experience: If you're building a public-facing or partner API, an API gateway can massively improve the developer experience for engineers consuming your API. They only need a single endpoint to access all your services, instead of using different endpoints for different services. Many API gateways also let you document endpoints configured in the gateway and generate OpenAPI (Swagger) files.

- Rate limiting: If your API is going to be exposed to the public or even consumed in a public app, rate limiting is beneficial. Rate limiting restricts how many requests a client can make within a given time window, such as an hour or a day. Without rate limits, malicious actors might try to overload and crash your application by rapidly requesting these endpoints.

- Throttling: Throttling is more disruptive than rate limiting and cuts off a client's access until a predefined timeout expires or the client requests a higher rate. For example, if you expose a paid API to developers where a paid plan lets developers make a hundred requests a month, you can use throttling with your API gateway to block the client's requests after they've hit the limit.

- Caching static content: Your application might have endpoints that expose infrequently updated data and get called quite often by the client. For example, your application might have an endpoint to retrieve all lookup values for a form field. API gateways give you flexible rules that you can use to cache frequently accessed and infrequently updated data returned by your API. You can configure a lifetime for cached responses, after which the API gateway will refresh its cache by fetching from the application server.
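The rate limiting use case above can be sketched with a fixed-window counter per client. This is one of several possible strategies (token buckets and sliding windows are common alternatives), and the class name, client IDs and limits are hypothetical. The clock is injectable so the behavior can be demonstrated without waiting.

```python
import time


class FixedWindowRateLimiter:
    """Allow at most `limit` requests per client in each time window."""

    def __init__(self, limit, window_seconds, clock=time.monotonic):
        self.limit = limit
        self.window_seconds = window_seconds
        self.clock = clock
        self._windows = {}  # client_id -> (window_start, request_count)

    def allow(self, client_id):
        now = self.clock()
        start, count = self._windows.get(client_id, (now, 0))
        if now - start >= self.window_seconds:
            # The previous window has expired, so start a fresh one.
            start, count = now, 0
        if count >= self.limit:
            self._windows[client_id] = (start, count)
            return False
        self._windows[client_id] = (start, count + 1)
        return True


# Demonstration with a fake clock: 2 requests per 60 seconds.
fake_now = [0.0]
limiter = FixedWindowRateLimiter(2, 60, clock=lambda: fake_now[0])

results = [limiter.allow("client-a") for _ in range(3)]  # third request is rejected
other_client = limiter.allow("client-b")                  # limits are tracked per client
fake_now[0] = 61.0
after_window = limiter.allow("client-a")                  # allowed again in a new window
```

A gateway would typically key the counter on an API key or client identity and respond with HTTP 429 (Too Many Requests) when `allow` returns `False`.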

Using Load Balancers and API Gateways Together

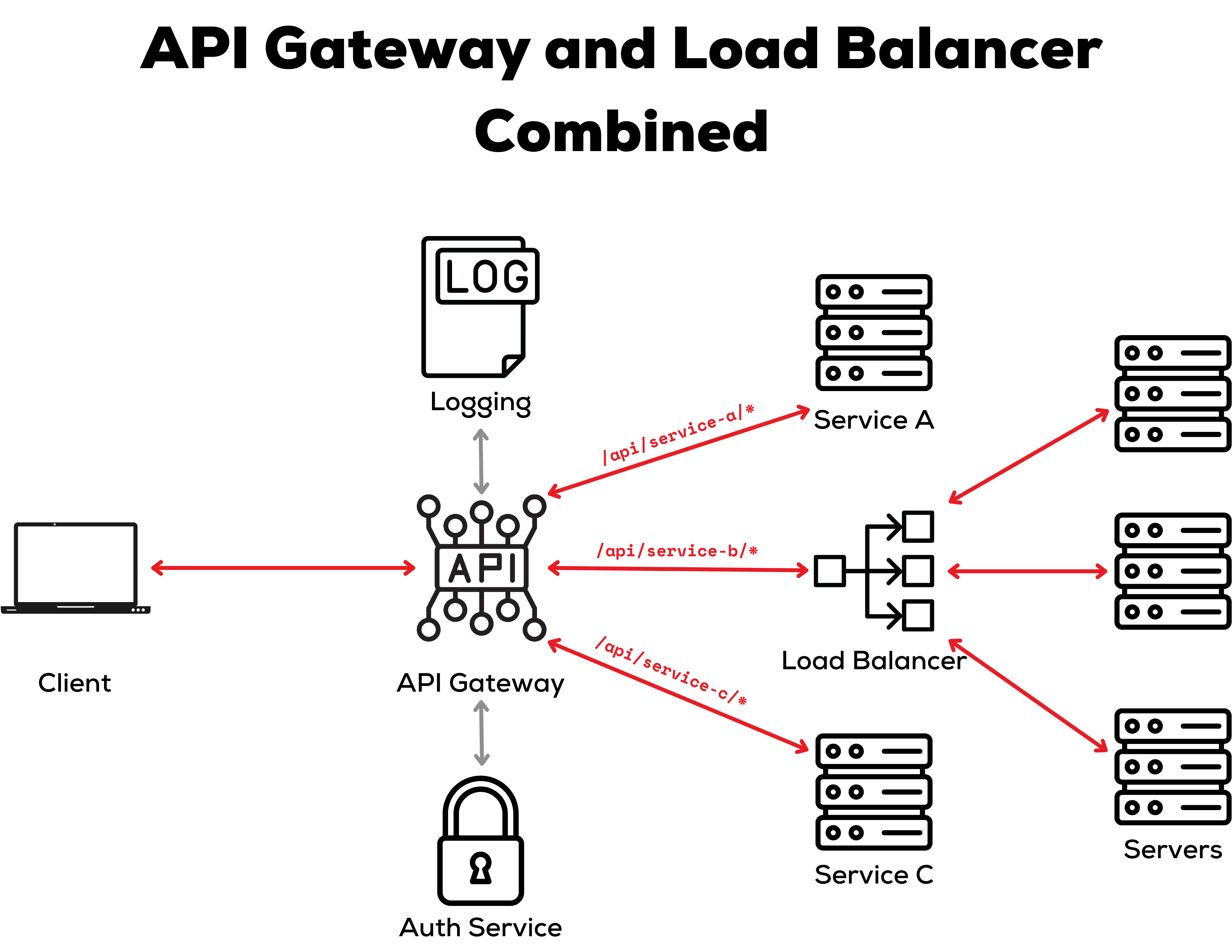

Even though load balancers and API gateways serve different purposes, they can be used together. This solution combines the traffic management of a load balancer with the API access control of a gateway, giving you the best of both worlds.

Benefits of Combining Load Balancers and API Gateways

Using API gateways and load balancers together helps ensure your application is highly available. In this scenario, the load balancer plays a crucial role in maintaining application availability within your infrastructure by directing requests to operational servers. At the same time, API gateway features like caching, throttling and rate limiting can minimize the volume of requests reaching application servers, alleviating the load and ensuring optimal availability.

When you use both an API gateway and a load balancer together, you also get enhanced traffic management and routing. The API gateway decides which service should handle a request, and the load balancer decides which of the servers running that service receives it. This lets you route traffic efficiently at both levels.
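The two levels of routing can be sketched together. In this simplified model, which is an assumption for illustration rather than a description of any specific product, the gateway maps a path prefix to a service, and each service sits behind its own round-robin load balancer. All class, service and server names are hypothetical.

```python
import itertools


class LoadBalancer:
    """Round-robin balancer over the servers of one service."""

    def __init__(self, servers):
        self._cycle = itertools.cycle(servers)

    def pick(self):
        return next(self._cycle)


class Gateway:
    """Maps a request path to a service, then asks its balancer for a server."""

    def __init__(self, routes):
        self.routes = routes  # path prefix -> LoadBalancer

    def dispatch(self, path):
        for prefix, balancer in self.routes.items():
            if path == prefix or path.startswith(prefix + "/"):
                return balancer.pick()
        return None  # no service is configured for this path


gateway = Gateway({
    "/quotes": LoadBalancer(["quotes-1", "quotes-2"]),
    "/tracking": LoadBalancer(["tracking-1"]),
})

targets = [
    gateway.dispatch("/quotes/1"),   # first quotes server
    gateway.dispatch("/quotes/2"),   # second quotes server
    gateway.dispatch("/quotes/3"),   # wraps around to the first
    gateway.dispatch("/tracking"),   # separate pool, separate rotation
    gateway.dispatch("/billing"),    # unknown service
]
```

Because the gateway only knows about services and the balancers only know about servers, adding a server to a pool does not require touching the gateway's route table.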

As previously mentioned, API gateways and load balancers can both enhance security. Combining the two gives you the authentication, authorization and rate limiting features of an API gateway and the security features of load balancers, like SSL/TLS termination.

Combining the two infrastructure services also increases your application's scalability and performance. You get the scalability advantages that load balancers provide, and your API gateway's caching features help you avoid sending unnecessary requests to your application servers.
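The effect of gateway caching on backend load can be illustrated with a small time-to-live (TTL) cache. This is a sketch under simple assumptions: entries are keyed by endpoint path, expire after a fixed lifetime, and are refreshed on the next request. The class name and the `fetch_lookup_values` stand-in for the application server are hypothetical.

```python
import time


class TTLCache:
    """Cache responses for `ttl_seconds`, then refetch on the next request."""

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self.ttl_seconds = ttl_seconds
        self.clock = clock
        self._entries = {}  # key -> (expires_at, value)

    def get_or_fetch(self, key, fetch):
        now = self.clock()
        entry = self._entries.get(key)
        if entry is not None and now < entry[0]:
            return entry[1]  # still fresh: the backend is not contacted
        value = fetch()
        self._entries[key] = (now + self.ttl_seconds, value)
        return value


# Demonstration with a fake clock and a counter for backend calls.
fake_now = [0.0]
backend_calls = []


def fetch_lookup_values():
    """Stand-in for a request to the application server."""
    backend_calls.append(fake_now[0])
    return ["small", "medium", "large"]


cache = TTLCache(ttl_seconds=300, clock=lambda: fake_now[0])
for _ in range(5):
    cache.get_or_fetch("/lookups/sizes", fetch_lookup_values)
calls_while_fresh = len(backend_calls)   # five requests, one backend call

fake_now[0] = 301.0
cache.get_or_fetch("/lookups/sizes", fetch_lookup_values)
calls_after_expiry = len(backend_calls)  # the expired entry triggers a refetch
```

Requests served from the cache never reach the load balancer or the application servers, which is where the combined setup saves capacity.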

When You Should Combine Load Balancers and API Gateways

The advantages of combining load balancers and API gateways are compelling, but it's important to remember that setting up both infrastructure services can be complicated. Make sure you actually need both before you implement them.

You'll derive the most benefit from using both API gateways and load balancers in the following scenarios:

- Microservice architecture: API gateways and load balancers simplify exposing microservices to end users by letting API gateways handle application-level routing and letting load balancers handle network traffic. You can also add new servers to load balancer pools without updating the API gateway.

- High-traffic applications: By combining API gateways and load balancers, you can cater to high-traffic scenarios by caching popular, infrequently updated endpoints in the API gateway. This way, requests to these endpoints don't overload your application server. When requests go to the application server, the load balancer ensures that all the servers are being utilized effectively.

- Hybrid cloud environments: If you're building public and private cloud applications, API gateways can provide a single endpoint to access services in both environments. In this scenario, load balancers can also help by routing traffic to the geographically closest server to reduce the end user's latency.

Conclusion

While they might seem similar at first glance, load balancers and API gateways are vastly different and serve different purposes in your infrastructure. Load balancers are an excellent choice if you need to scale a single application across multiple servers and have a single endpoint for that application. API gateways are useful if you need a single endpoint that provides access to several services in your internal network.

Consider combining API gateways and load balancers to cater to high-traffic situations or if you're distributing your application across different microservices. It's essential to consider your needs and decide which approach will be most appropriate for your application.

Most major cloud providers offer load balancer and API gateway services. The load balancer-as-a-service on Equinix’s dedicated cloud is uniquely powerful because of its use of the global Equinix network backbone. External traffic headed for your Metal clusters behind a Metal load balancer automatically ends up on our backbone to get delivered to its destination, wherever on the planet that may be. Think of it as a private global fast lane for your data and a building block as you create the kind of network your application needs.