ARM’ing the World with an ARM64 Bare Metal Server

Since our Series A funding announcement two months ago led by SoftBank Corp (the same SoftBank that acquired ARM Holdings in a $31 billion deal this summer), we’ve been asked several times for our thoughts on ARM chips in the datacenter. Well, we think it matters. Big time. So much so that we’ve been hard at work on an ARMv8 server solution for nearly a year -- well before Mr. Son flew to Greece to bid on taking over ARM Holdings!

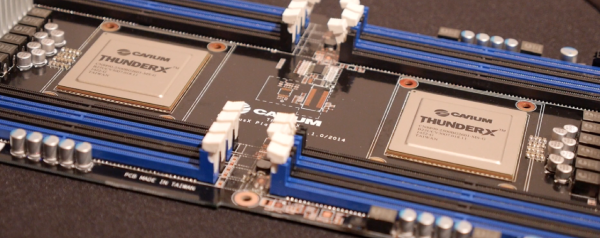

As such, it is with great pleasure (and a whole lot of anticipation) that I'm writing to announce that you can now provision hourly, on-demand ARMv8 servers powered by two 48-core Cavium SoCs on Packet. We’re starting with our EWR1 home (New York metro), as well as Sunnyvale and Amsterdam. We’ll add Tokyo in early December when the facility opens for production customers.

The amazing thing about this server (which also features 128 GB of DDR4 RAM and 320 GB of M.2 SSD flash) is the incredibly low cost per core: at $0.50/hr for 96 cores, you’re paying about 1/10th the cost per core (per hour) versus our Intel-based Type 2 machine. The comparison isn’t exact, but the fuzzy math pans out if you can use 96 cores in a single machine.
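To make the fuzzy math concrete, here is a rough sketch in Python. It uses only the figures quoted above ($0.50/hr, 96 cores, and the "about 1/10th" ratio). The function name is illustrative, and the Intel figure is backed out from that ratio as an assumption rather than taken from a published price.

```python
def cost_per_core_hour(price_per_hour: float, cores: int) -> float:
    """Hourly price divided evenly across all cores in the machine."""
    if cores <= 0:
        raise ValueError("cores must be positive")
    return price_per_hour / cores


# Figures quoted in this post for the ARMv8 server.
ARM_PRICE_PER_HOUR = 0.50
ARM_CORES = 96

arm_core_hour = cost_per_core_hour(ARM_PRICE_PER_HOUR, ARM_CORES)

# Assumption: the "about 1/10th" ratio is taken at face value to back out
# an implied per-core cost for the Intel comparison. It is not a quoted price.
implied_intel_core_hour = arm_core_hour * 10

print(f"ARMv8: ${arm_core_hour:.4f} per core-hour")
print(f"Implied Intel Type 2: ~${implied_intel_core_hour:.3f} per core-hour")
```

That works out to roughly half a cent per core-hour on ARM, which only helps if your workload can actually keep all 96 cores busy.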

Bringing an automated ARMv8 solution to the datacenter wasn’t easy, and this marks an important step for us in building a rich ecosystem of ARMv8-optimized workloads. To help grease the wheels, it’s worth noting that Packet’s APIs, third-party integrations and powerful metadata service are all 100% supported with this launch, so you’ll find deploying your first ARM server cluster as easy as deploying the Intel-powered types we offer.

Just like everything else we do, these are single-tenant bare-metal servers, not virtualized slices. We’re starting with Ubuntu 16.04 and CentOS 7, but have CoreOS and FreeBSD almost ready for prime time, along with IoT-focused OS flavors. Want something specific? Just ask and we’ll be glad to add it to our public roadmap!

Background on ARM

For those of you who aren't microprocessor junkies, ARM creates a processor architecture built around a reduced set of CPU instructions (commonly referred to as “RISC”). While CISC chips like Intel x86 implement a broad set of generalized CPU features that let compilers offload a lot of common operations onto the CPU, ARM takes a more “lean and mean” approach that forces compilers to do a little more work upfront, but allows for more control and better optimization for specific workloads.

Importantly, ARM chips are made and sold differently. ARM Holdings essentially licenses the chip architecture and CPU core designs to companies that customize them and then either fabricate them directly or contract the silicon work out to third-party fabricators like TSMC. Licensees include Applied Micro, Broadcom, NVIDIA, Huawei, AMD, Samsung and Apple.

Because of the diverse makeup of licensees and their focus on customizing chips for specific markets, ARM chips tend to be significantly more competitive than generic Intel E3 or E5 chips, which are made for a broad set of use cases and effectively lack competition. Basically there is a lot more choice when buying an ARM processor vs. when buying an x86 one.

ARM chip designs are also focused on power efficiency, which is why nearly every smartphone, IoT device or remote control for your TV is made with an ARM chip design inside of it.

Who Cares?

If a powerful ARMv8 server lands in a datacenter near you... does anybody care? The question deserves an entire post of its own, so you can expect that coming down the pike. But basically we think so, and here are the CliffsNotes reasons why:

- Choice: Intel vs Intel?

- Workload portability

- Workload specific processing

- High core count

- Security

Use Cases

While we have our own ideas, the main reason we just deployed hundreds of thousands of dollars of ARMv8 servers in our datacenters is to get your help in finding the use cases. From our beta users, we are seeing the following as strong leaders:

Building on ARM - Instead of doing all your ARM building on a Raspberry Pi located under your desk, or in a container on a VM inside of VirtualBox on your laptop, you can now do so on 96 cores of dedicated ARMv8 in our fancy temperature-controlled, interweb-connected, man-trapped, state-of-the-art datacenters. Oh, and hook it up to your CI pipeline using standard AWS-style cloud-init and provisioning hooks.

Porting to ARM - Have an open-source project and want to port it to the ARM architecture, or simply test whether your multi-arch support really works? Now’s your chance to get easily-consumable ARM to port and test your software without having to bear the burden of getting an OS to boot successfully on an ARM VM/host. (It wasn’t trivial! More on that later.)
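A quick first check when testing multi-arch support is confirming which architecture your code is actually running on. Here is a minimal sketch in Python using the standard library's `platform.machine()`. The helper name `is_arm64` and the set of accepted strings are assumptions for illustration (Linux typically reports `aarch64`, while some other systems report `arm64`).

```python
import platform

# Assumption: these are the machine strings commonly reported for 64-bit ARM.
ARM64_NAMES = {"aarch64", "arm64"}


def is_arm64(machine=None) -> bool:
    """Return True if the given (or current) machine string names 64-bit ARM."""
    name = machine if machine is not None else platform.machine()
    return name.lower() in ARM64_NAMES


if __name__ == "__main__":
    # Print what the interpreter sees, so a CI log shows the real target.
    print(f"machine={platform.machine()!r} arm64={is_arm64()}")
```

Dropping a check like this at the top of a build or test script makes it obvious in the logs whether a job really ran on ARM or quietly fell back to an x86 runner.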

Docker Containers - One of the workloads we’re most interested to see is Docker containers. Containerization in an automated, bare-metal world obviates the need for (and penalty of) the virtual machine, and we’re excited to see what price/performance looks like for containers running on ARM and on high core count systems, where multi-threading is an advantage over pure clock speed.

Network Applications - With ARMv8 and SSL/crypto offload, a lot of network applications like HTTP/S, load balancing, switching, VPN, and tunneling will find a natural home on the ARM architecture. If you need to deliver high-performance network applications to your end users, you should be looking at ARMv8. We’re testing Cavium’s Nitrox SSL offload in our labs now and will report back on the results.

Price - Oh yeah, it all comes down to price: price per watt, basically. If you’re running a SaaS platform, crunching some data set or trying to optimize a large workload, the cost savings on the 96-core systems could be a game changer for you.

In the end, everybody wins when we have automated, repeatable ARM servers in the datacenter. We’re excited to take a big step towards that future today and can’t wait for your feedback, bug reports and performance comparisons. Thanks for helping us make the Internet a bit better today!