What’s Old Is Cool Again: Liquid Cooling Unlocks Data Center Sustainability

Like Kate Bush ran up those pop charts in the summer, a cooling technology invented decades ago is poised to make a comeback in the data center.

The fastest way to cool your favorite beverage is to spin it in a saltwater bath. Liquids are great heat conductors. Air, meanwhile, is the opposite of a great conductor: it’s a great insulator. Given how quickly the power density of CPUs has been rising, we think it’s time to reassess the runway current air-based data center cooling systems give us. Perhaps we can mix things up and take liquid cooling for another spin!

Air cooling dominates computing today, with fans spinning fast to remove heat from laptops, servers, switches, and so on. Blowing cold air over an inexpensive heatsink can be very cost effective, or at least appear that way if all you look at is the acquisition cost. But as computers get more powerful and the amount of heat that must be removed per cubic inch of air increases, dissipating that heat becomes harder for data center cooling systems, and taking a close look at liquid cooling vs air cooling becomes necessary.

Liquid Cooling vs Air Cooling: the ‘Magic Numbers’

We think a lot about these things in the data center space, things like removing heat from point A and dissipating it at point B. People in our industry use a few “magic numbers” that pinpoint the power density thresholds at which air cooling becomes too inefficient: 200W in the space of one standard data center rack unit, 350W in the space of two rack units, and 20kW within the footprint of a standard, 600-millimeter-wide server rack.

These numbers, referred to as TDP (Thermal Design Power), roughly describe the heat density, or heat flux, on a single piece of silicon. These thresholds are where the amount of energy spent moving air of a specified temperature over a heatsink forces one to start considering liquid cooling vs air cooling, and doubly so if we also take into account the equipment’s total cost of ownership and carbon footprint over its lifespan. Energy consumption is responsible for the largest portion of a server’s CO2 equivalent emissions (CO2e).
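As a quick illustration, the three thresholds can be written down as a lookup and a check. This is only a sketch: the dictionary layout, the form-factor labels, and the function name are our own illustrative choices, and the thresholds are the rule-of-thumb values quoted above rather than hard limits.

```python
# Rule-of-thumb thresholds quoted in the article (watts).
# The keys and the function name are illustrative, not an industry API.
AIR_COOLING_LIMITS_W = {
    "1RU": 200,      # one standard rack unit
    "2RU": 350,      # two rack units
    "rack": 20_000,  # one 600 mm wide server rack
}


def exceeds_air_cooling_limit(form_factor: str, power_w: float) -> bool:
    """Return True when power_w is above the quoted threshold for form_factor."""
    return power_w > AIR_COOLING_LIMITS_W[form_factor]


if __name__ == "__main__":
    print(exceeds_air_cooling_limit("1RU", 280))      # True
    print(exceeds_air_cooling_limit("rack", 12_000))  # False
```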

As the World's Digital Infrastructure Company™, Equinix can play a positive role in bringing about a sustainable future. In 2015 the company established its 100% renewable energy target and in 2021 expanded its commitment to include science-based targets and climate neutrality. One of the tools in our toolbox for building sustainable data centers is liquid cooling.

Data Center Liquid Cooling 101

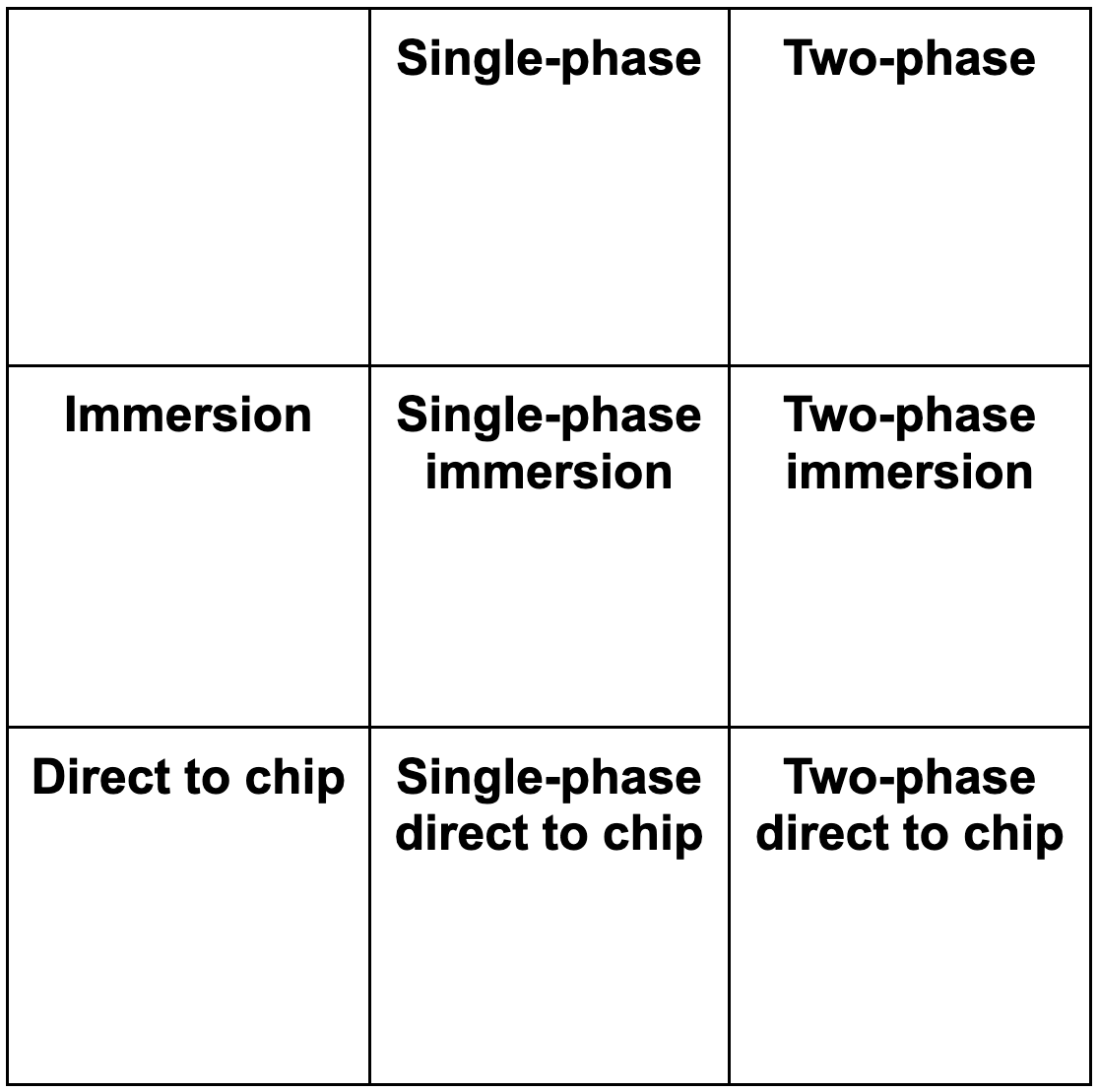

Let’s start with some basics. Liquid cooling technologies used in data centers fall into two broad categories: immersion and “direct to chip.” In both categories there are “single-phase” and “two-phase” designs, and the distinction between them has to do with the different physical properties of the liquid used for heat removal. If all this were in a Punnett square, the square would look like this:

The first dimension describes the method for containing the “working” fluid. Immersion cooling means all server components are fully submerged in a dielectric fluid (fluid that doesn’t conduct electricity). Direct-to-chip designs use a plumbing system to pump coolant to metal “cold plates” that are attached directly to processors.

Single-phase liquid cooling uses conduction to remove heat, simply transferring it from the warmer body (the processor) to the cooler body (the fluid). Two-phase cooling removes heat through a phase transformation: once the source reaches a certain temperature, the liquid gets transformed into a gas. Pure water, for example, boils at 100C at 1 atm (unit of atmospheric pressure). The 3M Novec 7000 engineered fluid used in many two-phase systems boils at 34C at 1 atm.

Direct-to-chip single-phase liquid cooling is a mature and well-understood technology. It was used to cool the original IBM System 360, released circa 1964 (the first liquid-cooled computer), and it cools the latest exascale supercomputers, Frontier and Aurora. The working fluid in these systems is deionized water or PG25 (a mix of water and 25% propylene glycol).

Direct-to-chip two-phase solutions are relatively recent market arrivals that hold a lot of promise.

(We acknowledge but avoid systems that rely on “air-to-liquid” heat exchangers, rack cooling doors, and in-row coolers, since they leverage indirect heat transfer.)

Liquid Cooling Is Not a Sport With Many Fans

Electric fans are the primary mechanism for moving air through servers and switches, pulling cool air in at the front and pushing hot air out at the rear. Operators have two “knobs” they can turn to adjust for more or less heat in an air cooling system: air temperature and air volume, the latter measured in CFM, or Cubic Feet per Minute. A server getting too hot? Make the air cooler, push more of it through the system, or do both.

Server fans can consume a tangible amount of power when running at full tilt. A 40mm 29,500/25,500 rpm contra-rotating fan, aka “mini-jet,” uses 30.24W (2.52A at 12V). Fans in a typical 1RU system running at full load consume 241.92W.

Therefore, a rack of 30 1RU servers could take 7.26kW just to power the fans inside each of the boxes. Even after an 80% derating (reducing rotation speed), all the fans in the rack would use 1.45kW. And that’s not counting the fans that cool server power supplies. We can do better!
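The fan arithmetic above can be reproduced in a few lines. This is a sketch under two assumptions: that the 1RU system has eight fans (our inference from 241.92W divided by 30.24W), and that an 80% derating leaves 20% of the full-speed power draw, which is the simplification the figures above imply.

```python
# Figures from the article; FANS_PER_SERVER is inferred (241.92 W / 30.24 W).
FAN_CURRENT_A = 2.52
FAN_VOLTAGE_V = 12.0
FANS_PER_SERVER = 8
SERVERS_PER_RACK = 30
DERATING = 0.80  # treated as a straight 80% cut in fan power

fan_w = FAN_CURRENT_A * FAN_VOLTAGE_V            # one fan at full speed
server_fans_w = fan_w * FANS_PER_SERVER          # all fans in one 1RU server
rack_fans_w = server_fans_w * SERVERS_PER_RACK   # all server fans in the rack
derated_rack_fans_w = rack_fans_w * (1 - DERATING)

if __name__ == "__main__":
    print(f"Per fan:        {fan_w:.2f} W")
    print(f"Per server:     {server_fans_w:.2f} W")
    print(f"Per rack:       {rack_fans_w / 1000:.2f} kW")
    print(f"Rack, derated:  {derated_rack_fans_w / 1000:.2f} kW")
```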

The parts of a direct-to-chip liquid cooling solution that are inside the server chassis are passive (no pumps needed). They use an external heat exchanger to remove heat from the working liquid. Here it’s important to remember the problem that direct-to-chip liquid cooling solves: heat density inside a server that cannot be easily addressed with a heatsink.

The external heat exchanger has two options for dissipating the heat: exhausting it into the data center hot aisle or sending it into the facility cooling loop. Even in a worst-case scenario, in which a liquid-to-air exchanger consuming 750W is used, the 30-server rack would save roughly 6.5kW. Compared to the scenario where the fans are 80% derated, the liquid-cooled rack would need 701W less.
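The two comparisons above are simple subtractions, sketched below. The function name is hypothetical, and the calculation follows the text in comparing only the high-flow server fans against the exchanger, leaving out any fans that remain in the chassis.

```python
# Rack-level fan loads from the article (30 servers x 241.92 W, and 20% of that).
RACK_FANS_FULL_W = 7257.6
RACK_FANS_DERATED_W = 1451.52
LIQUID_TO_AIR_EXCHANGER_W = 750.0  # worst-case exchanger draw quoted above


def rack_saving_w(fan_load_w: float, exchanger_w: float) -> float:
    """Power saved when an exchanger replaces the rack's high-flow server fans."""
    return fan_load_w - exchanger_w


saving_vs_full_w = rack_saving_w(RACK_FANS_FULL_W, LIQUID_TO_AIR_EXCHANGER_W)
saving_vs_derated_w = rack_saving_w(RACK_FANS_DERATED_W, LIQUID_TO_AIR_EXCHANGER_W)

if __name__ == "__main__":
    print(f"Versus full-speed fans: {saving_vs_full_w / 1000:.1f} kW")
    print(f"Versus derated fans:    {saving_vs_derated_w:.1f} W")
```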

The servers wouldn’t be completely fanless; other components, such as voltage regulators, CPLDs, PCI cards, memory, and so on, would be air cooled. But a system optimized for liquid cooling could use much more efficient fans to move that remaining heat. Eight 8500 RPM fans using 1.44W each (11.52W total) would do the trick in the same 1RU system.

A liquid-to-air exchanger wouldn’t be ideal. Unlike air-cooled systems, liquid-cooled ones create high-grade heat, a source of energy a liquid-to-air exchanger would simply dissipate. Using a liquid-to-liquid exchanger instead would be a way to preserve the heat in the facility loop for additional use: comfort heating for example.

By this point, even with some toy numbers, the advantages of liquid cooling should start to become clear.

All the Other Fans

The fans inside the servers aren’t the only fans in the data hall. Data center cooling systems include CRAHs (Computer Room Air Handlers) and CRACs (Computer Room Air Conditioners), which use fans to move air around the room. Some data centers, such as the more recently constructed Equinix IBXs, have entire fan walls. The fans in these data center designs are used to flood the data hall with air cooled down to a temperature guided by the ASHRAE A1R specification. The current recommended “inlet air temperature” range for IT equipment is 18C-27C (64.4F-80.6F).
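For readers who want to check a sensor reading against that range, here is a minimal sketch. The constant and function names are our own; only the 18C-27C bounds come from the text.

```python
# Recommended inlet air temperature range quoted in the article (Celsius).
RECOMMENDED_INLET_C = (18.0, 27.0)


def c_to_f(temp_c: float) -> float:
    """Convert Celsius to Fahrenheit."""
    return temp_c * 9 / 5 + 32


def inlet_within_recommended(temp_c: float) -> bool:
    """Return True when temp_c falls inside the recommended inlet range."""
    low, high = RECOMMENDED_INLET_C
    return low <= temp_c <= high


if __name__ == "__main__":
    for reading_c in (16.0, 22.5, 29.0):
        status = "ok" if inlet_within_recommended(reading_c) else "out of range"
        print(f"{reading_c:.1f}C ({c_to_f(reading_c):.1f}F): {status}")
```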

Assuming the trend of rising TDP in systems continues, data center operators have three options for remaining within that range:

- Continue increasing air volume in the data center

- Lower air temperature even further

- Do both

Increasing air volume requires accelerating fan rotation, which increases power consumption. Lowering inlet air temperature also requires more power. Both are the opposite of what you want to do if you want to operate sustainable data centers. Adding liquid cooling to assist with heat removal is the logical next step. The more heat you remove from the source and deposit directly into liquid, the less air cooling capacity you need to supply at the facility level. (This, by the way, opens up another power savings opportunity: safely operating at higher air temperatures!)

Liquid Cooling vs Air Cooling: Quantifying the Power Savings

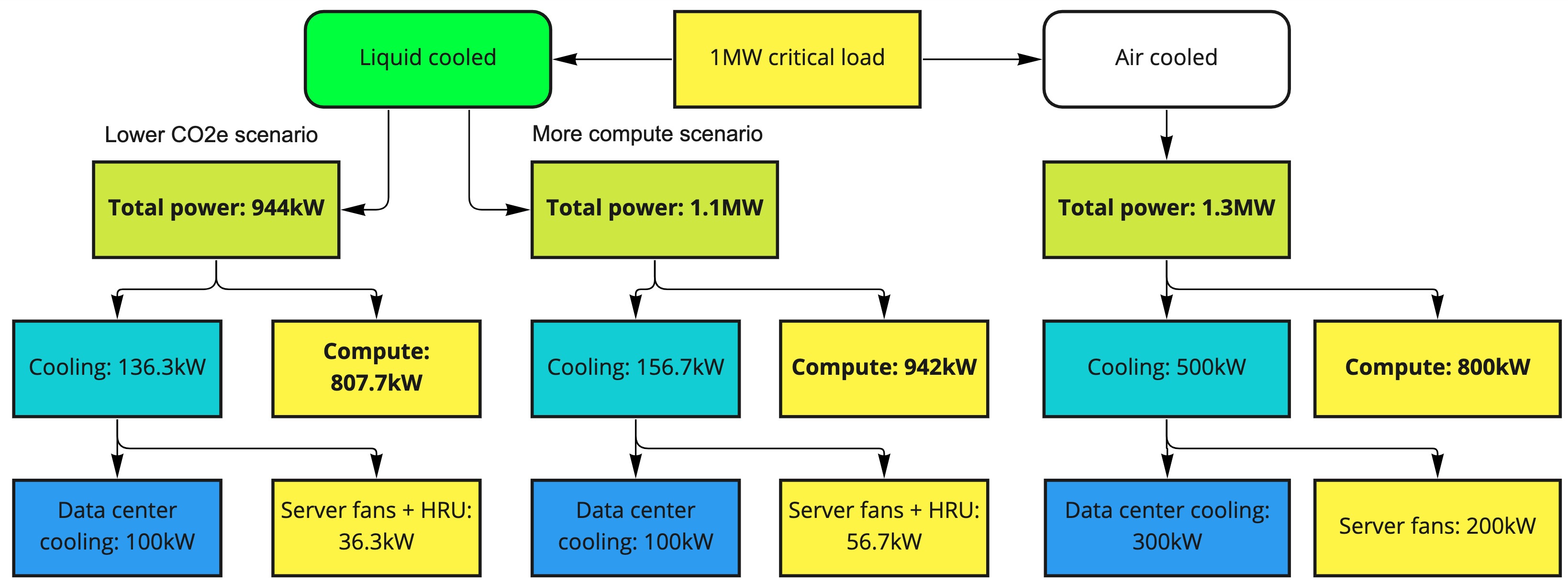

To illustrate the potential for aggregate power savings in a data center designed for liquid cooling vs air cooling, let's use an idealized model: a colocation customer with a 1MW critical load. (Critical load is the load required to power IT equipment. It doesn’t include the power used by the supporting data center mechanical and electrical gear.)

Let’s assume that the customer’s servers are the same as the 1RU server we used in the previous example, with a name-plate 1200W power supply and a worst-case 241.92W fan load. Assuming all their servers are identical, about 200kW (or 20% of the critical power load) is used by server fans. (We’ve seen real-life configurations where fans use 25% to 28%.)

A traditional air-based data center cooling system would use around 300kW to cool that 1MW critical load, bringing the total to 1.3MW. Our cooling power load is now racking up: 500kW to cool 800kW of useful compute. Thus in our worst-case scenario the 1MW critical load can power 833 servers.
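The air-cooled baseline can be worked through as follows. This sketch uses the unrounded fan share (20.16%), so its outputs land slightly off the rounded 200kW, 500kW, and 800kW figures quoted above; the variable names are ours.

```python
# Inputs from the idealized model in the article.
CRITICAL_LOAD_W = 1_000_000
SERVER_NAMEPLATE_W = 1200
SERVER_FANS_W = 241.92
FACILITY_COOLING_W = 300_000

servers_supported = CRITICAL_LOAD_W // SERVER_NAMEPLATE_W
fan_share = SERVER_FANS_W / SERVER_NAMEPLATE_W          # fraction of server power
fan_load_w = CRITICAL_LOAD_W * fan_share                # server fans, whole load
total_facility_w = CRITICAL_LOAD_W + FACILITY_COOLING_W
total_cooling_w = FACILITY_COOLING_W + fan_load_w       # facility cooling + fans
useful_compute_w = CRITICAL_LOAD_W - fan_load_w

if __name__ == "__main__":
    print(f"Servers supported: {servers_supported}")
    print(f"Fan share:         {fan_share:.1%}")
    print(f"Total facility:    {total_facility_w / 1e6:.1f} MW")
    print(f"Total cooling:     {total_cooling_w / 1000:.1f} kW")
    print(f"Useful compute:    {useful_compute_w / 1000:.1f} kW")
```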

If we take the same 1RU system but replace all the high-flow fans with just the fans required to cool components other than the CPU—in other words, the fans remaining in a direct-to-chip liquid cooled system—each system would need 969.6W.

To ensure we’re comparing apples to apples, let’s also include the 652W consumed by an appropriate liquid-to-liquid exchanger and share the burden over 15 systems. This gives us a cooling system that consumes 5.67% of total power, or 56.7kW to cool the 1MW critical load.

The facility would need an estimated 100kW to power the remaining air cooling load and the liquid-cooling system pumps, bringing the total to 1.1MW. That is, 942.8kW of useful compute requires 157kW of power for cooling. In our worst-case scenario, the 1MW critical load can fully support 987 servers.
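The liquid-cooled figures can be reconstructed with the sketch below. One assumption to flag: the 5.67% figure reproduces when the per-server cooling power (remaining fans plus the exchanger share) is divided by the 969.6W server draw, so that is the denominator used here. That is our reading of the numbers, not something the text states.

```python
# Inputs from the article's liquid-cooled scenario.
CRITICAL_LOAD_W = 1_000_000
SERVER_NAMEPLATE_W = 1200.0
HIGH_FLOW_FANS_W = 241.92     # fans removed from the air-cooled 1RU system
REMAINING_FANS_W = 11.52      # eight 1.44 W fans for the non-CPU components
EXCHANGER_W = 652.0           # liquid-to-liquid exchanger
SERVERS_PER_EXCHANGER = 15

server_w = SERVER_NAMEPLATE_W - HIGH_FLOW_FANS_W + REMAINING_FANS_W
exchanger_share_w = EXCHANGER_W / SERVERS_PER_EXCHANGER
cooling_per_server_w = REMAINING_FANS_W + exchanger_share_w
# Assumption: the quoted percentage is relative to the 969.6 W server draw.
cooling_fraction = cooling_per_server_w / server_w
per_server_total_w = server_w + exchanger_share_w
servers_supported = int(CRITICAL_LOAD_W // per_server_total_w)

if __name__ == "__main__":
    print(f"Server draw:        {server_w:.1f} W")
    print(f"Cooling per server: {cooling_per_server_w:.2f} W")
    print(f"Cooling fraction:   {cooling_fraction:.2%}")
    print(f"Servers supported:  {servers_supported}")
```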

The efficiency improvement can be leveraged in one of two ways: 356kW in power savings, consisting of a 200kW facility power reduction (20% down) and a 156kW critical load reduction; or a 200kW facility power reduction plus capacity freed up to support an additional 154 servers (that’s about 18% more servers).
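Both options can be derived from the figures already given. In this sketch the server counts (833 and 987) are the rounded values from the two scenarios, so the server difference computes to 154, and the per-server draw of a liquid-cooled system includes its share of the exchanger. Variable names are ours.

```python
# Figures carried over from the air-cooled and liquid-cooled scenarios.
CRITICAL_LOAD_KW = 1000.0
AIR_FACILITY_COOLING_KW = 300.0
LIQUID_FACILITY_COOLING_KW = 100.0
AIR_SERVERS = 833
LIQUID_SERVERS = 987
LIQUID_SERVER_W = 969.6 + 652.0 / 15   # server draw plus exchanger share

# Both options share the facility-level reduction.
facility_saving_kw = AIR_FACILITY_COOLING_KW - LIQUID_FACILITY_COOLING_KW

# Option 1: keep the original server count and shrink the critical load.
critical_load_needed_kw = AIR_SERVERS * LIQUID_SERVER_W / 1000
critical_saving_kw = CRITICAL_LOAD_KW - critical_load_needed_kw
total_saving_kw = facility_saving_kw + critical_saving_kw

# Option 2: keep the 1 MW critical load and add servers.
extra_servers = LIQUID_SERVERS - AIR_SERVERS
extra_servers_pct = extra_servers / AIR_SERVERS

if __name__ == "__main__":
    print(f"Facility saving:      {facility_saving_kw:.0f} kW")
    print(f"Critical load saving: {critical_saving_kw:.0f} kW")
    print(f"Total saving:         {total_saving_kw:.0f} kW")
    print(f"Extra servers:        {extra_servers} ({extra_servers_pct:.1%})")
```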

This can lead to a substantial emissions reduction for any customer, even with this idealized model for weighing liquid cooling vs air cooling. It’s important to recognize that the model heavily penalizes the 1RU system by using worst-case scenarios and minimal loading of the liquid-to-liquid exchanger. Real-life deployments would likely see even bigger efficiency improvements.

The Three “Scopes” of Emissions

Using liquid cooling instead of air cooling can dramatically reduce a data center’s carbon emissions—and not only the emissions associated directly with electricity use. Sustainability practitioners organize the various direct and indirect ways in which a facility, business operations, and/or products contribute to climate change into three general categories, or “Scopes of emissions.”

A data center’s Scope 1 emissions are emissions produced directly at the facility, such as emissions from backup diesel generators. As you can imagine, Scope 1 isn’t the biggest concern in the data center context, because backup generators run only rarely. Scope 3 encompasses carbon emissions that indirectly result from various activities associated with building and operating the facility: carbon produced to create the cement to construct the building’s walls, carbon produced by equipment manufacturers, carbon produced by the transportation employees use to get to work, and so on. The big one for data centers is Scope 2, which measures carbon emissions that result from producing the energy a data center operator buys to run the facilities.

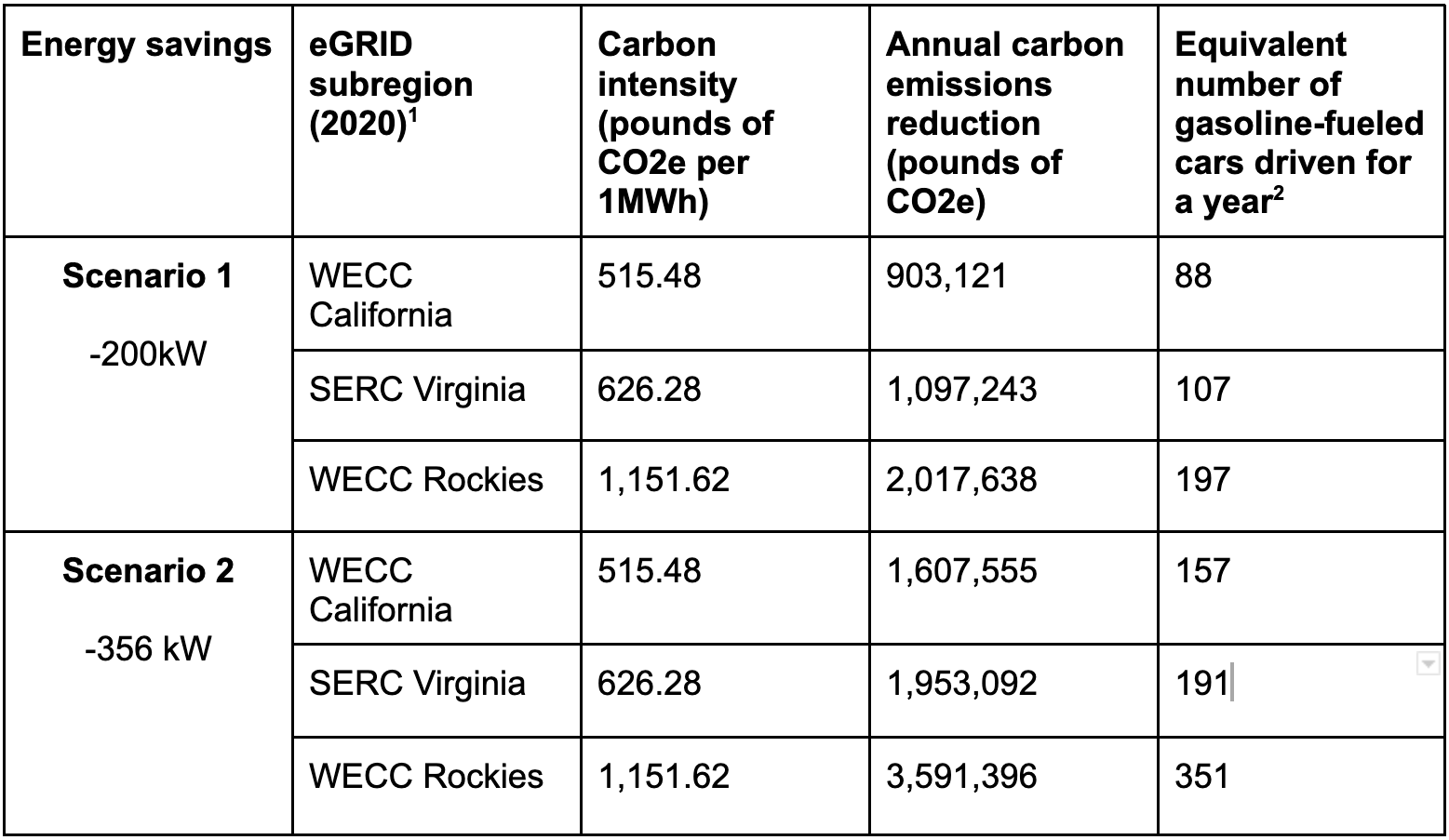

The biggest factors determining a facility’s Scope 2 emissions (besides the amount of energy it consumes) are the generation mix of the electricity supplier(s) it relies on and whether (and how) its operator sources renewable energy for its sites. Using the EPA Data Explorer for US power generation, we can model the CO2e impact of converting an air-cooled data center to a liquid-cooled one. The following table shows the carbon reduction achieved in each of the two scenarios we outlined above: the higher compute capacity scenario, where total power is reduced by 200kW while critical power remains at 1MW, and the lower-CO2e scenario, where data center facilities power is reduced by 200kW and critical power is reduced by 156kW.

The potential for CO2e reduction ranges from 903,121 lbs to 3.5 million lbs of CO2e per year. For perspective, 17.87 lb of CO2 is what gets emitted when we burn 1 gallon of gasoline.

- https://www.epa.gov/egrid

- https://www.epa.gov/energy/greenhouse-gas-equivalencies-calculator#results
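The conversion from a continuous power saving to annual CO2e is a short calculation, sketched below. The emission factor here is a hypothetical placeholder, not an eGRID value: substitute the lb/MWh figure for the relevant grid region. The function names are ours, and the gasoline equivalence uses the 17.87 lb per gallon figure quoted above.

```python
HOURS_PER_YEAR = 8760            # non-leap year, load assumed constant
LB_CO2_PER_GALLON = 17.87        # gasoline figure quoted in the article

# Hypothetical emission factor for illustration only (lb CO2e per MWh).
EXAMPLE_FACTOR_LB_PER_MWH = 800.0


def annual_co2e_lb(power_saved_kw: float, lb_per_mwh: float) -> float:
    """Annual CO2e avoided (lb) for a constant power saving in kW."""
    mwh_per_year = power_saved_kw * HOURS_PER_YEAR / 1000
    return mwh_per_year * lb_per_mwh


def gallons_of_gasoline(co2e_lb: float) -> float:
    """Gallons of gasoline whose combustion emits the same mass of CO2."""
    return co2e_lb / LB_CO2_PER_GALLON


if __name__ == "__main__":
    for saved_kw in (200.0, 356.0):   # the two scenarios from the article
        lb = annual_co2e_lb(saved_kw, EXAMPLE_FACTOR_LB_PER_MWH)
        print(f"{saved_kw:.0f} kW saved: {lb:,.0f} lb CO2e/yr, "
              f"about {gallons_of_gasoline(lb):,.0f} gallons of gasoline")
```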

Building Sustainable Corporate Infrastructure

In addition to improving the energy efficiency of its data centers, Equinix recognizes both the importance of locating them in territories served by cleaner energy grids and the urgent need to source more renewable energy than the grids currently offer.

In 2021 Equinix became the first data center operator to set both a science-based target for its operations and supply chain and a global goal to become climate-neutral across its operations by 2030. These targets build on our previous commitment to become 100% renewable by 2030.

Equinix is embracing other innovations to build more sustainable data centers. Our approach to reducing operational and value chain-embodied carbon emissions is outlined in our 2021 Sustainability Report.

Corporations are the leading buyers of renewable energy across the world. Technical infrastructure is a significant source of companies’ carbon emissions, and forward-looking technologies like the new generation of liquid cooling systems, together with innovative renewable energy contract mechanisms, can unlock the potential to make that infrastructure carbon neutral.