Welcome to the Year of Subscale

How we're working to bring the best of hyperscale deployments to specialized hardware.

In today’s public cloud-driven world, everything is about massive scale. Huge, autoscaling, Kubernetes-powered, never-ending serverless scale. Out with the terabytes and in with the zetabytes!

To drive the point home, I recently read a definition from FutureCreators stating that the cloud is nothing more than “universal access to unlimited computing at marginal cost.” Universal and unlimited are rather big concepts, I’d say!

Imagine: with the cloud we have finally invented something that you can get quickly, at high quality AND at a low price all at the same time. How is this magic even possible?

My opinion is it is only seems possible because there is no viable alternative to the universal access piece of the equation - the “get it fast” factor. Easy enough to get unlimited computing power at a great cost basis - it’ll just take you a ton of time (including much of it inside the walls of a datacenter).

But what if it were possible to stretch the cloud experience to include the off-limit no-man’s land of custom hardware, even in relatively small batches? My hunch is that you would quickly see how massive scale is yesterday’s news, and subscale is where the action is.

Call Me When You Need a Megawatt!

Lots of companies have or deploy 300, 500 or even 1000 servers. Most large banks have tens of thousands in their fleet. Ask any one of them and they’ll tell you the dirty little secret we all share: when you’re counting in hundreds or thousands, you’re dealing with a ridiculously subscale situation. Nobody worth their salt wants to innovate in bite sizes of racks or cages anymore - they’re too busy with megawatt campuses.

Of course there are cloud providers like Equinix Metal, as well as OEM’s and integrators, that will help you buy or rent a few dozen / hundred / thousand servers nearly anywhere in the world. But being subscale forces us all to swim upstream against an industry and supply chain built only for the biggest buyers.

Hyperscalers, with billions of dollars in concentrated (and fast growing) spend, rule the roost.

Slightly Special Little Snowflakes

Allow me to explain the implications (and later, the opportunities) with an example that is fresh in the gestalt of Equinix Metal: in November we sold and delivered a deal for just over 300 servers. The contract was worth a few millions dollars annually, making it one of our larger deals this year. Cool right?

The deployment consisted of four separate configs spread across four availability zones (three in the United States and one in APAC). Here’s what it looked like:

- Config #1 - A specialty database box with 30TB of high speed NVMe.

- Config #2 - A general compute workhorse, optimized for clock speed.

- Config #3 - A fleet of efficient storage machines for long term analytics.

- Config #4 - A lightweight general purpose node for ‘everything else’.

None of these configs was outrageously custom, simply the right boxes for a well known set of workloads. Standard x86 servers (in this case mainly from Dell) with the right processor, RAM and disk options.

In order to win the deal we needed to work within a 30 day deployment timeframe. Since we don’t stock these exact configs in our public cloud - nor do we happen to keep millions of dollars of spare servers around “just in case” - this meant:

- Pulling out all the stops, and cashing in all the favors, with our friends at Dell.

- Working like mad with our vendors and team to get everything in the right places on time;

- Racking / enrolling / burning in / testing and updating all the firmware;

- Delivery everything before the holiday traffic rush.

Ew, Look at that Rack Diagram

To further understand the “no pain, no gain” side of the story, all you have to do is look at our rack layouts for this deployment.

We deployed most of the servers across three locations, with a smaller footprint in Asia. For the primary locations that meant about 90 servers per location. For HA, we pushed them across several racks in each facility.

Since we leverage a full Layer 3 topology we were at least able to fill the rest of each rack with a variety of our public cloud configs. The downside is that without the brilliance of a few super-experienced datacenter technicians, our racks can easily start to look like a mid-2000’s cabling nightmare (thanks Tim, Michael, Alex, and team!)

Now, if you showed this deployment plan to a hyperscaler or a vendor that services them, they would be appalled. Nothing about this deployment makes any sense -- the size, the diversity between racks, the inefficiency of power and cooling between them all, the failure domains, the stranded and “over-priced” retail colocation expenses, the limited expansion capability. The list just goes on and on!

But we aren’t playing in hyperscale land -- we’re living in planet subscale. 19" racks, lots of variety and all the pain that comes with complex cable plants, sparing needs, ad hoc repairs, unanticipated growth, changes in "the plan" and buying datacenter space by any other measure than the megawatt.

Is Two out of Three Good Enough?

At this point, I know what you’re thinking: wouldn’t it have been easier for our client to go with a public cloud solution? I mean “universal access to unlimited computing at marginal cost” sounds really good compared to the literally hundreds of emails, shipping forms, metric ton of cardboard boxes, rushed cable and PDU orders, manual installation hours, Slack messages and such that it took us to turn 300 custom servers online in a month.

Well, it would have been easier, but it wouldn’t have made sense by any other metric. By leveraging these four slightly curated server configs, our client is able to meet their SLOs with outstanding performance, all at 1/2 or less the cost of a public cloud deployment.

So, save millions of dollars annually and get far better performance...two out of three ain’t bad, right? Well, to a developer-led business, the value of fast, universal access is crucial. Waiting weeks or months for infrastructure to come online is not only annoying, it’s a huge disadvantage.

I guess we just have to live with it: doing custom means you can't be efficient, right?

If that’s the truth, than the easy answer is: don’t do custom. Ever. Make things generic and one size fits all, or virtualize it and don't worry about it. But if we did that, our client doesn't get the performance or cost benefits they need to win with their business, and we leave millions of dollars per year on the cutting room floor.

Borrowing the Best of Hyperscale

I have a better idea: embrace these problems and solve for them!

Let's hack the delivery model for subscale by taking a play from the hyperscaler playbook and applying it to a totally different use case. What would I borrow? I would design, just like a hyperscaler, for operational efficiency and flexibility at the lowest possible layer I can control.

Hyperscalers design for efficiency by crafting from the datacenter up. Since we can’t design the datacenters, we need to invest in the lowest common layer we can control: we need to design from the rack up.

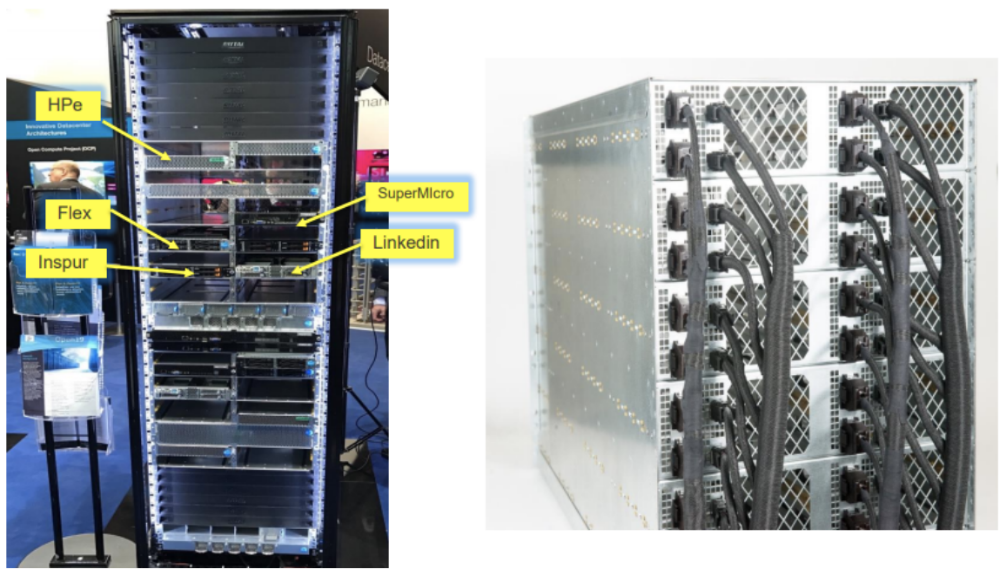

It’s pretty simple, actually: we need racks that don’t care what goes in them, we need to remove expensive power and network from each node and place it at the rack level, and we need servers that don’t take a PhD in cabling, like this:

In short, make it look like this Open19 rack deployed by our friends at LinkedIn, which removes expensive power supplies from each node, leverages a simple, mechanical design, brings massive network to each sled cheaply and makes it so the FedEx guy (or your marketing intern) can install a server node:

Of course, there are always limitations, but building around the lowest one allows us to serve a wider variety. It's also the same reason why we automate against bare metal machines versus at a hypervisor -- it’s more of a pain in the butt, but it lets our customers choose the software they want instead of us choosing the much of it for them.

This allows our customers to do what the hyperscalers do: build the hardware around the software, instead of the software around the hardware.

Our Investments for Subscale in 2019

If it seem like I have a lot of passionate opinions about subscale, you’re right! This has been my world since the early 2000’s, and unfortunately the experience hasn’t improved much. All of the real operational innovation has gone towards the hyperscale problem set.

We’re on a mission to change that. In fact, I am betting our company on the premise that the leading one or two thousands Enterprises will benefit from just the right infrastructure “tool for the job” - like the right CPU, the latest GPU or the hot new inference card that came out yesterday. They will need it in relatively small bite sizes (100’s or 1000’s of them) and in the locations that matter to their business or use case.

Forget the device or network edge and cell towers for a minute and just think about cities with millions of people and no cloud availability region: Geneva, Prague, Buenos Aires, Chicago, Jakarta, Ho Chi Min City, Greece -- and Kansas City!

In 2019 and beyond, we plan to sell a lot of 300 server deals. And also 50, 100, 1000, and 5000 server deals with both standard and custom configurations. And our goal is to make that experience both economical (for us and our clients) and absolutely fantastic.

So here is what is on our hit list for 2019:

- Hardware Delivery - We need a not shitty delivery system for subscale deployments. We are not doing hyperscale things, so things like pre-built L11 racks in shipping containers don’t fit.

- Remove the Cables - Seriously. Cables suck when you’re trying to get a guy or gal in Jakarta or Pittsburgh to do them right at 2AM in the morning.

- Pizza Tracker - Okay not really pizza but if you can track your “custom” pizza getting made and delivered why can't you have the same experience with $10k servers (that look a lot like pizza boxes)?

- Practice - We will crawl, then walk, then run before we sprint at this game. We plan to get really good at adding +1 servers into any market in the world in 5 days or less.

- Transparency - We expect to learn a lot, and fail a lot. We will share our successes and failures along the way and ask for your good ideas and feedback.

- Community - We can’t do this on our own and we know we’re not the only service provider, enterprise, startup or SaaS company suffering from the subscale quagmire.

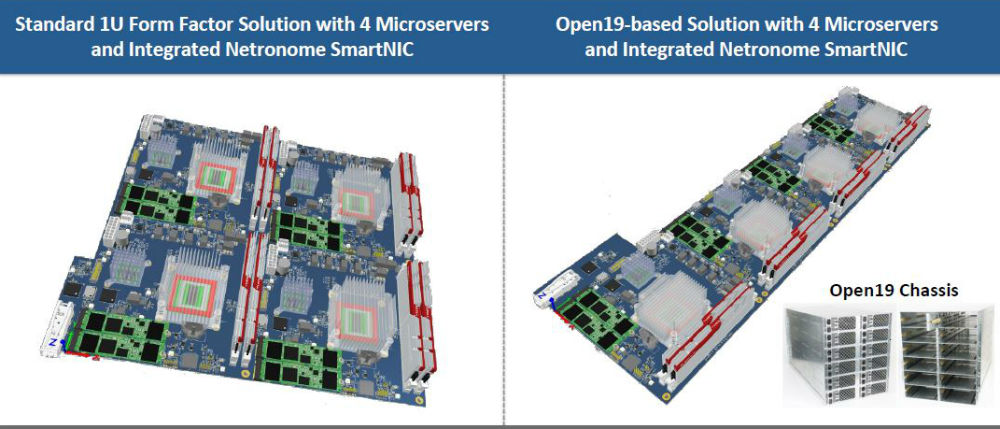

The good news is, we already have our first deployments going in to market this March. We’ve designed a new microserver (in Intel, AMD and Ampere variants) that includes a SmartNIC from our friends at Netronome, and it will be deployed to Open19 racks in all of our facilities.

Instead of shipping 1000 of them at a time, we’ll add them one at a time, and get really good at doing it quickly, cheaply, and transparently.

Are you Subscale? Swipe Right and Let’s Talk.

If you’re interested in putting (or having) hardware in places -- and you’re not one of the 10 hyperscale customers in the world -- we’d love to hear from you. We share and embrace your challenges, war stories and frustrations and would love to hack the delivery model to make it work for you.