How Equinix Metal is Bringing Cloud Networking to Bare Metal Servers

Networking models and features for virtualized clouds have evolved dramatically in the last decade -- while bare metal networks are largely the same as they were in 2005. At Metal, we’re changing things up a bit.

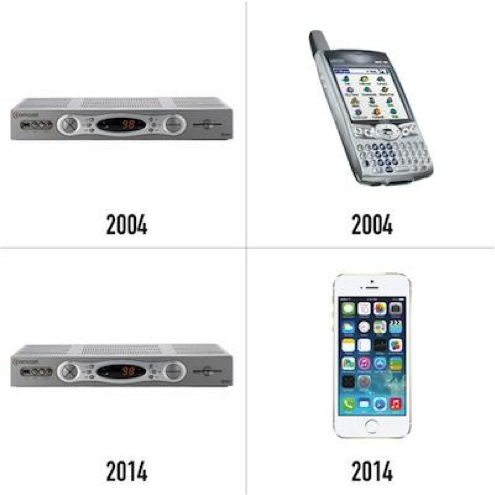

When I think about the current state of dedicated hosting infrastructure, I’m reminded of an article from The Verge that re-posts a famous Internet meme comparing a cable box and a smartphone, 10 years apart.

The 2004 models are a slow, power-hungry Motorola clunker and a Palm Treo. Pictured beneath them is 2014’s state of the art, a full decade later: the same Motorola box and a brand-new iPhone. Not a lot of visible innovation going on in the cable world!

I think this analogy rings pretty true in the world of dedicated hosting. Network technologies, customer demands, and core datacenter facilities have changed dramatically over the past ten years, particularly in the world of virtual compute clouds. Just think of the paradigm shift AWS brought with its virtual private cloud, elastic IP addressing, and API-controllable network assets. And yet, the “state of the art” for dedicated hosting / bare metal networks looks pretty much the same in 2015 as it did in 2005! Sure, prices are lower, but otherwise it would be hard to guess the era when comparing a typical dedicated hosting feature page today with one from 10 or 15 years ago.

Around the office, we talk about the archetypal industry competitor, “The Phone Company”: a company that, in spite of decades of some of the best laboratories and PhDs in its lineage, has little more to show for itself than Call Waiting and Caller ID. You could probably throw the “Cable Company” into that bucket these days too, but what about “The Hosting Company”?

A Bit of Perspective

In the months leading up to Packet’s formation, Zac and I had many lively lunchtime conversations (debates?), during which we marveled at how much both the IaaS landscape and the underlying infrastructure had evolved since our last go-around building things from scratch. It was pretty obvious that virtual compute infrastructure (i.e., “pure cloud”) had leapfrogged all other types of infrastructure services, leaving colocation and dedicated servers in the dust. The underlying tools that power much of this magic, including purpose-built facilities, dense server instances, and powerful networking hardware and software, had also moved into a different league. It's as if 10 years of Internet time had created a clear generational gap.

As a lifelong network and layer-zero infrastructure nerd, I hold the speeds and feeds of the latest crop of switches and datacenters near and dear to my heart. In relatively inexpensive 1U/2U form factors, we now have feature sets previously available only in hugely expensive backbone routers occupying full racks. Likewise, the current inventory of datacenter properties is truly impressive: purpose-built campuses, with ultra-reliable, hyper-efficient power and cooling, are replacing hastily retrofitted office buildings.

At the same time, none of these advancements has materially changed how basic bare metal servers are provisioned and sold. It's still pretty much dumb layer-2 networks, no real automation, and an inflexible set of control options.

We challenged ourselves and asked: with the right software stack and user experience, could we fundamentally change the experience of buying, consuming, and managing dedicated servers? How could we bring cloud-like capabilities to bare metal? How could we bring this essential building block of cloud infrastructure into the modern age?

It's All About the Network - Duh!

A prime example of the stagnation I describe is how typical hosting vendors (hosters, for short!) approach the basic topics of segmentation and multi-tenancy. It's not uncommon to find hundreds or thousands of servers, belonging to many unrelated customers, lumped together inside the same broadcast domain. Few safeguards exist to prevent a customer from causing an outage by hijacking IP addresses or creating a broadcast storm, whether out of malice or through honest misconfiguration, and the potential for “man in the middle” attacks abounds. Some of the more enterprising network operators segment customers into per-customer Virtual LANs (VLANs); while this addresses some security concerns, scaling and preventing outages remain a challenge. Large, dated layer-2 networks impose seriously hard topological restrictions (want ring or Clos topologies? Dream on!), and they are likely to tax your switch fabric as it learns the MAC addresses of many thousands of hosts. I won’t even talk about the shortcomings of the Spanning Tree Protocol as a redundancy mechanism, so as not to incite violence among readers. In short, layer-2 VLANs simply don't scale in big multi-tenant environments and, more importantly, can't offer the feature set that cloud users have come to expect from their networks.
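
To put a number on that scaling ceiling: an 802.1Q tag carries a 12-bit VLAN ID, and IDs 0 and 4095 are reserved, so a single layer-2 domain can hold at most 4,094 per-customer VLANs. The sketch below is a hypothetical allocator (the class and customer names are made up for illustration) that hands out one ID per customer until that space runs dry.

```python
VID_BITS = 12                          # width of the 802.1Q VLAN identifier
RESERVED = {0, (1 << VID_BITS) - 1}    # 0 = priority-tagged frames, 4095 = reserved
USABLE = (1 << VID_BITS) - len(RESERVED)


class VlanAllocator:
    """Hypothetical per-customer VLAN allocator for one shared layer-2 domain."""

    def __init__(self):
        # Lazily walk the ID space, skipping the reserved values.
        self._free = (vid for vid in range(1 << VID_BITS) if vid not in RESERVED)
        self.assigned = {}

    def assign(self, customer: str) -> int:
        """Return the customer's VLAN ID, allocating a new one on first use."""
        if customer not in self.assigned:
            try:
                self.assigned[customer] = next(self._free)
            except StopIteration:
                raise RuntimeError("VLAN ID space exhausted") from None
        return self.assigned[customer]


alloc = VlanAllocator()
print(USABLE)                                                  # 4094
print(alloc.assign("customer-a"), alloc.assign("customer-b"))  # 1 2
```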

So What to Do About It? Enter Layer 3...

While a flat layer-2 topology works fine on a small enterprise LAN, it’s certainly no way to run a multi-tenant datacenter at scale. These networks are remnants of a bygone era when there were simply no good options for routing at the rack level: routers were enormously complex, big-iron devices costing six figures, while switches were relatively inexpensive, “dumb” wiring-closet devices that either could not route IP or did a very poor job of it. Hence the old adage to “let the routers route and switches switch” (credit due to AboveNet founder Dave Rand, et al.).

In contrast, the current crop of 1U/2U top-of-rack switches from Arista, Cisco, Juniper, and friends is a completely different beast and can function as reliable IP (or MPLS, VXLAN, …) edge devices. We believe that by connecting every bare metal server to such devices, we can deliver stability and scalability without the “noisy neighbor” problems that have haunted similar product offerings in the past. The key benefit is that our topology doesn't constrain us; it enables us. Convergence is snappy, new products and services can be built into the network, and scaling out the network is a breeze, because it hinges on tried-and-true IP routing protocols.
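
One way to picture routing at the rack edge: each server's address lives in a tiny routed subnet that terminates on the top-of-rack switch, so ARP and broadcast traffic never leave that link, while the switch advertises a single summary route upstream. The sketch below uses Python's standard `ipaddress` module; the /24 rack block and the /31 per-server links are hypothetical choices for illustration, not a description of Metal's actual addressing plan.

```python
import ipaddress

# Hypothetical address block routed to one rack's top-of-rack (ToR) switch.
RACK_BLOCK = ipaddress.ip_network("10.20.0.0/24")

# Carve it into /31 point-to-point links (RFC 3021): one address on the ToR, one on the server.
# Each server sits alone on its own routed link, so its ARP and broadcast traffic stay there.
server_links = list(RACK_BLOCK.subnets(new_prefix=31))


def link_for(server_index: int):
    """Return (gateway_on_tor, server_address) for the n-th server in the rack."""
    gateway, server = server_links[server_index]  # a /31 holds exactly two addresses
    return gateway, server


# Upstream, the ToR only has to advertise one summary route instead of every server.
summary = list(ipaddress.collapse_addresses(server_links))

print(len(server_links))   # 128
print(link_for(1))         # (IPv4Address('10.20.0.2'), IPv4Address('10.20.0.3'))
print(summary)             # [IPv4Network('10.20.0.0/24')]
```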

High Availability and Bonding

At Equinix Metal, we believe that high availability is a first-class citizen. Although most customers design for horizontal scaling, we feel that having high confidence in each component of your stack is critical. Here's what we've come up with:

Counter to common wisdom, a single host shouldn’t live or die by the switch it connects to. With Metal, every bare metal server connects to a redundant pair of switches using 802.3ad link aggregation. Under normal operating conditions, each server gets a bonded 2G (2 x 1G) or 20G (2 x 10G) interface, with uplinks fat enough to use it. If a switch suffers a hardware failure or undergoes maintenance for a software upgrade (bugs are still a thing, as much as we like to deny it!), the server’s bandwidth is halved, but the server stays online.
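
A toy model of that failover behavior, assuming two hypothetical 10G links (`eth0`, `eth1`) landing on two hypothetical switches (`tor-a`, `tor-b`). The CRC32 flow hash is a simplified stand-in for a real transmit hash policy; what it illustrates is that 802.3ad places each flow on a single member link, so losing one switch halves the bond's aggregate capacity while every flow still has a live path.

```python
import zlib
from dataclasses import dataclass


@dataclass
class Member:
    name: str          # hypothetical NIC name
    switch: str        # which switch of the redundant pair this link lands on
    speed_gbps: int
    up: bool = True


def active(members):
    return [m for m in members if m.up]


def bond_capacity(members) -> int:
    """Aggregate bond bandwidth: the sum of member links that are still up."""
    return sum(m.speed_gbps for m in active(members))


def pick_member(flow, members) -> str:
    """Pin a flow to one live link (simplified stand-in for a real transmit hash)."""
    links = active(members)
    if not links:
        raise RuntimeError("all bond members are down")
    key = "|".join(map(str, flow)).encode()
    return links[zlib.crc32(key) % len(links)].name


def fail_switch(members, switch: str) -> None:
    """Simulate a switch failure or maintenance window: its links go down."""
    for m in members:
        if m.switch == switch:
            m.up = False


bond = [Member("eth0", "tor-a", 10), Member("eth1", "tor-b", 10)]
print(bond_capacity(bond))                                        # 20
fail_switch(bond, "tor-a")
print(bond_capacity(bond))                                        # 10
print(pick_member(("198.51.100.10", "203.0.113.7", 443), bond))   # eth1
```

One consequence worth keeping in mind: because each flow is pinned to one link, a single stream tops out at one member's speed (10G here), even on a 20G bond.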

By the same token, I believe a host shouldn’t need a mess of interfaces and static routes that take an advanced degree to decipher just to separate its front end from its back end, as best practice for a typical LAMP stack dictates, so we’re eliminating all of that. A simple default route is all you get; we use ACLs and virtual routing tables on top of our all-layer-3 topology to make your back-end networking "Just Work" while keeping it private and secure.
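
A minimal model of that idea, assuming hypothetical tenant names, prefixes, and rack labels: the host sends everything to its default gateway, and the switch decides what happens by consulting the sending tenant's own routing table, with an ACL dropping private traffic aimed at anyone else's space. It illustrates the concept only; it is not Metal's actual switch configuration.

```python
import ipaddress

# Hypothetical private routing tables (one VRF per tenant) held by the top-of-rack switch.
PRIVATE_VRFS = {
    "tenant-a": {ipaddress.ip_network("10.1.0.0/25"): "rack-12"},
    "tenant-b": {ipaddress.ip_network("10.1.0.128/25"): "rack-7"},
}
PRIVATE_SPACE = ipaddress.ip_network("10.0.0.0/8")


def forward(tenant: str, dst: str) -> str:
    """Decide what happens to a packet the host simply sent to its default gateway."""
    addr = ipaddress.ip_address(dst)
    if addr in PRIVATE_SPACE:
        vrf = PRIVATE_VRFS.get(tenant, {})
        # Longest-prefix match, but only within the sending tenant's own table.
        matches = [net for net in vrf if addr in net]
        if not matches:
            return "drop (ACL: outside tenant's private table)"
        best = max(matches, key=lambda net: net.prefixlen)
        return f"private via {vrf[best]}"
    # Anything else follows the normal public routing table.
    return "public via internet table"


print(forward("tenant-a", "10.1.0.5"))     # private via rack-12
print(forward("tenant-a", "10.1.0.200"))   # drop (ACL: outside tenant's private table)
print(forward("tenant-a", "203.0.113.7"))  # public via internet table
```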

Whether you use your private addresses for inter-server traffic or forget to (as is often the case!), we’re making traffic between servers in the same datacenter free. Exchanging a lot of traffic with a SaaS provider that's also in our datacenter? Have at it; that’s free too. Because we wrote our operations and support systems from the ground up, we took the liberty of replacing conventional SNMP-based port counters, which only tell you how many bytes crossed an interface and not where they went, with sFlow and destination-based billing. This also makes the deep analytics we can provide to each customer really fun, and we'll be exposing some of that soon.
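
A rough sketch of how sampled flow data can drive destination-based billing. sFlow exports roughly one packet header out of every N packets, so scaling each sampled frame length by N gives a traffic estimate, and the destination address decides the billing bucket. The sampling rate, the "local" prefixes (private space plus a documentation-range block), and the egress-only view are all assumptions made for illustration.

```python
import ipaddress
from collections import Counter

# Hypothetical values: 1-in-1,000 packet sampling, and prefixes standing in for
# "inside the same datacenter" (private space plus a documentation-range public block).
SAMPLING_RATE = 1000
LOCAL_PREFIXES = [
    ipaddress.ip_network("10.0.0.0/8"),
    ipaddress.ip_network("198.51.100.0/24"),
]


def is_local(ip: str) -> bool:
    addr = ipaddress.ip_address(ip)
    return any(addr in net for net in LOCAL_PREFIXES)


def classify_egress(samples, sampling_rate=SAMPLING_RATE) -> Counter:
    """Estimate egress bytes per billing bucket from sampled (src, dst, frame_len) records.

    Each sample stands for roughly `sampling_rate` packets, so its frame length is
    scaled up by that factor; the destination address picks the bucket.
    """
    totals = Counter()
    for _src, dst, frame_len in samples:
        bucket = "free" if is_local(dst) else "billable"
        totals[bucket] += frame_len * sampling_rate
    return totals


samples = [
    ("10.1.0.5", "10.1.0.200", 1500),          # private, server to server
    ("198.51.100.10", "198.51.100.20", 1000),  # public addresses, same datacenter
    ("198.51.100.10", "203.0.113.7", 1500),    # leaves the datacenter
]
print(classify_egress(samples))
```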

The Intelligent Platform Management Interface (IPMI), commonplace on modern server hardware, is a powerful toolset for “out of band” functions such as hardware telemetry, power cycling, and remote console access, and one that is often poorly implemented in multi-tenant environments. We’ve seen IPMI either left exposed to the public Internet, where it’s susceptible to a myriad of vulnerabilities (we love our server manufacturers, but they didn’t exactly design this stuff with Internet-grade security in mind), or disabled altogether. We’ve implemented a physically separate IPMI network, locked down tightly and accessible only to staff and our internal management systems. Not to worry, though: our customer portal exposes all of the features of IPMI without any of the security hassle.
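
A hedged sketch of the pattern described above: the customer asks the portal for an action, the portal checks ownership, and only an internal management host on the isolated network talks to the BMC. The inventory, customer names, service account (`oob-svc`), and action names are hypothetical; the `ipmitool` options and subcommands (`-I lanplus`, `-H`, `-U`, `-E`, `chassis power cycle`, `sdr list`, `sol activate`) are standard ones. This is not Metal's portal code.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Server:
    owner: str
    bmc_addr: str  # BMC address on the isolated out-of-band network


# Hypothetical inventory; 192.0.2.0/24 is a documentation range.
INVENTORY = {"srv-01": Server(owner="alice", bmc_addr="192.0.2.11")}

# Portal actions mapped to standard ipmitool subcommands.
ACTIONS = {
    "power_cycle": ["chassis", "power", "cycle"],
    "sensors": ["sdr", "list"],
    "console": ["sol", "activate"],
}


def build_ipmi_command(customer: str, server_id: str, action: str) -> list:
    """Return the ipmitool command an internal management host would run for a portal request."""
    server = INVENTORY[server_id]
    if server.owner != customer:
        raise PermissionError("customer does not own this server")
    if action not in ACTIONS:
        raise ValueError(f"unsupported action: {action}")
    # -E makes ipmitool read the BMC password from the IPMI_PASSWORD environment
    # variable on the management host; the customer never sees it or reaches the BMC.
    return ["ipmitool", "-I", "lanplus", "-H", server.bmc_addr,
            "-U", "oob-svc", "-E", *ACTIONS[action]]


print(" ".join(build_ipmi_command("alice", "srv-01", "power_cycle")))
```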

IPv6

Let’s face it, IPv6 is here to stay. While the reports of IPv4’s death are greatly exaggerated, I do believe IPv4-only content is about to feel the pain. As broadband ISPs deploy more carrier-grade NAT to cope with address exhaustion, performance will suffer, because that translation runs on very expensive, performance-constrained router cards. And as entire communities are masqueraded behind a single IP address, abuse tracking and filtering become difficult.

While the writing is clearly on the wall, only a small handful of hosters ship their machines and VMs with IPv6 enabled. At some, it’s a manual configuration, available only by special request (and incessant nagging and escalations to the one engineer at the company familiar with the technology). At many more, it’s not supported at all, because of an installed base of antiquated routers and/or management software that doesn't like big, long, messy IP addresses.

Here at Equinix Metal, every server we ship has both an IPv4 and an IPv6 address. As we introduce our suite of IP routing and load balancing options, we’ll make the transition to IPv6 really simple, even for IPv6-unaware legacy applications.
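
As one small, generic illustration of the kind of friction legacy code hits on a dual-stack host (not a description of Metal's upcoming tooling): on a dual-stack IPv6 listener, IPv4 clients appear as IPv4-mapped addresses such as `::ffff:198.51.100.10`. A normalization step like the hypothetical `normalize_client` below hands code that only understands dotted quads the address it expects.

```python
import ipaddress


def normalize_client(addr: str) -> str:
    """Return a dotted-quad string for IPv4 peers seen through a dual-stack socket.

    IPv4-mapped IPv6 addresses (::ffff:a.b.c.d) are unwrapped to the embedded IPv4
    address; genuine IPv6 and plain IPv4 addresses pass through unchanged.
    """
    ip = ipaddress.ip_address(addr)
    if ip.version == 6 and ip.ipv4_mapped is not None:
        return str(ip.ipv4_mapped)
    return str(ip)


for peer in ["::ffff:198.51.100.10", "2001:db8::25", "203.0.113.7"]:
    print(peer, "->", normalize_client(peer))
```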

Thoughts on Overlays, Agents, and Agility

My esteemed colleague Mr. Laube received many comments in response to his “How We Failed at OpenStack” blog post, among them the suggestion that all of the capabilities I describe above are already available in various “SDN” software packages. While that's partly true, we would have done our customers a disservice had we accepted that as our answer. As a true bare metal provider, our goal is to deliver all of our networking magic to a “bare” host, without the need for complex hypervisors or software agents running on the machine. Likewise, we must not let down our guard in protecting each server’s physical network from its neighbors by “simplifying” or “flattening” our topology. If a customer wants to deploy a private cloud environment with an overlay network running on top of the Metal infrastructure, that is, of course, a configuration we’ll encourage and support.
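
For customers who do build an overlay, the overlay's traffic is just encapsulated packets riding the routed network underneath. As a generic illustration (standard VXLAN from RFC 7348, not something Metal requires or provides), here is the 8-byte header an overlay endpoint prepends, inside a UDP datagram, to each tenant Ethernet frame. Its 24-bit VNI also shows why overlays sidestep the 4,094-ID ceiling of 802.1Q VLANs: about 16.7 million segments are addressable.

```python
import struct

VXLAN_UDP_PORT = 4789   # IANA-assigned UDP destination port for VXLAN
FLAG_VNI_VALID = 0x08   # "I" flag: the VNI field is valid


def vxlan_header(vni: int) -> bytes:
    """Build the 8-byte VXLAN header (RFC 7348) that precedes the inner Ethernet frame."""
    if not 0 <= vni < (1 << 24):
        raise ValueError("VNI must fit in 24 bits")
    # Word 1: flags (8 bits) + 24 reserved bits. Word 2: VNI (24 bits) + 8 reserved bits.
    return struct.pack("!II", FLAG_VNI_VALID << 24, vni << 8)


def parse_vni(header: bytes) -> int:
    """Extract the VNI from a VXLAN header, checking the valid-VNI flag."""
    flags_word, vni_word = struct.unpack("!II", header[:8])
    if not (flags_word >> 24) & FLAG_VNI_VALID:
        raise ValueError("VNI flag not set")
    return vni_word >> 8


hdr = vxlan_header(5000)
print(hdr.hex())        # 0800000000138800
print(parse_vni(hdr))   # 5000
```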

Conclusion

I hope this post is interesting and offers some transparency into the secret sauce that makes the Equinix Metal network awesome. This is just the tip of the iceberg, however: we plan to keep writing about our thought process for designing our server networks and the racks that serve them. Stay tuned for some real statistics on how we’ve made our network unique and legacy-free further up the stack, extending to our datacenters and core network!