How an Event-Driven Architecture Ensures App Speed and Scalability

What you need to know about this go-to design pattern for distributed real-time apps, including an example of what implementation may look like using Apache Kafka.

Event-driven architecture (EDA) is a software design pattern in which systems interact by reacting to “events” as they occur. An event is triggered when a user performs an action or a change happens in a system. Different systems listen for such events and react to them independently and asynchronously. While processing an event, a system might publish new events of its own for other systems to process.

This architecture pattern is crucial when building responsive and scalable decoupled systems. EDA is a go-to architecture in scenarios like e-commerce order processing, Internet of Things (IoT) data collection, user registration and authentication, notification systems, stock market trading, real-time analytics, online gaming and event-driven microservices.

In this article, you'll learn more about EDA, including its benefits and drawbacks and when to consider using it in your systems. You'll also learn how EDA can help you build decoupled and scalable systems, with some practical examples using Apache Kafka, a popular event streaming platform.

Is EDA Right for Your Workload?

If you’re building modern software that needs to be scalable, responsive and flexible, EDA is often a good choice. However, like any architecture pattern, it can't be applied blindly to every application. You must first consider the architecture's benefits and drawbacks before deciding whether to use it.

Pros of EDA

The following pros highlight a few ways EDA may benefit your application:

- Loose coupling between components: In EDA, different systems act independently of one another and only need to know about the emitted event to which they react. This decoupling of systems makes it easy to develop, maintain and deploy individual components without worrying about how they might impact other systems. This decoupling is particularly useful for teams working on a shared system, allowing each to focus on their own components without affecting others.

- Seamless integration across disparate systems: By communicating through events, different systems and technologies can work together without directly depending on one another. These events let you use the best technology stack for each component, making the overall system more adaptable and future-proof.

- Real-time processing and responsiveness: Unlike traditional systems that often need to poll other systems for changes or updates, EDA lets other systems notify your system of new events as soon as they happen. These real-time notifications let your system immediately process events and provide feedback, which can be critical for scenarios like fraud detection and real-time analytics.

- Reliability and fault tolerance: Since components are loosely coupled and communicate only through events, one component going down won't bring down the other components in the system. They can continue functioning while the faulty component is restarted or replaced. Once it's back, it can process any events it missed while unavailable, provided the event broker retained them.

- Ability to react asynchronously and independently to events: Because components react to events asynchronously and independently, EDA is well suited to scaling. As load increases, you can scale up only the components that handle the increased volume while leaving the others unchanged.

Cons of EDA

While EDA comes with many advantages, make sure you consider the following cons before using it in your application:

- More complexity than in monolithic architectures: While EDA helps build more responsive and decoupled applications, it makes them more complicated than monolithic applications. A monolithic application packages all its functionality into a single deployable unit, which typically runs on one or a few servers. In contrast, an EDA application consists of many smaller components distributed across servers, plus an event broker that carries events between them.

- Event ordering and consistency challenges: Because EDA decouples applications so they can process messages asynchronously, it's difficult to ensure that events are processed in the correct order. You might run into cases where events are processed out of order or are processed multiple times. The system needs to be designed so that such cases don't create inconsistencies in the application's data.

- Issues are often more complicated to troubleshoot: In a traditional API-based system, you can often troubleshoot issues relatively quickly by looking at a single application's logs. However, because events are processed asynchronously and pass through multiple components, finding the root cause of an issue takes more time and effort. You need effective logging that lets you follow an event across all the components in an EDA application.

Strategies to Manage the Complexity of EDA

The drawbacks mentioned above might make event-driven architecture less appealing because of its complexity. However, if your team manages the complexity appropriately, you can still reap the benefits of using EDA in your applications. The following are some strategies that teams can use to manage complexity:

- Clearly define and document event schemas: Use a standardized event schema to remain consistent across different components when defining events. Depending on your data format, you can use JSON Schema, Apache Avro or Protocol Buffers to define your event schemas. These schemas should also be explicitly versioned so that future changes don't break existing systems. You should also document these schemas clearly, especially if you’re developing across multiple teams.

- Ensure event ordering and consistency: When designing an EDA application, each component should ideally be idempotent. This idempotency ensures they can handle duplicate events without creating inconsistencies in the data. If events need to be in order, you can use event sourcing to store sequential events, which can be processed and later replayed in order.

- Implement robust cross-system logging and monitoring: Using centralized logging for all the components in your EDA application simplifies troubleshooting. Tools like Grafana Loki, the ELK Stack and Splunk gather logs from multiple systems and aggregate them so that you can easily browse logs across systems. You can also use tools like Prometheus and Grafana to collect metrics and perform health checks on components in the system, helping you proactively identify issues in the application.
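To make the idempotency strategy more concrete, here's a minimal Python sketch of a consumer that records the IDs of events it has already applied and ignores redelivered duplicates. It assumes every event carries a unique event_id assigned by its producer; the InventoryConsumer class and its fields are hypothetical, and a real consumer would persist processed IDs durably (ideally together with the state change) instead of keeping them in memory.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass(frozen=True)
class Event:
    event_id: str   # hypothetical: a unique ID assigned by the producer
    sku: str
    quantity: int


@dataclass
class InventoryConsumer:
    """Hypothetical consumer that reduces stock when an order event arrives."""
    stock: dict[str, int]
    processed_ids: set[str] = field(default_factory=set)

    def handle(self, event: Event) -> bool:
        """Apply the event once; return False if it was a duplicate."""
        if event.event_id in self.processed_ids:
            return False  # already applied: a redelivery has no effect
        self.stock[event.sku] = self.stock.get(event.sku, 0) - event.quantity
        self.processed_ids.add(event.event_id)
        return True


consumer = InventoryConsumer(stock={"sku-1": 10})
order = Event(event_id="evt-42", sku="sku-1", quantity=3)
consumer.handle(order)
consumer.handle(order)  # the broker redelivers the same event
print(consumer.stock)   # {'sku-1': 7}
```

Because the duplicate check and the state change happen together, processing the same event twice leaves the stock level exactly as if it had been delivered once.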

Now that you understand some of the benefits and drawbacks of event-driven architecture, we’ll cover the components that make up EDA and how they integrate to create scalable, decoupled systems.

How Event-Driven Architecture Enables Scalable, Decoupled Systems

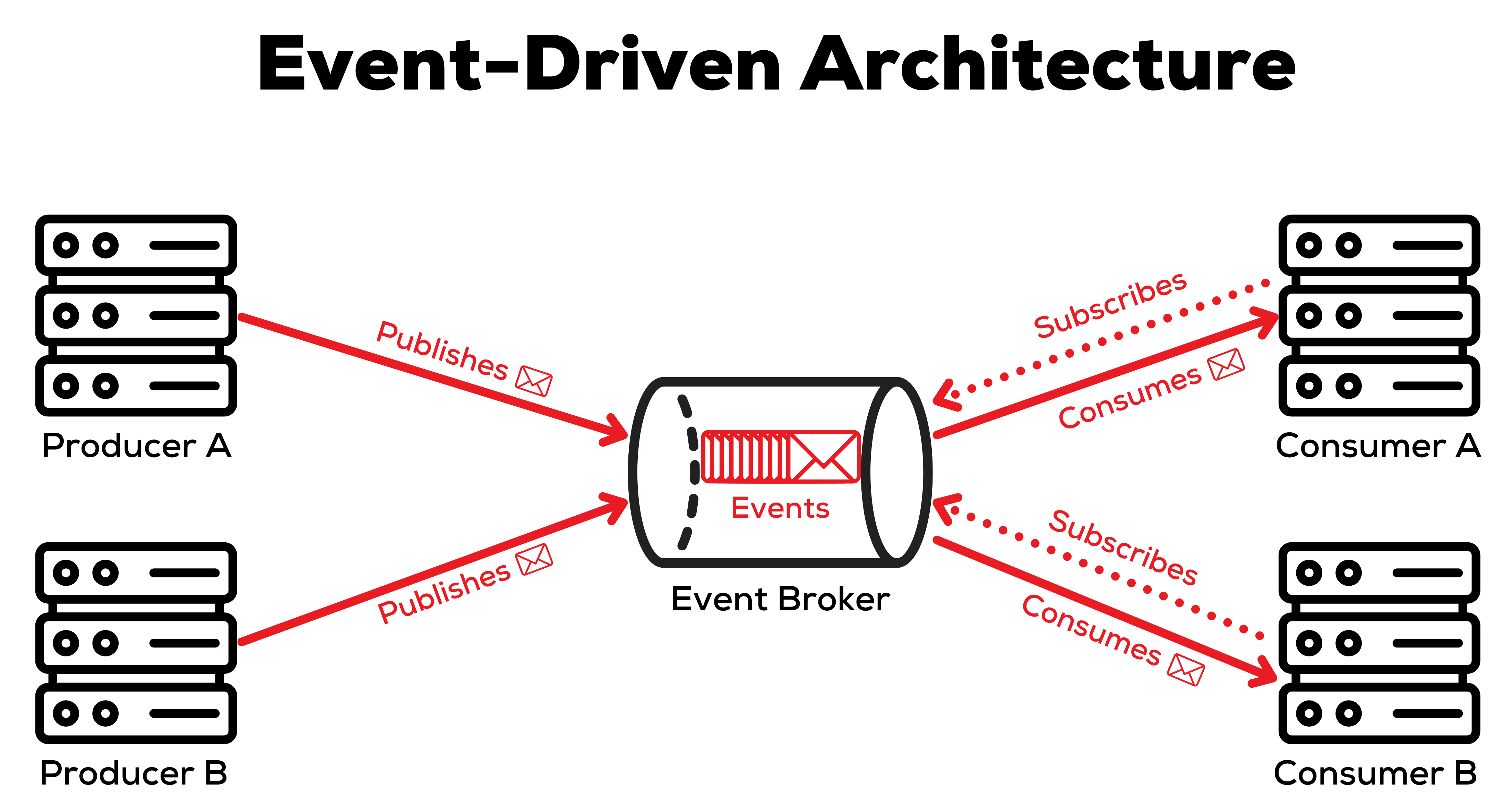

Event-driven architecture decouples services using an event broker: instead of communicating with one another directly, components send messages through this middleware service.

Events

At the heart of EDA are events. An event is sent whenever a change or action occurs in one of the system's components. Events are typically sent to the event broker, which then distributes them to other components in the system for consumption.

There are different types of events used in EDA, but the following are some of the most common ones:

- Simple events represent individual actions or state changes in a system, such as a user adding an item to their wishlist on an e-commerce website.

- Composite events combine multiple simple events to represent a more complex event. For example, an e-commerce website's "Checkout Complete" composite event consists of several simple events, such as adding items to a cart, entering delivery information and paying.

- Temporal events are triggered at specific times, such as after a delay or on a schedule. They might be used to send a daily report at the end of every weekday.

- Error events notify other components of an error. For example, a payment service might notify other components if a payment fails.

- System events indicate system infrastructure and state changes, like resource allocation, deallocation or scaling. For example, you can send an event when a new server gets added to a load balancing pool.
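Whatever their type, events are easier to manage when they share a common envelope. The following Python sketch shows one possible shape, assuming a JSON encoding and hypothetical field names (type, payload, schema_version, event_id, occurred_at). It also shows how a composite "CheckoutComplete" event could be derived once all of its simple events have been seen for a cart.

```python
from __future__ import annotations

import json
import uuid
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone


@dataclass(frozen=True)
class EventEnvelope:
    """Hypothetical envelope shared by every event type in the system."""
    type: str                   # e.g. "ItemAddedToCart"
    payload: dict
    schema_version: int = 1     # bump when the payload shape changes
    event_id: str = field(default_factory=lambda: str(uuid.uuid4()))
    occurred_at: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )

    def to_json(self) -> str:
        return json.dumps(asdict(self))


# The simple events that must all occur before checkout is complete
REQUIRED_FOR_CHECKOUT = {"ItemAddedToCart", "DeliveryInfoEntered", "PaymentSucceeded"}


def checkout_complete(events: list[EventEnvelope], cart_id: str) -> EventEnvelope | None:
    """Return a composite "CheckoutComplete" event once every required simple
    event for this cart has been seen; otherwise return None."""
    seen = {e.type for e in events if e.payload.get("cart_id") == cart_id}
    if REQUIRED_FOR_CHECKOUT <= seen:
        return EventEnvelope("CheckoutComplete", {"cart_id": cart_id})
    return None


history = [
    EventEnvelope("ItemAddedToCart", {"cart_id": "c1", "sku": "sku-1"}),
    EventEnvelope("DeliveryInfoEntered", {"cart_id": "c1"}),
]
print(checkout_complete(history, "c1"))  # None: payment hasn't happened yet
history.append(EventEnvelope("PaymentSucceeded", {"cart_id": "c1"}))
print(checkout_complete(history, "c1").type)  # CheckoutComplete
```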

Producers

Events are triggered based on an action or change in state in a system component. When a component causes an event to be triggered, it's called a producer.

For example:

- An Internet of Things (IoT) sensor can be a producer that sends events when it detects changes in its environment, such as a temperature sensor sending a "Temperature Change" event when the temperature changes.

- An identity provider (IdP) can be considered a producer if it sends events when a user authenticates. For example, an IdP might send a "User Logged In" event when a user has successfully logged in.

- A banking system can produce a "Payment Initiated" event when a user creates a new payment in an online banking portal.

Producers might be huge monolithic applications or small microservices. Regardless of size, a service is considered a producer if it triggers events based on certain conditions. These events are sent directly to an event broker.

Event Brokers

Event brokers are the pieces of middleware in an EDA application that receive events from producers and route them to consumers. These brokers typically run on their own servers (often as a cluster) in your infrastructure and can send and receive events from all other components in the system.

Here are some popular event brokers:

- Apache Kafka is a distributed event streaming platform that can handle high-throughput, low-latency data feeds. It's ideal for real-time data processing and integration in large-scale systems. Clients can publish and subscribe to streams of records, which Kafka stores durably and which can be processed in real time.

- RabbitMQ is a robust message broker that lets applications communicate with one another using multiple protocols, such as Advanced Message Queuing Protocol (AMQP), Simple/Streaming Text Oriented Messaging Protocol (STOMP) and MQTT. The broker is simple to use and can handle high message volumes with complex routing requirements.

- Eclipse Mosquitto is an open source message broker that is an Eclipse Foundation project maintained by Cedalo. The lightweight broker implements the MQTT protocol, making it ideal for messaging between IoT or other low-power devices.

Most major cloud service providers also offer their own event brokers as Software as a Service (SaaS). When choosing an event broker, it's important to consider your application requirements and existing infrastructure.

Processors

Processors let you transform an incoming event into a different format before sending it to consumers. This lets you perform custom logic like filtering, transforming and aggregating events. For example, you can use a processor to receive a "Payment Completed" event, enrich it with the corresponding order details and then send the transformed event to consumers.

Processors can be classified based on how they transform incoming events:

- Stream processors transform individual events and send them to consumers. They're crucial when events need to be processed in real time, as there's almost no delay between an event arriving, being processed and being delivered to consumers.

- Batch processors collect incoming events into batches that are then processed and forwarded to consumers together, unlike stream processors, which handle each event as it arrives. You'd use batch processors when real-time processing isn't required, such as for data analysis and end-of-day jobs.
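The difference between the two kinds of processors can be sketched in plain Python. This is an illustration rather than any specific framework's API: the ORDERS lookup table, event fields and function names are hypothetical. The stream processor filters and enriches each "PaymentCompleted" event the moment it arrives, while the batch processor only emits an aggregate once a batch fills up.

```python
from __future__ import annotations

from collections import defaultdict
from typing import Callable, Iterable

# Hypothetical lookup table standing in for an order service or database
ORDERS = {"o-1": {"customer": "alice", "items": 2}, "o-2": {"customer": "bob", "items": 1}}


def enrich_payment(event: dict) -> dict:
    """Stream step: enrich one "PaymentCompleted" event with its order details."""
    return {**event, "order": ORDERS.get(event["order_id"], {})}


def stream_process(events: Iterable[dict], send: Callable[[dict], None]) -> None:
    """Handle each event as soon as it arrives and forward it immediately."""
    for event in events:
        if event["type"] == "PaymentCompleted":   # filter
            send(enrich_payment(event))           # transform, then forward


def summarize(batch: list[dict]) -> dict:
    """Aggregate a batch into per-type totals."""
    totals: dict[str, float] = defaultdict(float)
    for event in batch:
        totals[event["type"]] += event.get("amount", 0.0)
    return {"type": "BatchSummary", "count": len(batch), "totals": dict(totals)}


def batch_process(events: Iterable[dict], batch_size: int, send: Callable[[dict], None]) -> None:
    """Collect events into fixed-size batches and forward one aggregate per batch."""
    batch: list[dict] = []
    for event in events:
        batch.append(event)
        if len(batch) == batch_size:
            send(summarize(batch))
            batch = []
    if batch:                                     # flush the final partial batch
        send(summarize(batch))


incoming = [
    {"type": "PaymentCompleted", "order_id": "o-1", "amount": 30.0},
    {"type": "PaymentFailed", "order_id": "o-2", "amount": 12.0},
    {"type": "PaymentCompleted", "order_id": "o-2", "amount": 12.0},
]
streamed: list[dict] = []
batched: list[dict] = []
stream_process(incoming, streamed.append)
batch_process(incoming, batch_size=2, send=batched.append)
```

Here streamed receives two enriched events right away, while batched receives only two summaries: one for the first two events and one for the leftover event.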

Consumers

Event brokers receive events from producers and then distribute them to any other components that might need to process the event. When components need to react to an event, they are called consumers. Consumers first notify the event broker about what events they're interested in by subscribing to an event topic. Once subscribed, the event broker forwards those events to the consumer to process.

The following are some examples of consumers:

- When an "Order Placed" event is sent on an e-commerce website, an inventory system can receive it and reduce its stock levels based on what was ordered.

- If a user places a product on their wishlist, a "Wishlist Item Added" event is raised and received by a recommendation system. The recommendation system can then update product recommendations for that user based on their wishlist items.

- A "Password Reset Complete" event is raised when a user finishes resetting their password. A notification system can listen to this event and email the user to let them know their password was recently updated.

Components can be consumers or producers, depending on the context. A component that reacts to an event is acting as a consumer in that scenario; if it then emits events of its own, it's also acting as a producer.
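The producer, broker and consumer roles can be illustrated with a toy, in-memory broker. Unlike a real broker, this Python sketch delivers events synchronously and keeps nothing on disk; the topic names, event fields and handler functions are hypothetical. The inventory consumer also acts as a producer when stock runs low.

```python
from __future__ import annotations

from collections import defaultdict
from typing import Callable

Handler = Callable[[dict], None]


class InMemoryBroker:
    """Toy event broker: routes each published event to every subscriber of its topic.
    For illustration only; real brokers add persistence, delivery guarantees
    and network transport."""

    def __init__(self) -> None:
        self._subscribers: dict[str, list[Handler]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Handler) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, event: dict) -> None:
        for handler in self._subscribers[topic]:
            handler(event)


broker = InMemoryBroker()
stock = {"sku-1": 3}
emails: list[str] = []
alerts: list[dict] = []


def inventory_consumer(event: dict) -> None:
    # Consumer of "orders" that also acts as a producer by emitting "StockLow"
    stock[event["sku"]] -= event["quantity"]
    if stock[event["sku"]] < 2:
        broker.publish("inventory", {"type": "StockLow", "sku": event["sku"]})


def notification_consumer(event: dict) -> None:
    emails.append(f"Order for {event['sku']} received")


broker.subscribe("orders", inventory_consumer)
broker.subscribe("orders", notification_consumer)
broker.subscribe("inventory", alerts.append)

# The producer only knows the topic name, not which components consume the event
broker.publish("orders", {"type": "OrderPlaced", "sku": "sku-1", "quantity": 2})
```

After the single publish call, both consumers have reacted independently, and the "StockLow" event has reached the "inventory" subscriber without the producer knowing it exists.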

Types of Event-Driven Architectures

You can follow several strategies when implementing EDAs in your applications. Each approach offers a unique way of building decoupled and scalable systems. The following are a few strategies you can consider.

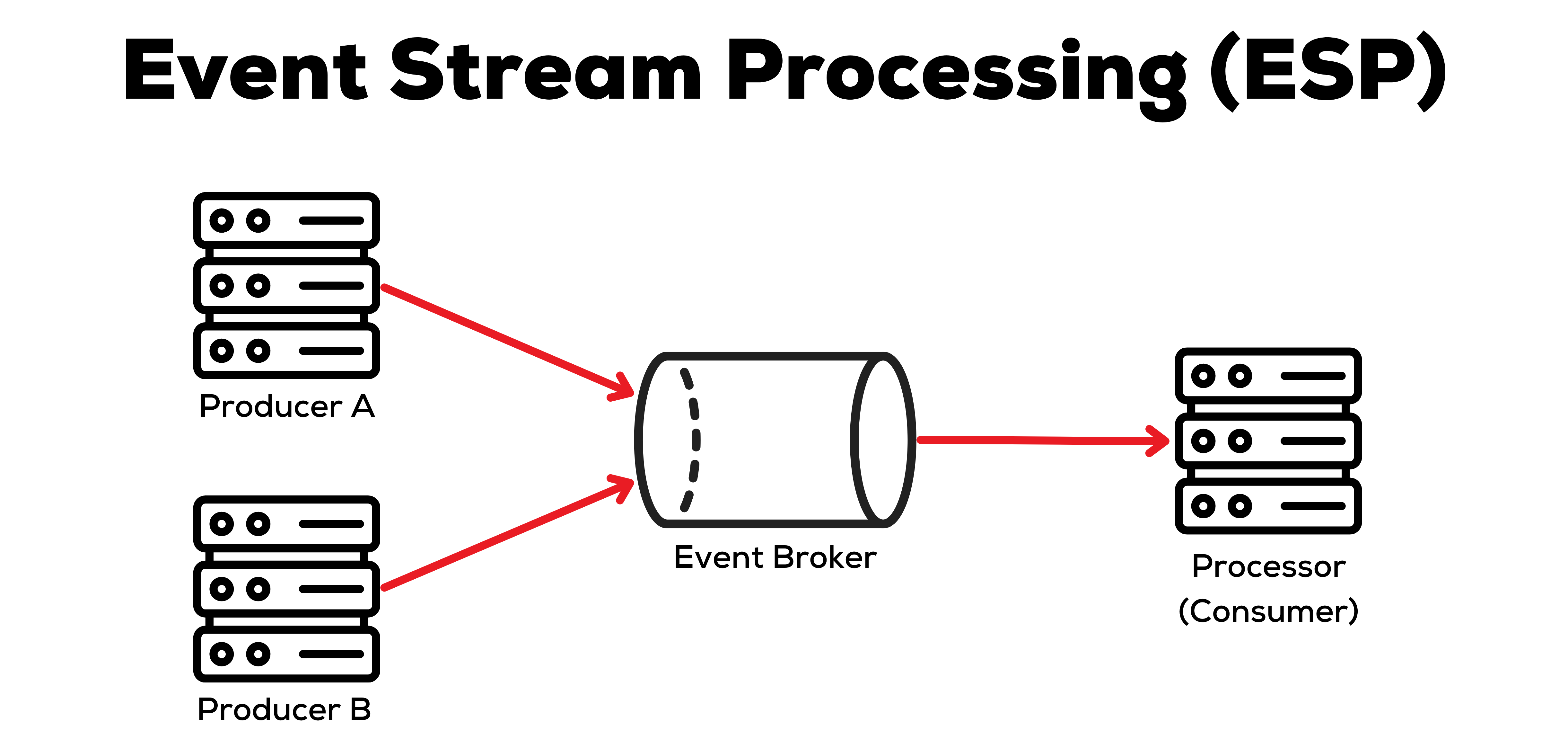

Event Stream Processing

Event stream processing (ESP) involves processing streams of events in real time as they're produced. Producers send messages to an event broker as soon as they occur, and the broker immediately forwards them to all consumers subscribed to those events. Because events are processed as they occur, components can react to and process new data immediately, making the system highly responsive.

ESP decouples systems because consumers and producers communicate only through events. You can also scale consumers horizontally, running more or fewer instances that share the event load based on real-time event volumes.

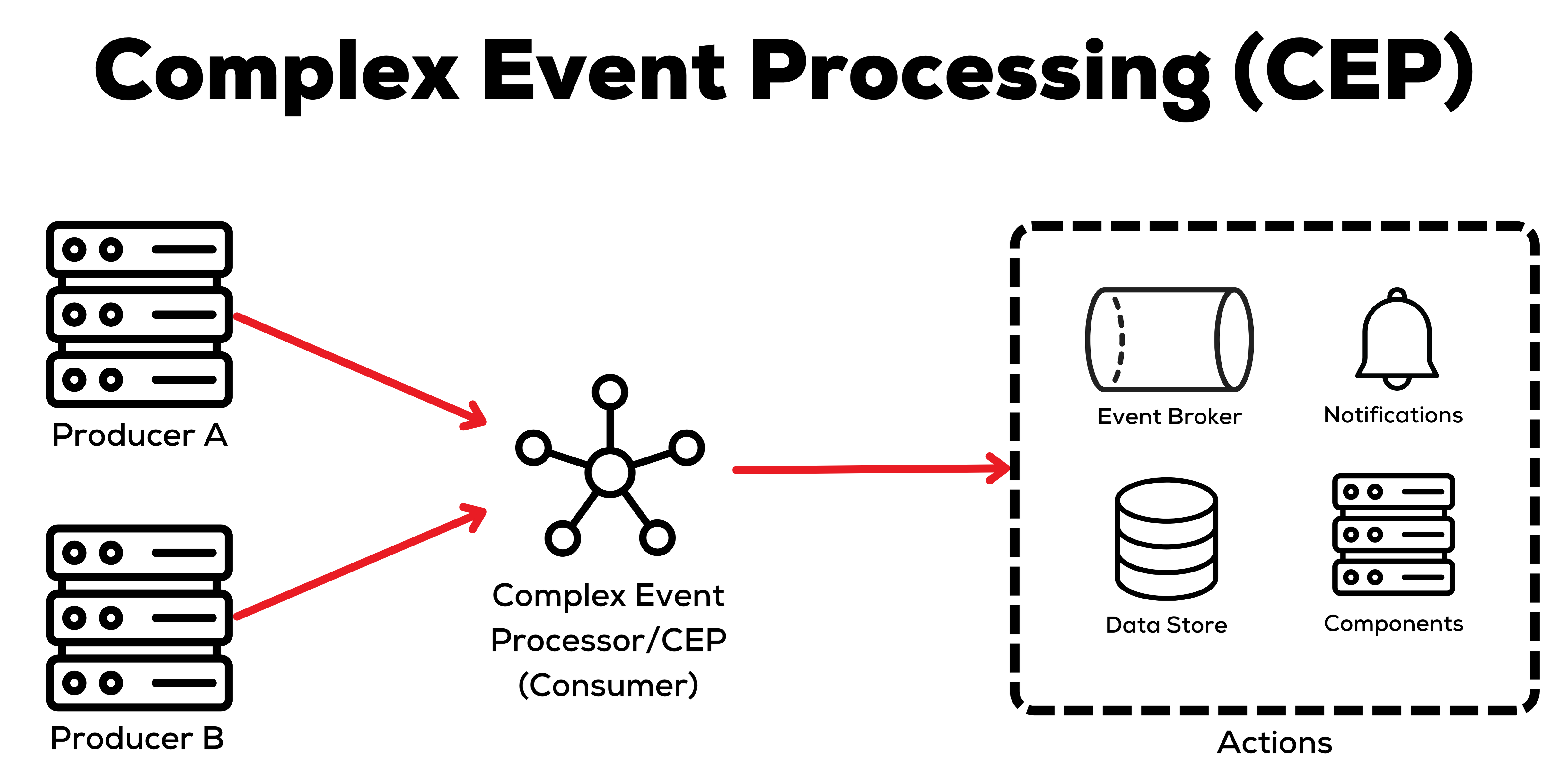

Complex Event Processing

With complex event processing (CEP), consumers analyze multiple events from different event streams. These consumers look for patterns or anomalies in the continuous stream of events. Matching patterns or anomalies trigger actions or alerts, which can initiate a process in another system component, notify users of an issue, send a new event to an event broker or store the result in a data store. This pattern matching happens in real time as events are received. Real-time analysis makes CEP well-suited for use cases like fraud detection and network monitoring.

Using events to identify patterns decouples CEP from producers. You can also route events through an event broker for further decoupling, but this is optional. Regarding scalability, you should design your system such that the number of CEP consumers can be increased or decreased based on the volume of events received.
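A CEP rule is essentially a stateful pattern matcher over a time window. As a hypothetical fraud-detection example, the following Python sketch raises a "PossibleFraud" event when one card has three failed payments within 60 seconds. The threshold, window and event fields are assumptions, and the sketch assumes events arrive in timestamp order; dedicated CEP engines also deal with out-of-order and late events.

```python
from __future__ import annotations

from collections import defaultdict, deque


class FailedPaymentDetector:
    """Hypothetical CEP rule: alert when one card has `threshold` or more
    failed payments within `window_seconds`. Assumes events arrive in ts order."""

    def __init__(self, threshold: int = 3, window_seconds: float = 60.0) -> None:
        self.threshold = threshold
        self.window = window_seconds
        self._recent: dict[str, deque] = defaultdict(deque)

    def on_event(self, event: dict) -> dict | None:
        if event["type"] != "PaymentFailed":
            return None
        times = self._recent[event["card_id"]]
        times.append(event["ts"])
        # Drop failures that have slid out of the time window
        while times and event["ts"] - times[0] > self.window:
            times.popleft()
        if len(times) >= self.threshold:
            times.clear()  # reset so one burst produces one alert
            return {"type": "PossibleFraud", "card_id": event["card_id"], "at": event["ts"]}
        return None


detector = FailedPaymentDetector()
stream = [
    {"type": "PaymentFailed", "card_id": "c-9", "ts": 0},
    {"type": "PaymentFailed", "card_id": "c-9", "ts": 100},  # first failure is now outside the window
    {"type": "PaymentSucceeded", "card_id": "c-9", "ts": 110},
    {"type": "PaymentFailed", "card_id": "c-9", "ts": 120},
    {"type": "PaymentFailed", "card_id": "c-9", "ts": 130},
]
alerts = [a for e in stream if (a := detector.on_event(e)) is not None]
print(alerts)  # [{'type': 'PossibleFraud', 'card_id': 'c-9', 'at': 130}]
```

The alert itself is just another event, so it could be published back to a broker for other components to act on.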

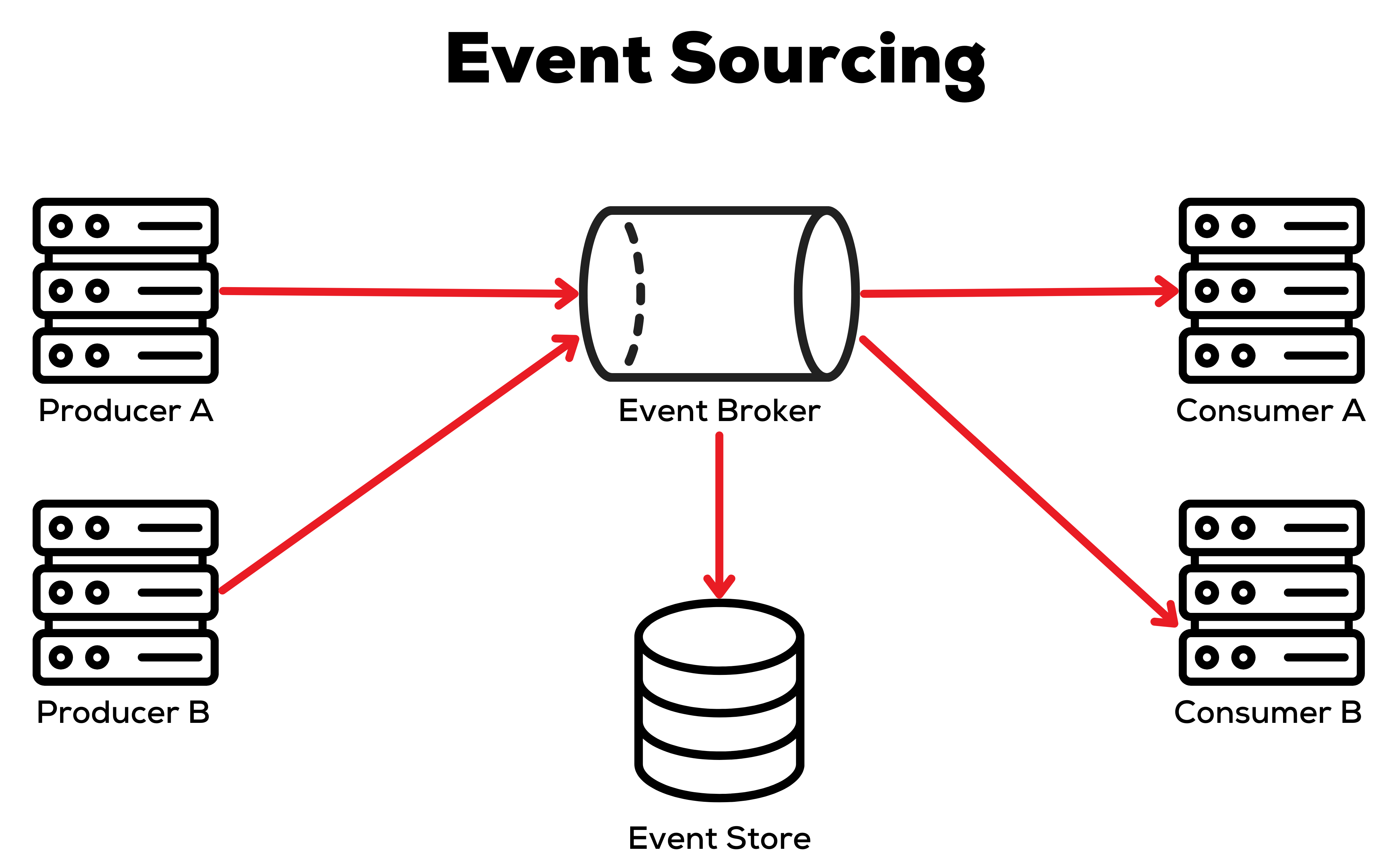

Event Sourcing

Event sourcing lets you keep track of application state and data by storing changes to state and data as sequential events in an event store. Systems can replay these events to determine the overall state or data at a specific point in time. An event broker is optional for event sourcing but is often used to distribute events to different components in a system as they occur. Some event brokers offer durable stores that store events for other system components to query.

With event sourcing, data and application state can be retrieved from the event log rather than having to query a database. The event log decentralizes data and application state to help you create decoupled systems. You can also quickly scale your application by spreading the event log over multiple nodes in your system. The number of nodes can be scaled up or down based on event volume.
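The core of event sourcing is folding an ordered log of events into state. This minimal Python sketch uses a hypothetical bank-account domain and an in-memory, append-only list in place of a real event store; replaying a prefix of the log yields the state as of that point in time.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class AccountEvent:
    seq: int        # position in the append-only log
    type: str       # "AccountOpened", "MoneyDeposited" or "MoneyWithdrawn"
    amount: int = 0


class EventStore:
    """Minimal append-only event log (in memory, for illustration only)."""

    def __init__(self) -> None:
        self._log: list[AccountEvent] = []

    def append(self, type_: str, amount: int = 0) -> AccountEvent:
        event = AccountEvent(seq=len(self._log) + 1, type=type_, amount=amount)
        self._log.append(event)
        return event

    def events(self, up_to_seq: int | None = None) -> list[AccountEvent]:
        return [e for e in self._log if up_to_seq is None or e.seq <= up_to_seq]


def apply(balance: int, event: AccountEvent) -> int:
    """Pure state transition: current state + one event -> next state."""
    if event.type == "MoneyDeposited":
        return balance + event.amount
    if event.type == "MoneyWithdrawn":
        return balance - event.amount
    return balance  # AccountOpened and unknown types leave the balance unchanged


def replay(store: EventStore, up_to_seq: int | None = None) -> int:
    """Rebuild the balance by applying events in log order."""
    balance = 0
    for event in store.events(up_to_seq):
        balance = apply(balance, event)
    return balance


store = EventStore()
store.append("AccountOpened")
store.append("MoneyDeposited", 100)
store.append("MoneyWithdrawn", 30)
store.append("MoneyDeposited", 50)
print(replay(store))               # 120 (current state)
print(replay(store, up_to_seq=3))  # 70 (state as of the third event)
```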

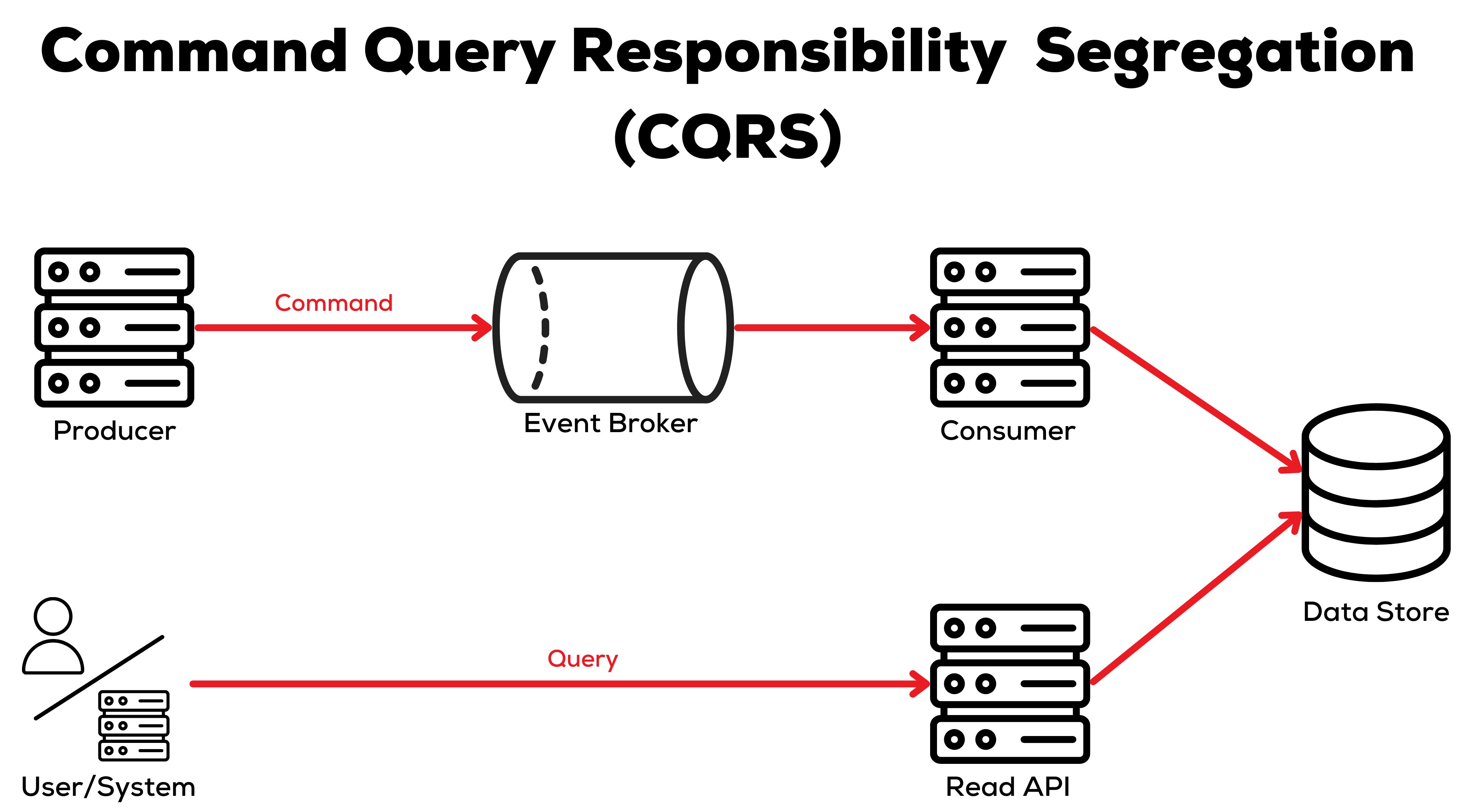

Command Query Responsibility Segregation

Command Query Responsibility Segregation (CQRS) lets you separate the responsibility of handling commands, which update data in a system, from that of handling queries, which retrieve data from it. In CQRS, producers execute commands that generate events to update the application state or data. Queries read current data directly from a data store, which is updated asynchronously from the events that commands generate. An event broker is typically used to distribute these events.

Using events along with an event broker lets you decouple system components that handle commands from those that handle queries. CQRS works particularly well when building scalable systems since you can scale read and write operations independently. This is highly beneficial because many systems handle far more reads than writes.
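The split between the write side and the read side can be sketched as follows. In this Python illustration, which uses hypothetical product commands and events, a deque stands in for the broker topic and the drain function stands in for an asynchronous consumer. Running it shows the eventual consistency that CQRS introduces: a query issued right after a command may not see the change yet.

```python
from __future__ import annotations

from collections import deque


class ProductCommands:
    """Write side: validates commands and emits events; never answers queries."""

    def __init__(self, outbox: deque) -> None:
        self._outbox = outbox          # stands in for a broker topic
        self._ids: set[str] = set()    # minimal write-side state used for validation

    def create_product(self, product_id: str, name: str) -> None:
        if product_id in self._ids:
            raise ValueError(f"product {product_id} already exists")
        self._ids.add(product_id)
        self._outbox.append({"type": "ProductCreated", "id": product_id, "name": name})

    def rename_product(self, product_id: str, name: str) -> None:
        if product_id not in self._ids:
            raise KeyError(product_id)
        self._outbox.append({"type": "ProductRenamed", "id": product_id, "name": name})


class ProductReadModel:
    """Read side: a query-optimized view built from events and queried directly."""

    def __init__(self) -> None:
        self._names: dict[str, str] = {}

    def project(self, event: dict) -> None:
        if event["type"] in ("ProductCreated", "ProductRenamed"):
            self._names[event["id"]] = event["name"]

    def get_name(self, product_id: str) -> str | None:
        return self._names.get(product_id)


def drain(outbox: deque, read_model: ProductReadModel) -> None:
    """Stands in for the asynchronous consumer that keeps the read model current."""
    while outbox:
        read_model.project(outbox.popleft())


outbox: deque = deque()
commands, reads = ProductCommands(outbox), ProductReadModel()
commands.create_product("p-1", "Kettle")
print(reads.get_name("p-1"))  # None: the read side hasn't caught up yet
drain(outbox, reads)
commands.rename_product("p-1", "Electric kettle")
drain(outbox, reads)
print(reads.get_name("p-1"))  # Electric kettle
```

Because the two classes share nothing but the event stream, each side could run as its own service and be scaled separately.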

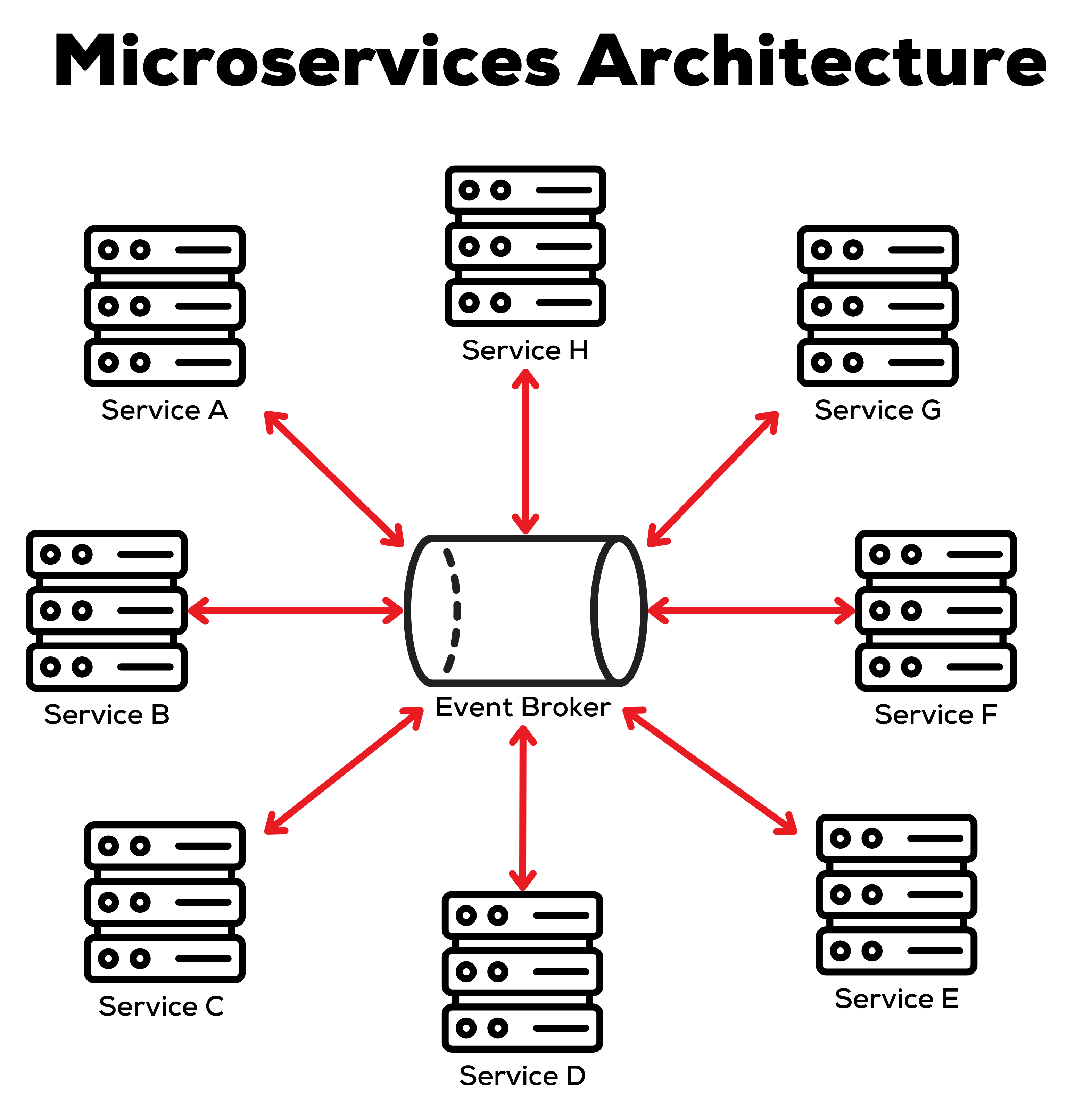

Microservices Architecture

Microservices architecture involves splitting an application into small, independent services. Each service is responsible for a specific area of functionality in the system and communicates with other services using well-defined event schemas.

Microservices are a great way of decoupling your application since each piece of system functionality belongs to its own service. Teams can change individual services without breaking others, provided they don't make breaking changes to events that other consumers use. Scaling is also straightforward since you can scale individual services independently.
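One common way to avoid breaking other services is to make only additive schema changes and have each consumer upgrade older event versions as it reads them. The following Python sketch assumes a hypothetical "OrderPlaced" event whose version 2 adds a currency field; the "USD" default applied to version 1 events is likewise an assumption. The consumer also ignores fields it doesn't need, such as coupon.

```python
def upcast_order_placed(event: dict) -> dict:
    """Normalize any supported version of a hypothetical "OrderPlaced" event to v2.

    v1: {"schema_version": 1, "order_id", "total"}
    v2: {"schema_version": 2, "order_id", "total", "currency"}  (additive change)
    """
    version = event.get("schema_version", 1)
    if version == 1:
        # Additive, non-breaking change: fill the new field with a documented default
        return {**event, "schema_version": 2, "currency": "USD"}
    if version == 2:
        return event
    raise ValueError(f"unsupported OrderPlaced schema_version: {version}")


def billing_service_handle(event: dict) -> str:
    order = upcast_order_placed(event)
    # Tolerant reader: use only the fields this service needs, ignore the rest
    return f"{order['order_id']}: {order['total']:.2f} {order['currency']}"


old = {"schema_version": 1, "order_id": "o-1", "total": 19.5}
new = {"schema_version": 2, "order_id": "o-2", "total": 5.0, "currency": "EUR", "coupon": "X1"}
print(billing_service_handle(old))  # o-1: 19.50 USD
print(billing_service_handle(new))  # o-2: 5.00 EUR
```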

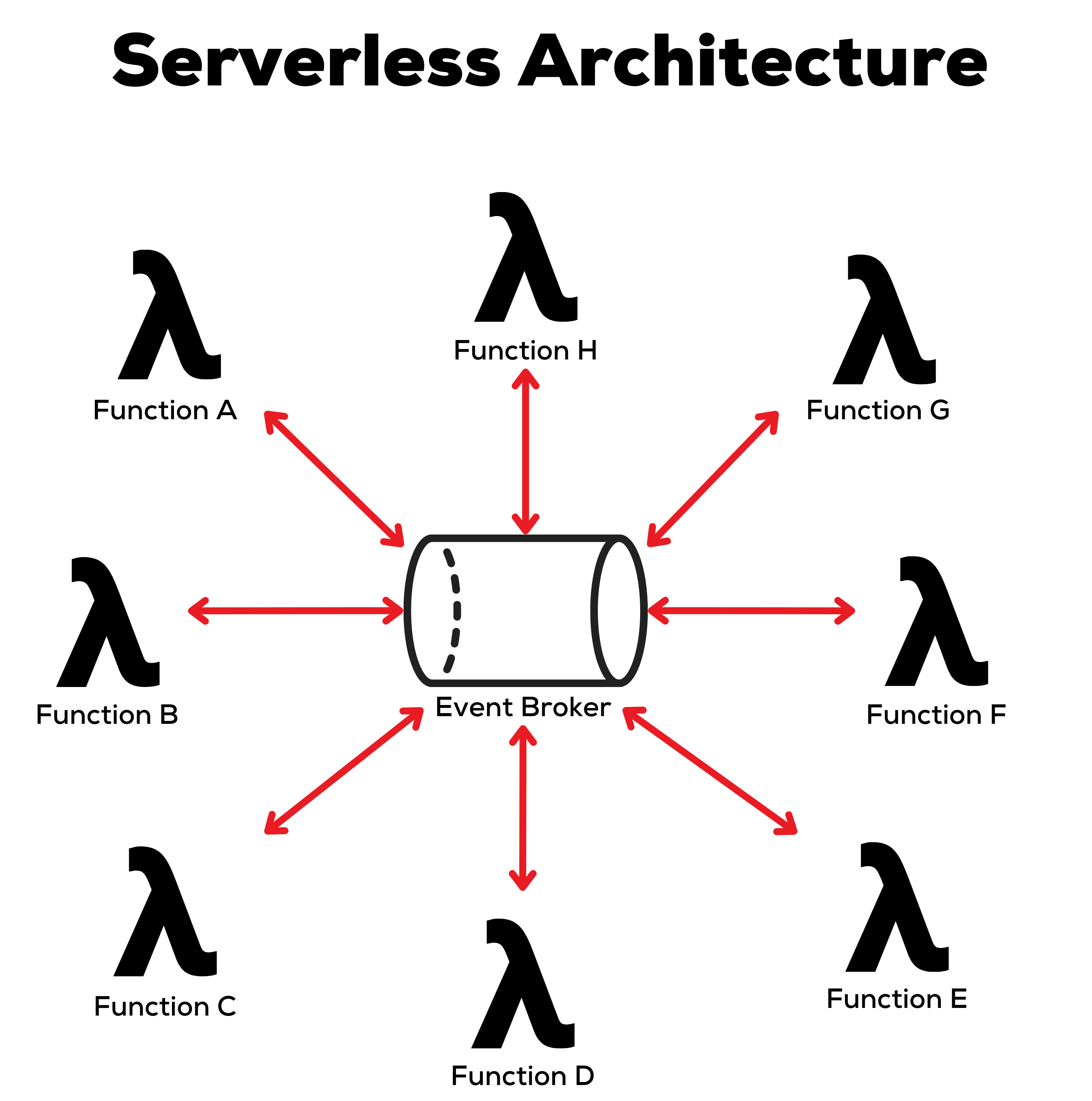

Serverless Architecture

In serverless architectures, functions act as consumers triggered by events from an event broker. The infrastructure for the functions and event broker is typically managed by a cloud provider. Events come from various producers, such as other functions or infrastructure components hosted by the cloud provider.

Serverless architecture lets you decouple functions from one another and their underlying infrastructure. Functions are triggered by events and can scale automatically based on event volume without you having to worry about allocating additional servers to run them.

Examples of Event-Driven Architecture Using Apache Kafka

Apache Kafka is a powerful event broker capable of handling high-throughput, real-time data streams with low latency. The broker stores events durably, with configurable retention that can keep events indefinitely, and can be distributed across multiple nodes in different availability zones and regions, making it scalable and robust. The open source platform is a popular choice when building applications using event-driven architecture.

In Kafka, a topic is a category or channel to which producers can send events, which consumers can then read. It's the primary unit of organizing events, letting you group related events within a single topic. Topics also have some advanced features, like storing a log of events to disk and partitioning events in a topic across multiple brokers for parallel processing. The following are examples of how you can implement the different EDA patterns described above in Kafka.
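Partitioning determines both ordering and parallelism. The following Python sketch models two behaviors of Kafka topics in simplified form: records with the same key always go to the same partition, which preserves their relative order, and every consumer group receives every record, while partitions are divided among the members of each group. Kafka's default partitioner hashes keys with murmur2, and Kafka offers several assignment strategies (such as range, round-robin and sticky); this sketch substitutes crc32 and a simple round-robin assignment for brevity, and the topic, key and group names are hypothetical.

```python
from __future__ import annotations

import zlib
from collections import defaultdict

NUM_PARTITIONS = 4


def partition_for(key: str, num_partitions: int = NUM_PARTITIONS) -> int:
    """Map a record key to a partition. crc32 is a simple, deterministic stand-in
    for the murmur2 hash used by Kafka's default partitioner."""
    return zlib.crc32(key.encode("utf-8")) % num_partitions


def assign_partitions(consumers: list[str], num_partitions: int = NUM_PARTITIONS) -> dict[str, list[int]]:
    """Round-robin assignment: each partition goes to exactly one consumer in a group."""
    assignment: dict[str, list[int]] = {c: [] for c in consumers}
    for p in range(num_partitions):
        assignment[consumers[p % len(consumers)]].append(p)
    return assignment


# A tiny topic: records with the same key always land in the same partition,
# so their relative order is preserved.
topic: dict[int, list[tuple[str, str]]] = defaultdict(list)
for key, value in [("user-1", "login"), ("user-2", "login"), ("user-1", "logout")]:
    topic[partition_for(key)].append((key, value))

# Two independent consumer groups each read the whole topic; within a group,
# partitions are split between members so they can work in parallel.
groups = {
    "billing": assign_partitions(["billing-a", "billing-b"]),
    "analytics": assign_partitions(["analytics-a"]),
}
print(groups["billing"])  # {'billing-a': [0, 2], 'billing-b': [1, 3]}
```

Adding a third member to the "billing" group would spread the same four partitions across more consumers, which is how a Kafka-based consumer scales out; it can't usefully grow beyond one consumer per partition.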

Event Stream Processing

Kafka provides Kafka Streams, which lets you develop consumers that receive and process continuous data streams. Multiple processors can be chained to form a processor topology. These topologies let you spread your consumer logic across multiple processors, with each performing a specialized task on the data.

To use Kafka Streams, you must first set up the different topics to which events should be sent. Then, you create a Kafka Streams project in Java that receives and processes the continuous data stream. Once you've tested the processor locally, you can deploy it to your cloud environment and connect it to your Kafka cluster.

Complex Event Processing

CEP is also possible in Kafka. While you could set up a Kafka Streams processor topology with windowed processors to capture events over time and analyze them, you're often better off using a dedicated stream processing framework like Apache Flink (which includes the FlinkCEP library) or Apache Spark. These tools let you write code that identifies patterns in events over time. If specific patterns are detected, the CEP tool can take action, like raising another event in Kafka or interacting with another system.

To use Apache Flink with Kafka, you must first install both tools and set up the topics in Kafka. Once set up, you can write pattern-matching logic in Java that can run in Apache Flink. Flink can then receive the continuous stream of events from Kafka and run them through the CEP logic to find patterns.

Event Sourcing

To implement event sourcing, you need a durable event store that can store events sequentially so that other systems can replay them. Fortunately, Kafka can retain events indefinitely and replicates them across multiple brokers in a cluster, which makes it suitable for this purpose.

First, create the necessary topics for your application in your Kafka cluster. Then, configure those topics to retain events indefinitely (for example, by setting the topic's retention.ms configuration to -1) so they act as permanent event logs. If performance is critical, you can also set up a key-value store or database that holds the latest state of the data. As new events come in, this store is updated from them, which makes queries for the latest data more efficient while still letting you query the event log for historical data when necessary.

Command Query Responsibility Segregation

When implementing CQRS, you can use Kafka as an event store to decouple commands from queries using topics. The system can process commands asynchronously by sending events on Kafka topics to be processed in real time or in batches, depending on the processing your consumers implement. Data can be queried separately and directly from the data store.

You'll first need to set up a Kafka cluster. You can then configure topics for your different commands and subscribe consumers to those topics so they process events and update a data store as producers send events through the topics.

Microservices Architecture

Apache Kafka can act as the event broker that decouples services in a microservices architecture. Services can send events to Kafka topics, which are then forwarded to subscribed services instead of calling other services directly. Kafka also lets multiple services subscribe to a topic, meaning multiple services can react to a single event.

To implement Kafka in a microservices architecture, you need a Kafka cluster with topics for the different services. Then, configure all your services to send events to the cluster and subscribe to topics to receive events from other services.

Serverless Architecture

Kafka can coordinate your serverless architecture through integrations with the function services of all major cloud providers, such as AWS Lambda, Azure Functions and Google Cloud Functions. These integrations let you trigger serverless functions using events in a Kafka topic, and functions can in turn send events to Kafka to trigger other serverless functions.

To use Kafka in your serverless environment, you must first set it up in your cloud environment. Some cloud providers offer Kafka as a managed service, while others offer integrations that let you trigger serverless functions using your cluster. Once set up, you can configure your serverless functions to trigger based on events sent to specific topics.
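As a rough illustration, here's a Python function handler triggered by a batch of Kafka records. The payload shape is modeled on the event AWS Lambda delivers for Kafka event sources, where records are grouped under "<topic>-<partition>" keys and each record's value is base64-encoded; other providers use different shapes, so check your provider's documentation. The topic name, event types and notification side effect are hypothetical.

```python
import base64
import json


def handler(event: dict, context: object = None) -> dict:
    """Serverless function triggered by a batch of Kafka records.

    Assumes an AWS Lambda-style Kafka payload: event["records"] maps
    "<topic>-<partition>" to a list of records whose "value" is base64-encoded JSON.
    """
    processed = []
    for records in event.get("records", {}).values():
        for record in records:
            body = json.loads(base64.b64decode(record["value"]))
            if body.get("type") == "PasswordResetComplete":
                # Hypothetical side effect: queue a notification email
                processed.append(f"email:{body['user_id']}")
    return {"processed": processed}


def kafka_record(topic: str, partition: int, offset: int, payload: dict) -> dict:
    """Build a test record in the assumed payload shape."""
    value = base64.b64encode(json.dumps(payload).encode("utf-8")).decode("ascii")
    return {"topic": topic, "partition": partition, "offset": offset, "value": value}


sample = {
    "eventSource": "aws:kafka",
    "records": {
        "account-events-0": [
            kafka_record("account-events", 0, 15, {"type": "PasswordResetComplete", "user_id": "u-7"}),
            kafka_record("account-events", 0, 16, {"type": "UserLoggedIn", "user_id": "u-7"}),
        ]
    },
}
print(handler(sample))  # {'processed': ['email:u-7']}
```

Keeping the handler free of provider-specific clients makes it easy to test locally with hand-built payloads like the one above before wiring it to a real trigger.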

Conclusion

In this article, we explained event-driven architecture and the pros and cons of using it. We also looked at several popular patterns for implementing EDA in applications and how to implement each of them with Apache Kafka.

If you are implementing an event-driven architecture for a highly distributed application that must respond in real time, we encourage you to take a close look at Equinix dedicated cloud. It provides private compute and storage in more than 30 densely populated metro areas globally and a way to quickly and conveniently provision private network links to public cloud onramps and network operators in the same locations.

You can create redundant infrastructure across regions to ensure event producers and consumers are always available. Private connectivity (rather than public, over the internet) makes failover seamless. You can integrate with public cloud microservices to make your event processing modular and scalable, use cloud serverless functions and the plethora of cloud-based monitoring, logging and analytics services. By connecting to the public cloud providers directly and locally, you get the lowest possible latency to deliver real-time responsiveness. Try it for free!